@abelsu7

2020-03-03T09:57:59.000000Z

Quickly building a Ceph (jewel 10.x) cluster on virtual machines with virt-manager on Linux

Ceph KVM virt-manager

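0. Preparing resources

- Download the CentOS-7-x86_64-Minimal-1908.iso image, which is used to install the operating system on the virtual machines

- Free disk space: >= 200G (an estimate, not a hard requirement; whatever meets your actual needs is fine)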

My local host environment is as follows:

    > cat /etc/redhat-release
    Fedora release 31 (Thirty One)
    > uname -r
    5.4.8-200.fc31.x86_64
    > df -hT /
    Filesystem              Type  Size  Used Avail Use% Mounted on
    /dev/mapper/fedora-root ext4  395G  123G  254G  33% /
    > virt-manager --version
    2.2.1
    > free -h
                  total        used        free      shared  buff/cache   available
    Mem:           15Gi       2.4Gi        10Gi       434Mi       2.3Gi        12Gi
    Swap:            0B          0B          0B
    > screenfetch
    root@ins-7590
    OS: Fedora 31 ThirtyOne
    Kernel: x86_64 Linux 5.4.8-200.fc31.x86_64
    Uptime: 40m
    Packages: 2237
    Shell: zsh 5.7.1
    Resolution: 3600x1080
    DE: GNOME
    WM: GNOME Shell
    WM Theme:
    GTK Theme: Adwaita-dark [GTK2/3]
    Icon Theme: Adwaita
    Font: Cantarell 11
    CPU: Intel Core i7-9750H @ 12x 4.5GHz
    GPU: Mesa DRI Intel(R) UHD Graphics 630 (Coffeelake 3x8 GT2)
    RAM: 2469MiB / 15786MiB

1. Setting up the virtual machines

The plan is to build a three-node Ceph cluster on three virtual machines:

| hostname | IP | Notes |
|---|---|---|
| ceph-node1 | 192.168.200.101 | deploy, 1 mon, 2 osd |
| ceph-node2 | 192.168.200.102 | 1 mon, 2 osd |
| ceph-node3 | 192.168.200.103 | 1 mon, 2 osd |

1.1 Creating the disk images

Create a directory (for example /mnt/ceph/) to hold the disk images of the three virtual machines:

    ~ > mkdir -p /mnt/ceph
    ~ > cd /mnt/ceph/
    /mnt/ceph > mkdir ceph-node1 ceph-node2 ceph-node3
    /mnt/ceph > ls -l
    total 12
    drwxr-xr-x 2 root root 4096 Mar  2 15:23 ceph-node1
    drwxr-xr-x 2 root root 4096 Mar  2 15:23 ceph-node2
    drwxr-xr-x 2 root root 4096 Mar  2 15:23 ceph-node3

Note: prepare ceph-node1 first; the other two machines can be copied from it later with virt-clone.

Use qemu-img to create a 100G system disk for ceph-node1, plus two data disks of 2T each. All images use the qcow2 format:

    /mnt/ceph > qemu-img create -f qcow2 ceph-node1/ceph-node1.qcow2 100G
    Formatting 'ceph-node1/ceph-node1.qcow2', fmt=qcow2 size=107374182400 cluster_size=65536 lazy_refcounts=off refcount_bits=16
    /mnt/ceph > qemu-img create -f qcow2 ceph-node1/disk-1.qcow2 2T
    Formatting 'ceph-node1/disk-1.qcow2', fmt=qcow2 size=2199023255552 cluster_size=65536 lazy_refcounts=off refcount_bits=16
    /mnt/ceph > qemu-img create -f qcow2 ceph-node1/disk-2.qcow2 2T
    Formatting 'ceph-node1/disk-2.qcow2', fmt=qcow2 size=2199023255552 cluster_size=65536 lazy_refcounts=off refcount_bits=16
    /mnt/ceph > tree -h
    .
    ├── [ 4.0K] ceph-node1
    │   ├── [ 194K] ceph-node1.qcow2
    │   ├── [ 224K] disk-1.qcow2
    │   └── [ 224K] disk-2.qcow2
    ├── [ 4.0K] ceph-node2
    └── [ 4.0K] ceph-node3
    3 directories, 3 files
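
The `size=` values in the `Formatting` lines are just the requested sizes in bytes, because qemu-img reads the `G` and `T` suffixes as powers of 1024. A minimal sketch to check the arithmetic (the helper name `to_bytes` is made up for this illustration):

```shell
# to_bytes N UNIT: convert a size into bytes using 1024-based units,
# which is how qemu-img interprets the G and T suffixes.
# (to_bytes is a hypothetical helper, not part of qemu-img.)
to_bytes() {
    n=$1
    case $2 in
        G) echo $((n * 1024 * 1024 * 1024)) ;;
        T) echo $((n * 1024 * 1024 * 1024 * 1024)) ;;
        *) echo "unknown unit: $2" >&2; return 1 ;;
    esac
}

to_bytes 100 G   # system disk
to_bytes 2 T     # each data disk
```

The qcow2 images are allocated lazily, which is why `tree -h` reports only a couple of hundred KB per file right after creation.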

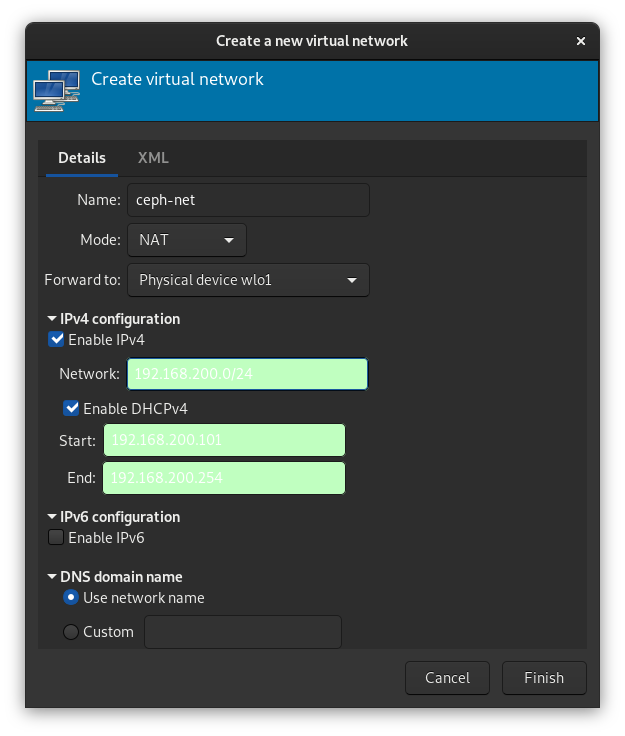

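1.2 Creating the virtual network

In virt-manager, create a virtual network named ceph-net in NAT mode, forwarding to the host's internet-facing interface. The planned subnet is 192.168.200.0/24, with a DHCP range of 192.168.200.101 to 192.168.200.254.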

The XML definition generated automatically for ceph-net looks like this:

    <network>
      <name>ceph-net</name>
      <uuid>cc046613-11c4-4db7-a478-ac6d568e69ec</uuid>
      <forward dev="wlo1" mode="nat">
        <nat>
          <port start="1024" end="65535"/>
        </nat>
        <interface dev="wlo1"/>
      </forward>
      <bridge name="virbr1" stp="on" delay="0"/>
      <mac address="52:54:00:86:86:e8"/>
      <domain name="ceph-net"/>
      <ip address="192.168.200.1" netmask="255.255.255.0">
        <dhcp>
          <range start="192.168.200.101" end="192.168.200.254"/>
        </dhcp>
      </ip>
    </network>

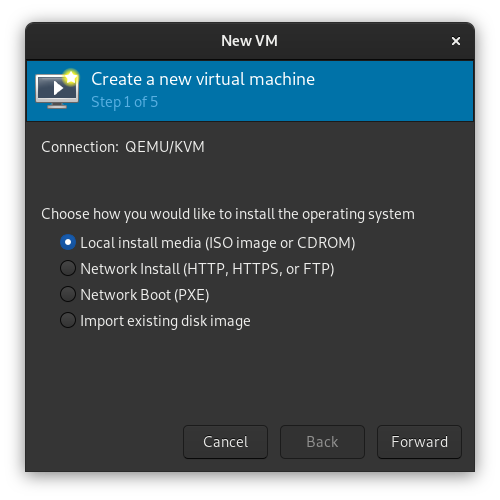
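
The ceph-net network could also be created from the command line instead of through the virt-manager dialogs. The sketch below is an alternative, not what was done in this walkthrough. It assumes a trimmed-down definition (no uuid, MAC, or bridge name, which libvirt fills in when the network is defined, and no fixed `forward dev`), and `/tmp/ceph-net.xml` is a hypothetical scratch path. The `virsh` calls are wrapped in a function that is not invoked automatically.

```shell
# Emit a minimal NAT network definition for ceph-net.
# Assumption: uuid, MAC and bridge name are omitted so libvirt generates them.
ceph_net_xml() {
    cat <<'EOF'
<network>
  <name>ceph-net</name>
  <forward mode="nat"/>
  <ip address="192.168.200.1" netmask="255.255.255.0">
    <dhcp>
      <range start="192.168.200.101" end="192.168.200.254"/>
    </dhcp>
  </ip>
</network>
EOF
}

# Define, start and autostart the network (run as root on the host).
# /tmp/ceph-net.xml is a hypothetical scratch path.
define_ceph_net() {
    ceph_net_xml > /tmp/ceph-net.xml &&
    virsh net-define /tmp/ceph-net.xml &&
    virsh net-start ceph-net &&
    virsh net-autostart ceph-net
}

ceph_net_xml   # preview only; call define_ceph_net to apply it
```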

1.3 Installing the operating system

Create a new virtual machine in virt-manager:

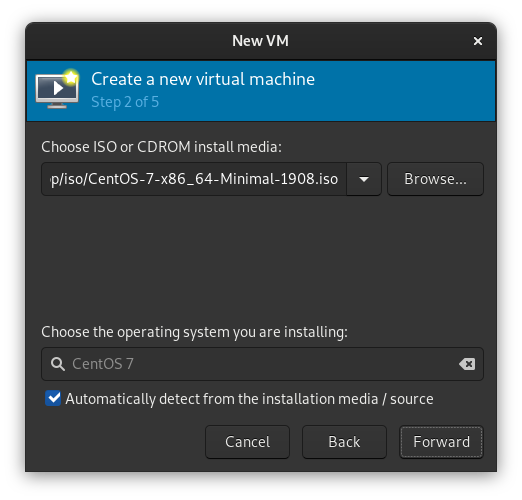

Select the downloaded CentOS-7-x86_64-Minimal-1908.iso as the installation image:

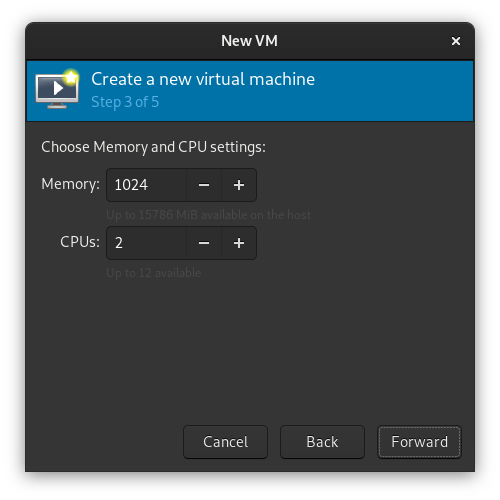

2 CPU cores and 1G of memory; the defaults are fine:

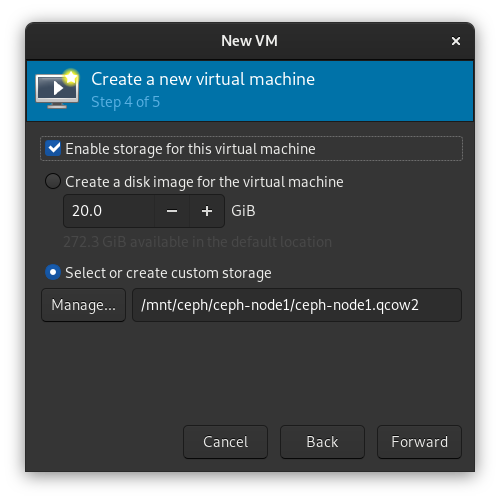

Select the previously created ceph-node1.qcow2 as the system image:

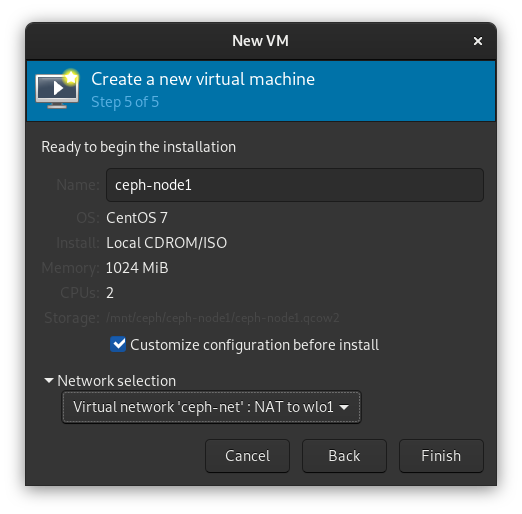

Name the virtual machine ceph-node1, choose ceph-net as the network, and tick Customize configuration before install so that the two 2T disks can be added:

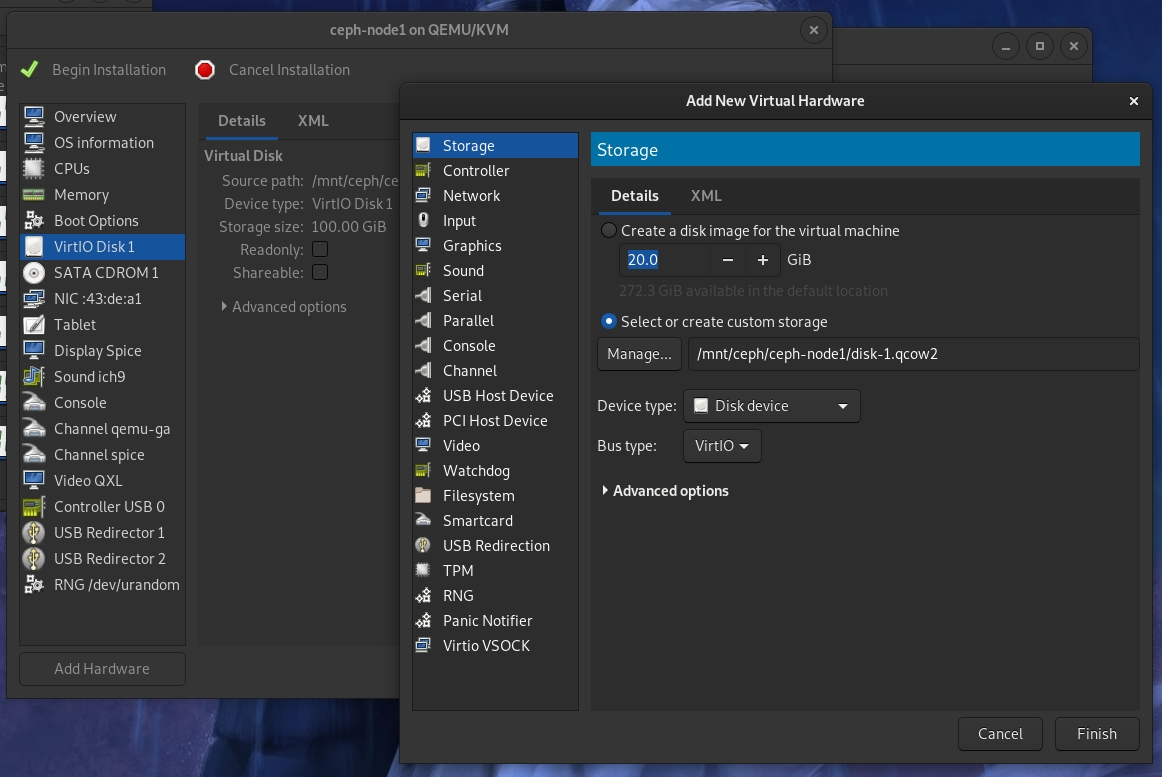

Add disk-1.qcow2 and disk-2.qcow2 as VirtIO Disks:

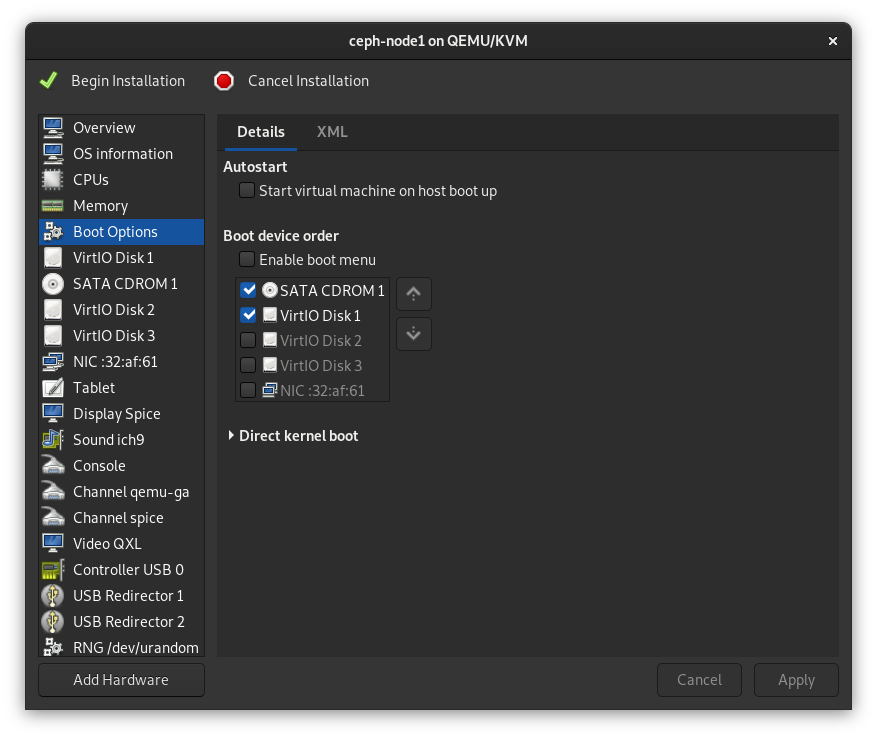

Finally, confirm under Boot Options that the CDROM is the first boot device, then begin the installation:

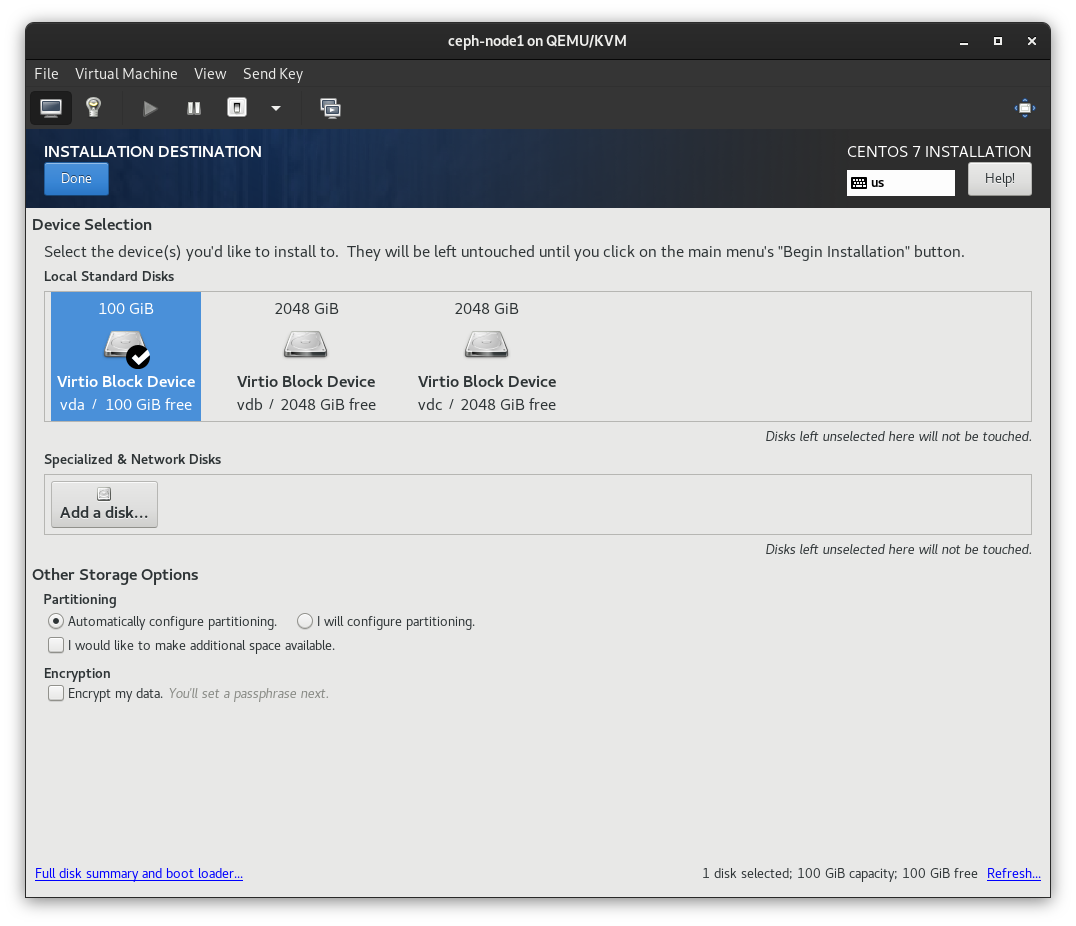

The familiar CentOS installer appears next. Select the 100G disk as the system disk, and check that the two 2T disks were detected:

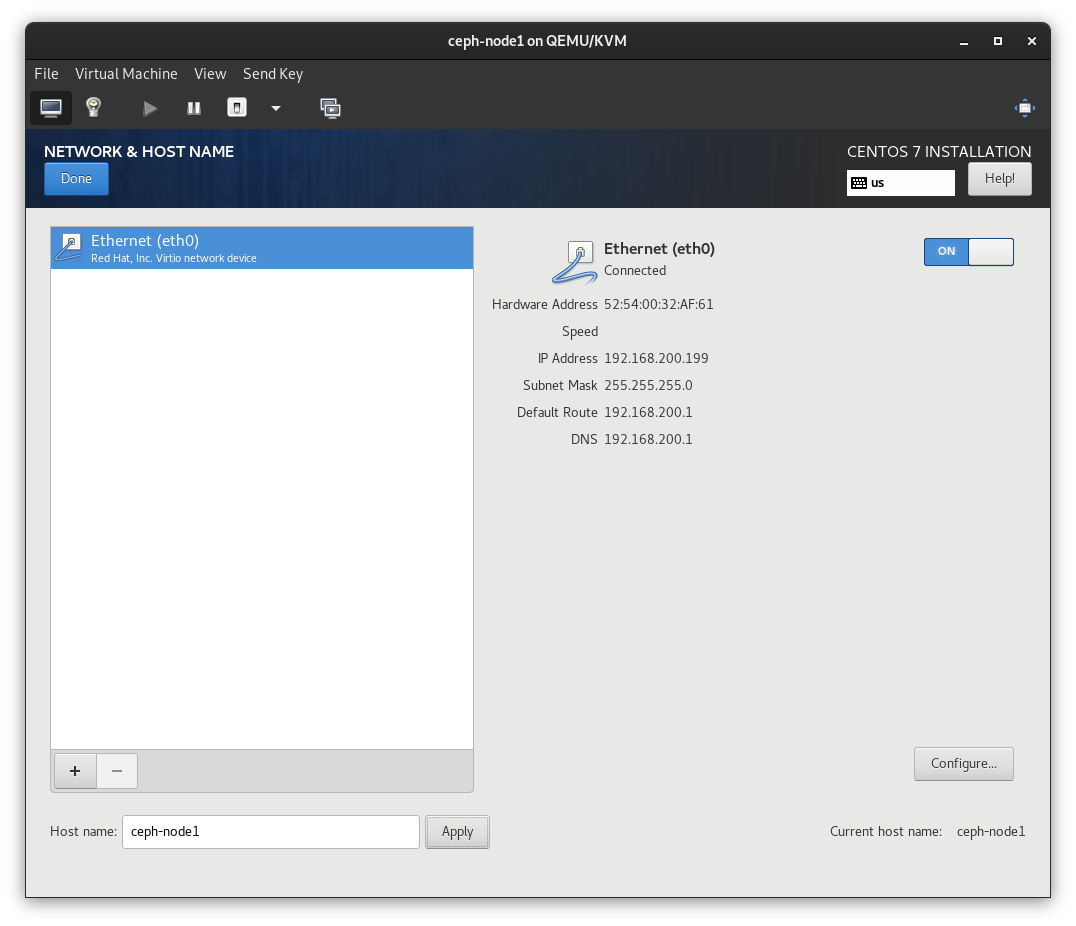

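Turn on the network switch and confirm that the machine received a dynamically assigned IP address in the 192.168.200.0/24 subnet; the exact address can be changed after the system is installed. Also change the hostname in the lower-left corner to ceph-node1 and click Apply.

The installation can now start; remember to set the root password. Once it finishes, reboot into the system:

    [root@ceph-node1 ~]$ lsblk
    NAME                        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sr0                          11:0    1 1024M  0 rom
    vda                         252:0    0  100G  0 disk
    ├─vda1                      252:1    0    1G  0 part /boot
    └─vda2                      252:2    0   99G  0 part
      ├─centos_ceph--node1-root 253:0    0   50G  0 lvm  /
      ├─centos_ceph--node1-swap 253:1    0    2G  0 lvm  [SWAP]
      └─centos_ceph--node1-home 253:2    0   47G  0 lvm  /home
    vdb                         252:16   0    2T  0 disk
    vdc                         252:32   0    2T  0 disk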

1.4 Preparing for cloning

As seen during installation, eth0 obtained its IP address automatically. It now needs to be changed to the planned static address, 192.168.200.101.

Edit the interface configuration file:

    vi /etc/sysconfig/network-scripts/ifcfg-eth0
    # Change the following settings
    BOOTPROTO="static"
    IPADDR=192.168.200.101
    NETMASK=255.255.255.0
    GATEWAY=192.168.200.1
    DNS1=192.168.200.1
    ONBOOT="yes"

Restart networking, then check connectivity to both the internal network and the internet:

    [root@ceph-node1 ~]$ systemctl restart network    # restart networking
    [root@ceph-node1 ~]$ ip addr list eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 52:54:00:32:af:61 brd ff:ff:ff:ff:ff:ff
        inet 192.168.200.101/24 brd 192.168.200.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::5054:ff:fe32:af61/64 scope link
           valid_lft forever preferred_lft forever
    [root@ceph-node1 ~]$ nmcli
    eth0: connected to eth0
            "Red Hat Virtio"
            ethernet (virtio_net), 52:54:00:32:AF:61, hw, mtu 1500
            ip4 default
            inet4 192.168.200.101/24
            route4 192.168.200.0/24
            route4 0.0.0.0/0
            inet6 fe80::916d:cd1f:bb97:ca22/64
            route6 fe80::/64
            route6 ff00::/8
    lo: unmanaged
            "lo"
            loopback (unknown), 00:00:00:00:00:00, sw, mtu 65536
    DNS configuration:
            servers: 192.168.200.1
            interface: eth0
    [root@ceph-node1 ~]$ ping 192.168.200.1    # ping the gateway
    [root@ceph-node1 ~]$ ping baidu.com        # ping an external host

For simplicity, turn off firewalld and SELinux:

    # Stop firewalld and disable it at boot
    [root@ceph-node1 ~]$ systemctl stop firewalld
    [root@ceph-node1 ~]$ systemctl disable firewalld
    # Turn SELinux off temporarily
    [root@ceph-node1 ~]$ getenforce
    Enforcing
    [root@ceph-node1 ~]$ setenforce 0
    [root@ceph-node1 ~]$ getenforce
    Permissive
    # Turn SELinux off permanently; takes effect after a reboot
    [root@ceph-node1 ~]$ sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
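
The sed substitution for the permanent SELinux change can be tried on a scratch file before touching the real configuration. This is only a sketch: the helper name `selinux_disable_in` and the three sample lines are made up for illustration, and the pattern is anchored with `^` so that only the setting itself is rewritten, not a comment that happens to mention `SELINUX=`.

```shell
# selinux_disable_in FILE: set SELINUX=disabled in FILE, leaving other lines alone.
# (hypothetical helper name, used only for this illustration)
selinux_disable_in() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Try it on a scratch file rather than the real /etc/selinux/config.
scratch=$(mktemp)
printf '%s\n' '# SELINUX= can take one of these three values:' \
              'SELINUX=enforcing' \
              'SELINUXTYPE=targeted' > "$scratch"
selinux_disable_in "$scratch"
cat "$scratch"
rm -f "$scratch"
```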

Switch the yum repositories to the Aliyun mirrors and add the Ceph repository:

    [root@ceph-node1 ~]$ yum clean all
    [root@ceph-node1 ~]$ curl http://mirrors.aliyun.com/repo/Centos-7.repo >/etc/yum.repos.d/CentOS-Base.repo
    [root@ceph-node1 ~]$ curl http://mirrors.aliyun.com/repo/epel-7.repo >/etc/yum.repos.d/epel.repo
    [root@ceph-node1 ~]$ sed -i '/aliyuncs/d' /etc/yum.repos.d/CentOS-Base.repo
    [root@ceph-node1 ~]$ sed -i '/aliyuncs/d' /etc/yum.repos.d/epel.repo
    [root@ceph-node1 ~]$ vim /etc/yum.repos.d/ceph.repo
    # Add the following content
    [ceph]
    name=ceph
    baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/x86_64/
    gpgcheck=0
    [ceph-noarch]
    name=cephnoarch
    baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch/
    gpgcheck=0
    [root@ceph-node1 ~]$ yum makecache
    [root@ceph-node1 ~]$ yum repolist
    Loaded plugins: fastestmirror, priorities
    Loading mirror speeds from cached hostfile
    repo id           repo name                                       status
    base/7/x86_64     CentOS-7 - Base - mirrors.aliyun.com            10,097
    ceph              ceph                                               499
    ceph-noarch       cephnoarch                                          16
    epel/x86_64       Extra Packages for Enterprise Linux 7 - x86_64  13,196
    extras/7/x86_64   CentOS-7 - Extras - mirrors.aliyun.com             323
    updates/7/x86_64  CentOS-7 - Updates - mirrors.aliyun.com          1,478
    repolist: 25,609
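
If you would rather not open an editor, the ceph.repo file can be written with a here-document. A minimal sketch, assuming the same two repository sections as above; the helper name `write_ceph_repo` is hypothetical, and it takes the target path as an argument so it can be tried on a scratch file first.

```shell
# write_ceph_repo PATH: write the two jewel repository sections to PATH.
# (hypothetical helper; the repository URLs are the ones used in this guide)
write_ceph_repo() {
    cat > "$1" <<'EOF'
[ceph]
name=ceph
baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/x86_64/
gpgcheck=0

[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch/
gpgcheck=0
EOF
}

# Preview on a scratch file.
repo=$(mktemp)
write_ceph_repo "$repo"
cat "$repo"
rm -f "$repo"
```

On the virtual machine the call would be `write_ceph_repo /etc/yum.repos.d/ceph.repo`, followed by `yum makecache`.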

Install the Ceph client, ntpdate, and a few other packages:

    # Install the Ceph client
    [root@ceph-node1 ~]$ yum install ceph ceph-radosgw
    [root@ceph-node1 ~]$ ceph --version
    ceph version 10.2.11 (e4b061b47f07f583c92a050d9e84b1813a35671e)
    # Required: ntpdate is used to synchronize the clock
    [root@ceph-node1 ~]$ yum install ntp ntpdate ntp-doc
    # Optional: handy for watching performance data
    [root@ceph-node1 ~]$ yum install wget vim htop dstat iftop tmux

Run ntpdate at boot so that the clock is synchronized automatically:

    [root@ceph-node1 ~]$ ntpdate ntp.sjtu.edu.cn
     2 Mar 09:49:39 ntpdate[1915]: adjust time server 84.16.73.33 offset 0.051449 sec
    [root@ceph-node1 ~]$ echo ntpdate ntp.sjtu.edu.cn >> /etc/rc.d/rc.local
    [root@ceph-node1 ~]$ chmod +x /etc/rc.d/rc.local
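
Running the `echo ... >> /etc/rc.d/rc.local` line more than once leaves duplicate entries behind. One way to make the step repeatable is to append only when the line is not already present. A small sketch; `append_once` is a hypothetical helper name:

```shell
# append_once FILE LINE: add LINE to FILE unless an identical line already exists.
# (hypothetical helper for illustration)
append_once() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Demonstrate on a scratch file: the second call is a no-op.
rc=$(mktemp)
append_once "$rc" 'ntpdate ntp.sjtu.edu.cn'
append_once "$rc" 'ntpdate ntp.sjtu.edu.cn'
cat "$rc"
rm -f "$rc"
```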

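Add the hostnames of all nodes, generate an SSH key, and set up passwordless login:

    [root@ceph-node1 ~]$ echo ceph-node1 >/etc/hostname
    [root@ceph-node1 ~]$ vim /etc/hosts
    # Add the following hostnames
    192.168.200.101 ceph-node1
    192.168.200.102 ceph-node2
    192.168.200.103 ceph-node3
    # Press Enter three times to generate the key
    [root@ceph-node1 ~]$ ssh-keygen
    # Enable passwordless login to this machine.
    # The clones will share the same key pair,
    # so running ssh-copy-id once here is enough
    # for all three virtual machines to log in to one another without a password
    [root@ceph-node1 ~]$ ssh-copy-id root@ceph-node1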
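
The three /etc/hosts entries follow a simple pattern (node N maps to 192.168.200.10N), so they can be generated with a loop instead of typed by hand. A minimal sketch; `hosts_entries` is a hypothetical helper name:

```shell
# hosts_entries: print the /etc/hosts lines for the three planned nodes.
# (hypothetical helper for illustration)
hosts_entries() {
    for i in 1 2 3; do
        echo "192.168.200.10$i ceph-node$i"
    done
}

hosts_entries
# To apply on a machine:  hosts_entries >> /etc/hosts
# Non-interactive key generation, assuming the default RSA key path:
#   ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa
```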

On the host, add the same three hostnames, then use ssh-copy-id to set up passwordless login to the virtual machine:

    [root@ceph-host ~]$ vim /etc/hosts
    # Add the following hostnames
    192.168.200.101 ceph-node1
    192.168.200.102 ceph-node2
    192.168.200.103 ceph-node3
    [root@ceph-host ~]$ ssh-copy-id root@ceph-node1

The virtual machine is now ready. Shut it down with shutdown -h now, and remember to remove the CDROM in virt-manager and make sure VirtIO Disk 1 is the boot device.

1.5 Cloning the virtual machine

Use virt-clone to create the other two machines, ceph-node2 and ceph-node3, from ceph-node1. It generates a new MAC address for the network interface and copies the images to the given paths:

    > virsh domblklist ceph-node1
    Target   Source
    -------------------------------------------------
    vda      /mnt/ceph/ceph-node1/ceph-node1.qcow2
    vdb      /mnt/ceph/ceph-node1/disk-1.qcow2
    vdc      /mnt/ceph/ceph-node1/disk-2.qcow2
    > virt-clone --original ceph-node1 --name ceph-node2 \
    --file /mnt/ceph/ceph-node2/ceph-node2.qcow2 \
    --file /mnt/ceph/ceph-node2/disk-1.qcow2 \
    --file /mnt/ceph/ceph-node2/disk-2.qcow2
    > virt-clone --original ceph-node1 --name ceph-node3 \
    --file /mnt/ceph/ceph-node3/ceph-node3.qcow2 \
    --file /mnt/ceph/ceph-node3/disk-1.qcow2 \
    --file /mnt/ceph/ceph-node3/disk-2.qcow2
    > tree -h /mnt/ceph/
    /mnt/ceph
    ├── [ 4.0K] ceph-node1
    │   ├── [ 1.8G] ceph-node1.qcow2
    │   ├── [ 224K] disk-1.qcow2
    │   └── [ 224K] disk-2.qcow2
    ├── [ 4.0K] ceph-node2
    │   ├── [ 1.8G] ceph-node2.qcow2
    │   ├── [ 224K] disk-1.qcow2
    │   └── [ 224K] disk-2.qcow2
    └── [ 4.0K] ceph-node3
        ├── [ 1.8G] ceph-node3.qcow2
        ├── [ 224K] disk-1.qcow2
        └── [ 224K] disk-2.qcow2
    3 directories, 9 files
    > virsh list --all
     Id   Name         State
    -----------------------------
     -    ceph-node1   shut off
     -    ceph-node2   shut off
     -    ceph-node3   shut off

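Log in to ceph-node2 and change its hostname and IP address. The clone still carries ceph-node1's static address, so use the virt-manager console, or boot it while ceph-node1 is powered off:

    # Change the hostname; takes effect at the next login
    [root@ceph-node2 ~]$ hostname ceph-node2
    [root@ceph-node2 ~]$ echo ceph-node2 > /etc/hostname
    # Log out and back in so that the new hostname takes effect
    [root@ceph-node2 ~]$ exit
    # Set the matching IP address
    [root@ceph-node2 ~]$ vim /etc/sysconfig/network-scripts/ifcfg-eth0
    IPADDR=192.168.200.102
    # Restart networking
    [root@ceph-node2 ~]$ systemctl restart network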
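
The only line that differs between the nodes' ifcfg-eth0 files is `IPADDR`, so the edit can be done with a single sed substitution instead of an editor. A minimal sketch, demonstrated on a scratch file; `set_ipaddr` is a hypothetical helper name:

```shell
# set_ipaddr FILE IP: rewrite the IPADDR= line of an ifcfg-style file in place.
# (hypothetical helper for illustration)
set_ipaddr() {
    sed -i "s/^IPADDR=.*/IPADDR=$2/" "$1"
}

# Demonstrate on a scratch file instead of the real ifcfg-eth0.
cfg=$(mktemp)
printf '%s\n' 'BOOTPROTO="static"' 'IPADDR=192.168.200.101' > "$cfg"
set_ipaddr "$cfg" 192.168.200.103
cat "$cfg"
rm -f "$cfg"
```

On a clone this would be `set_ipaddr /etc/sysconfig/network-scripts/ifcfg-eth0 192.168.200.103` followed by `systemctl restart network`. On CentOS 7, `hostnamectl set-hostname ceph-node3` is an alternative that updates both the running hostname and /etc/hostname in one step.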

Log in to ceph-node3 and do the same. All three virtual machines are now ready.
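
The two virt-clone invocations in this section differ only in the node name, so they can be generated from one template. The sketch below only prints the commands, so it is safe to run anywhere; `clone_cmd` is a hypothetical helper name. The `--file` arguments are listed in the same order as the disks reported by `virsh domblklist` (vda, vdb, vdc).

```shell
# clone_cmd NODE: print the virt-clone command that clones ceph-node1 into NODE.
# (hypothetical helper; it prints the command instead of running it)
clone_cmd() {
    dir=/mnt/ceph/$1
    echo "virt-clone --original ceph-node1 --name $1" \
         "--file $dir/$1.qcow2" \
         "--file $dir/disk-1.qcow2" \
         "--file $dir/disk-2.qcow2"
}

for node in ceph-node2 ceph-node3; do
    clone_cmd "$node"
done
```

To actually run a clone on the host, `eval "$(clone_cmd ceph-node2)"` executes the printed command.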

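2. Installing the Ceph cluster

The plan is to build a three-node Ceph cluster on three virtual machines:

| hostname | IP | Notes |
|---|---|---|
| ceph-node1 | 192.168.200.101 | deploy, 1 mon, 2 osd |
| ceph-node2 | 192.168.200.102 | 1 mon, 2 osd |
| ceph-node3 | 192.168.200.103 | 1 mon, 2 osd |

The ceph-deploy tool makes it easy to deploy a Ceph cluster from a single node (ceph-node1 in this example), so the following steps are run on ceph-node1: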

2.1 Installing ceph-deploy

Note: run the following commands on ceph-node1.

Install ceph-deploy:

    [root@ceph-node1 ~]$ yum info ceph-deploy
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
    Available Packages
    Name        : ceph-deploy
    Arch        : noarch
    Version     : 1.5.39
    Release     : 0
    Size        : 284 k
    Repo        : ceph-noarch
    Summary     : Admin and deploy tool for Ceph
    URL         : http://ceph.com/
    License     : MIT
    Description : An easy to use admin tool for deploy ceph storage clusters.
    [root@ceph-node1 ~]$ yum install ceph-deploy -y
    [root@ceph-node1 ~]$ ceph-deploy --version
    1.5.39

2.2 Deploying

Note: run the following commands on ceph-node1.

Create the deployment directory my-cluster:

    [root@ceph-node1 ~]$ mkdir my-cluster
    [root@ceph-node1 ~]$ cd my-cluster/
    # Generate the initial configuration file
    [root@ceph-node1 my-cluster]$ ceph-deploy new ceph-node1 ceph-node2 ceph-node3
    [root@ceph-node1 my-cluster]$ ls -hl
    total 12K
    -rw-r--r-- 1 root root  203 Mar  2 09:18 ceph.conf
    -rw-r--r-- 1 root root 3.0K Mar  2 09:18 ceph-deploy-ceph.log
    -rw------- 1 root root   73 Mar  2 09:18 ceph.mon.keyring
    [root@ceph-node1 my-cluster]$ cat ceph.conf
    [global]
    fsid = 86537cd8-270c-480d-b549-1f352de6c907
    mon_initial_members = ceph-node1, ceph-node2, ceph-node3
    mon_host = 192.168.200.101,192.168.200.102,192.168.200.103
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

Note: if you get stuck at any point and want to start the deployment over, run the following commands to remove the Ceph packages and wipe the data and configuration on every node:

    [root@ceph-node1 my-cluster]$ ceph-deploy purge ceph-node1 ceph-node2 ceph-node3
    [root@ceph-node1 my-cluster]$ ceph-deploy purgedata ceph-node1 ceph-node2 ceph-node3
    [root@ceph-node1 my-cluster]$ ceph-deploy forgetkeys
    [root@ceph-node1 my-cluster]$ rm ceph.*

Based on the IP plan above, add public_network to ceph.conf, and slightly widen the clock drift allowed between monitors (the default is 0.05s; it is raised to 2s here):

    [root@ceph-node1 my-cluster]$ echo public_network=192.168.200.0/24 >> ceph.conf
    [root@ceph-node1 my-cluster]$ echo mon_clock_drift_allowed = 2 >> ceph.conf
    [root@ceph-node1 my-cluster]$ cat ceph.conf
    [global]
    fsid = 86537cd8-270c-480d-b549-1f352de6c907
    mon_initial_members = ceph-node1, ceph-node2, ceph-node3
    mon_host = 192.168.200.101,192.168.200.102,192.168.200.103
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    public_network=192.168.200.0/24
    mon_clock_drift_allowed = 2

Deploy the monitors:

    [root@ceph-node1 my-cluster]$ ceph-deploy mon create-initial

Check the cluster status. health is HEALTH_ERR at this point because no OSDs have been deployed yet:

    [root@ceph-node1 my-cluster]$ ceph -s
        cluster 86537cd8-270c-480d-b549-1f352de6c907
         health HEALTH_ERR
                no osds
         monmap e2: 3 mons at {ceph-node1=192.168.200.101:6789/0,ceph-node2=192.168.200.102:6789/0,ceph-node3=192.168.200.103:6789/0}
                election epoch 6, quorum 0,1,2 ceph-node1,ceph-node2,ceph-node3
         osdmap e1: 0 osds: 0 up, 0 in
                flags sortbitwise,require_jewel_osds
          pgmap v2: 64 pgs, 1 pools, 0 bytes data, 0 objects
                0 kB used, 0 kB / 0 kB avail
                      64 creating

Deploy the OSDs:

    [root@ceph-node1 my-cluster]$ ceph-deploy --overwrite-conf osd prepare \
    ceph-node1:/dev/vdb ceph-node1:/dev/vdc \
    ceph-node2:/dev/vdb ceph-node2:/dev/vdc \
    ceph-node3:/dev/vdb ceph-node3:/dev/vdc --zap-disk
    [root@ceph-node1 my-cluster]$ ceph-deploy --overwrite-conf osd activate \
    ceph-node1:/dev/vdb1 ceph-node1:/dev/vdc1 \
    ceph-node2:/dev/vdb1 ceph-node2:/dev/vdc1 \
    ceph-node3:/dev/vdb1 ceph-node3:/dev/vdc1
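
The `host:device` arguments passed to `osd prepare` and `osd activate` are the cross product of the three nodes and the two data disks; `activate` simply points at the first partition of each disk. A small sketch that generates both lists (`osd_targets` is a hypothetical helper name):

```shell
# osd_targets SUFFIX: print host:/dev/diskSUFFIX for every node and data disk.
# (hypothetical helper for illustration)
osd_targets() {
    for node in ceph-node1 ceph-node2 ceph-node3; do
        for disk in vdb vdc; do
            printf '%s:/dev/%s%s ' "$node" "$disk" "$1"
        done
    done
    echo
}

osd_targets ""    # arguments for "osd prepare" (whole disks)
osd_targets 1     # arguments for "osd activate" (first partition)
```

With this in place, the prepare step could be written as `ceph-deploy --overwrite-conf osd prepare $(osd_targets "") --zap-disk`.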

Check the cluster status:

    [root@ceph-node1 my-cluster]$ ceph -s
        cluster 86537cd8-270c-480d-b549-1f352de6c907
         health HEALTH_OK
         monmap e2: 3 mons at {ceph-node1=192.168.200.101:6789/0,ceph-node2=192.168.200.102:6789/0,ceph-node3=192.168.200.103:6789/0}
                election epoch 6, quorum 0,1,2 ceph-node1,ceph-node2,ceph-node3
         osdmap e30: 6 osds: 6 up, 6 in
                flags sortbitwise,require_jewel_osds
          pgmap v72: 64 pgs, 1 pools, 0 bytes data, 0 objects
                646 MB used, 12251 GB / 12252 GB avail
                      64 active+clean

The cluster deployment is now complete.

2.3 Disabling cephx authentication

First, on ceph-node1, edit ceph.conf in the my-cluster directory:

    [root@ceph-node1 my-cluster]$ vim ceph.conf
    # Change every cephx to none
    auth_cluster_required = none
    auth_service_required = none
    auth_client_required = none
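
The same change can be made without an editor by rewriting the three `auth_*_required` lines with sed. A minimal sketch, shown on a scratch copy of the configuration; `disable_cephx` is a hypothetical helper name:

```shell
# disable_cephx FILE: switch the three auth_*_required options from cephx to none.
# (hypothetical helper for illustration)
disable_cephx() {
    sed -i 's/^\(auth_[a-z]*_required\) *= *cephx$/\1 = none/' "$1"
}

# Demonstrate on a scratch file instead of the real ceph.conf.
conf=$(mktemp)
printf '%s\n' '[global]' \
              'auth_cluster_required = cephx' \
              'auth_service_required = cephx' \
              'auth_client_required = cephx' \
              'public_network=192.168.200.0/24' > "$conf"
disable_cephx "$conf"
cat "$conf"
rm -f "$conf"
```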

Then push the configuration file to all three nodes with ceph-deploy:

    [root@ceph-node1 my-cluster]$ ceph-deploy --overwrite-conf config push ceph-node1 ceph-node2 ceph-node3

Finally, restart the mon and osd services on each of the three nodes:

    # ceph-node1
    [root@ceph-node1 ~]$ systemctl restart ceph-mon.target
    [root@ceph-node1 ~]$ systemctl restart ceph-osd.target
    # ceph-node2
    [root@ceph-node2 ~]$ systemctl restart ceph-mon.target
    [root@ceph-node2 ~]$ systemctl restart ceph-osd.target
    # ceph-node3
    [root@ceph-node3 ~]$ systemctl restart ceph-mon.target
    [root@ceph-node3 ~]$ systemctl restart ceph-osd.target
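
Because the three nodes share one SSH key pair and can log in to one another without a password, the restarts can also be driven from ceph-node1 in a loop. The sketch below only prints the ssh commands so they can be reviewed first; `restart_cmds` is a hypothetical helper name.

```shell
# restart_cmds: print one ssh command per node; on each node the mon target
# is restarted before the osd target.
# (hypothetical helper; it prints the commands instead of running them)
restart_cmds() {
    for node in ceph-node1 ceph-node2 ceph-node3; do
        echo "ssh root@$node 'systemctl restart ceph-mon.target && systemctl restart ceph-osd.target'"
    done
}

restart_cmds
```

Once the output looks right, `eval "$(restart_cmds)"` on ceph-node1 runs the three commands one after another.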

Shortly afterwards the cluster can be seen recovering:

    [root@ceph-node1 ~]$ ceph -s
        cluster 86537cd8-270c-480d-b549-1f352de6c907
         health HEALTH_OK
         monmap e2: 3 mons at {ceph-node1=192.168.200.101:6789/0,ceph-node2=192.168.200.102:6789/0,ceph-node3=192.168.200.103:6789/0}
                election epoch 12, quorum 0,1,2 ceph-node1,ceph-node2,ceph-node3
         osdmap e42: 6 osds: 6 up, 6 in
                flags sortbitwise,require_jewel_osds
          pgmap v98: 64 pgs, 1 pools, 0 bytes data, 0 objects
                647 MB used, 12251 GB / 12252 GB avail
                      64 active+clean
    [root@ceph-node1 ~]$ ceph osd tree
    ID WEIGHT   TYPE NAME           UP/DOWN REWEIGHT PRIMARY-AFFINITY
    -1 11.96457 root default
    -2  3.98819     host ceph-node1
     0  1.99409         osd.0            up  1.00000          1.00000
     5  1.99409         osd.5            up  1.00000          1.00000
    -3  3.98819     host ceph-node2
     1  1.99409         osd.1            up  1.00000          1.00000
     2  1.99409         osd.2            up  1.00000          1.00000
    -4  3.98819     host ceph-node3
     3  1.99409         osd.3            up  1.00000          1.00000
     4  1.99409         osd.4            up  1.00000          1.00000
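
Instead of re-running `ceph -s` by hand until the cluster settles, the `ceph health` command can be polled in a loop. A minimal sketch; `wait_for_health` is a hypothetical helper name, and the default retry count and delay are arbitrary values chosen for illustration.

```shell
# wait_for_health [TRIES] [DELAY]: poll "ceph health" until it reports HEALTH_OK.
# Returns 0 on success, 1 if the cluster is still not healthy after TRIES attempts.
# (hypothetical helper; the defaults of 30 tries and 2 seconds are arbitrary)
wait_for_health() {
    tries=${1:-30}
    delay=${2:-2}
    while [ "$tries" -gt 0 ]; do
        case $(ceph health 2>/dev/null) in
            HEALTH_OK*) return 0 ;;
        esac
        tries=$((tries - 1))
        sleep "$delay"
    done
    return 1
}

# Usage on ceph-node1:  wait_for_health 60 5 && ceph osd tree
```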

2.4 Connecting to the Ceph cluster from the host through libvirt

Back on the host, install the Ceph client:

    [root@ceph-host ~]$ yum install ceph ceph-radosgw

Then create /mnt/ceph/rbd-pool.xml:

    <pool type='rbd'>
      <name>rbd</name>
      <source>
        <host name='ceph-node1' port='6789'/>
        <name>rbd</name>
      </source>
    </pool>

Define the rbd storage pool and start it:

    [root@ceph-host ~]$ virsh pool-define /mnt/ceph/rbd-pool.xml
    Pool rbd defined from /mnt/ceph/rbd-pool.xml
    [root@ceph-host ~]$ virsh pool-start rbd
    Pool rbd started
    [root@ceph-host ~]$ virsh pool-info rbd
    Name:           rbd
    UUID:           0e3115e5-87c8-41c6-979b-3b8277deef78
    State:          running
    Persistent:     yes
    Autostart:      no
    Capacity:       11.96 TiB
    Allocation:     1.32 KiB
    Available:      11.96 TiB
    [root@ceph-host ~]$ virsh pool-dumpxml rbd
    <pool type='rbd'>
      <name>rbd</name>
      <uuid>0e3115e5-87c8-41c6-979b-3b8277deef78</uuid>
      <capacity unit='bytes'>13155494166528</capacity>
      <allocation unit='bytes'>1349</allocation>
      <available unit='bytes'>13154814738432</available>
      <source>
        <host name='ceph-node1' port='6789'/>
        <name>rbd</name>
      </source>
    </pool>

Try creating an rbd image with qemu-img:

    [root@ceph-host ~]$ qemu-img create -f rbd rbd:rbd/test-from-host 10G
    Formatting 'rbd:rbd/test-from-host', fmt=rbd size=10737418240
    [root@ceph-host ~]$ qemu-img info rbd:rbd/test-from-host
    image: json:{"driver": "raw", "file": {"pool": "rbd", "image": "test-from-host", "driver": "rbd"}}
    file format: raw
    virtual size: 10 GiB (10737418240 bytes)
    disk size: unavailable
    cluster_size: 4194304

Inspect the image with the rbd command and with virsh:

    [root@ceph-host ~]$ rbd ls
    test-from-host
    [root@ceph-host ~]$ rbd du
    NAME           PROVISIONED USED
    test-from-host      10 GiB  0 B
    [root@ceph-host ~]$ virsh vol-list rbd
     Name             Path
    --------------------------------------
     test-from-host   rbd/test-from-host
    [root@ceph-host ~]$ virsh vol-info rbd/test-from-host
    Name:           test-from-host
    Type:           network
    Capacity:       10.00 GiB
    Allocation:     10.00 GiB
    [root@ceph-host ~]$ virsh vol-dumpxml rbd/test-from-host
    <volume type='network'>
      <name>test-from-host</name>
      <key>rbd/test-from-host</key>
      <source>
      </source>
      <capacity unit='bytes'>10737418240</capacity>
      <allocation unit='bytes'>10737418240</allocation>
      <target>
        <path>rbd/test-from-host</path>
        <format type='raw'/>
      </target>
    </volume>

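To power off the host, shut down the three virtual machines first. After the host boots again, start the three virtual machines and the cluster returns to HEALTH_OK on its own.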