@hongchenzimo

2018-06-03T16:38:22.000000Z

字数 10718

阅读 1045

DeepLearning - Overview of Sequence model

博客园 DeepLearning.ai MLAlgo

I have had a hard time trying to understand recurrent model. Compared to Ng's deep learning course, I found Deep Learning book written by Ian, Yoshua and Aaron much easier to understand.

This post is structure in following order:

- Intuitive interpretation of RNN

- Basic RNN computation and different structure

- RNN limitation and variants

Why we need Recurrent Neural Network

The main reason behind Convolution neural network is using sparse connection and parameter sharing to reduce the scale of NN. Then why we need recurrent neural network?

If we use traditional neural network to deal with a series input , like speech, text, we will encounter 2 main challenges:

- The input length varies. Of course we can cut or zero-pad the training data to have the same length. But how can we deal with the test data with unknown length?

- For every time stamp, we will need a independent parameter. It makes it hard to capture the pattern in the sequence, compared to traditional time-series model like AR, ARIMA.

CNN can partly solve the second problem. By using a kernel with size K, the model can capture the pattern of k neighbors. Therefore, when the input length is fixed or limited, sometimes we do use CNN to deal with time-series data. However compared with sequence model, CNN has a very limited(shallow) parameter sharing. I will further talk about this below.

RNN Intuitive Interpretation

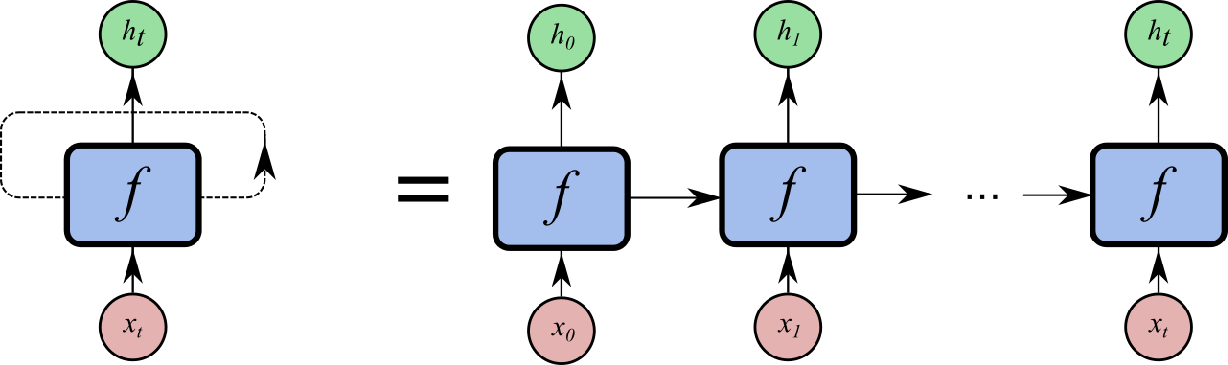

For a given sequence, RNN takes in input in the following way:

Regardless of how the output looks like, at each timestamp, the information is processed in the following way:

I consider the above recurrent structure as having a nerual network with unlimited number of hidden layer. Each hidden layer takes in information from input and previous hidden layer and they all share same parameter. The number of hidden layer is equal to your input length (time step).

There are 2 main advantages of above Recurrent structure:

- The input size is fixed regardless of the the sequence length. Therefore it can be applied to any sequence without limitation.

- Case1: if the input is a multi-dimensional timeseries like open/high/low/close price in stock market, a 4-dimensional timeseries, then the input size is 4.

- Case2: if we use 10-dimensional vector to represent each word in sentence, then no matter how long the sentence is, the input size is 10.

- Same paramter learnt at differnt timestamp. is not time-related, similar to the transformation matrix in Markov Chain.

And if we further unfold the above formula, we will get following:

Basically for hidden layer at time , it can include all the information from the very beginning. Compared to Recurrent structure, CNN can only incorporate limited information from nearest K timestamp, where k is the kernel size.

Basic RNN Computation

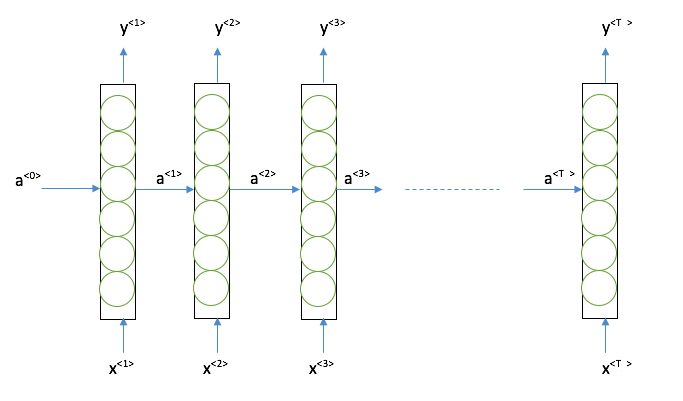

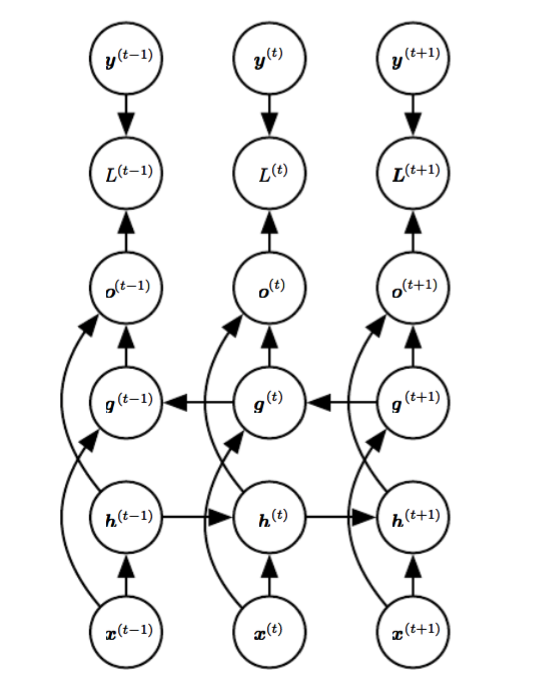

Now let's add more details to basic RNN, below is the most representative structure of Basic RNN:

For each hidden layer, it takes both the information from input and the previous hidden layer,then generate an output. Since all the other format of RNN can be derived from this, we will go through the computation of RNN in above structure.

1. Forward propogation

Here I will mainly use the notation from Andrew's Course with small modification.

denotes the hiden layer output at time t

denotes the input at time t

denotes the target output at time t

denotes model output at time t

denotes the output before activation function.

, , are the weight and bias matrix at hidden layer

, are the weight and bias matrix used at output.

We will use activation function at hidden and at output.

For a given sample , RNN will start at time 0, and start forward propagation till T. At each time stamp t, following computation is made:

We can further simplify the above formula by combining the weight matrix like below:

,

The negative log likelihood loss function of a sequence input is defined as following:

2. Backward propogation

In recurrent neural network, same parameter is shared at different time. Therefore we need the gradient of all nodes to calculate the gradient of parameter. And don't forget that the gradient of each node not only takes information from output, but also from next hidden neuron. That's why Back-Propagation-through-time can not run parallel, but have to follow descending order of time.

Start from T, given loss function, and softmax activation function, gradient of is following:

Therefroe we can get gradient of as below

For the last node, the gradient only consider , while for all the other nodes, the gradient need to consider both and , we will get following:

Now we have the gradient for each hidden neuron, we can further get the gradient for all the parameter, given that same parameters are shared at different time stamp. see below:

3. Different structures of RNN

So far the RNN we have talked about has 2 main features: input length = output length, and direct connection between hidden neuron. While actually RNN have many variants. We can further classify the difference as following:

- How recurrent works

- Different input and output length

As for how recurrent works, there are 2 basic types:

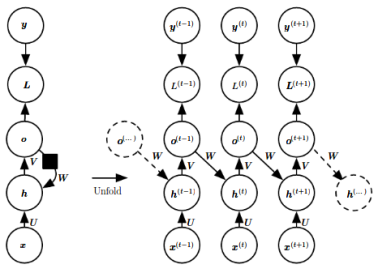

1. there is recurrent connection between hidden neuron, see below RNN1

2. there is recurrent connection between output and hidden neuron, see below RNN2

RNN1. Hidden to Hidden

RNN2. Ouput to Hidden

Teacher forcing Train & Test

It changes the original maximum log likelihood into conditional log likelihood.

However teacher forcing has a big problem that the input distribution of training and testing is different, since we don't have realized output in testing. Later we will talk about how to overcome such problem.

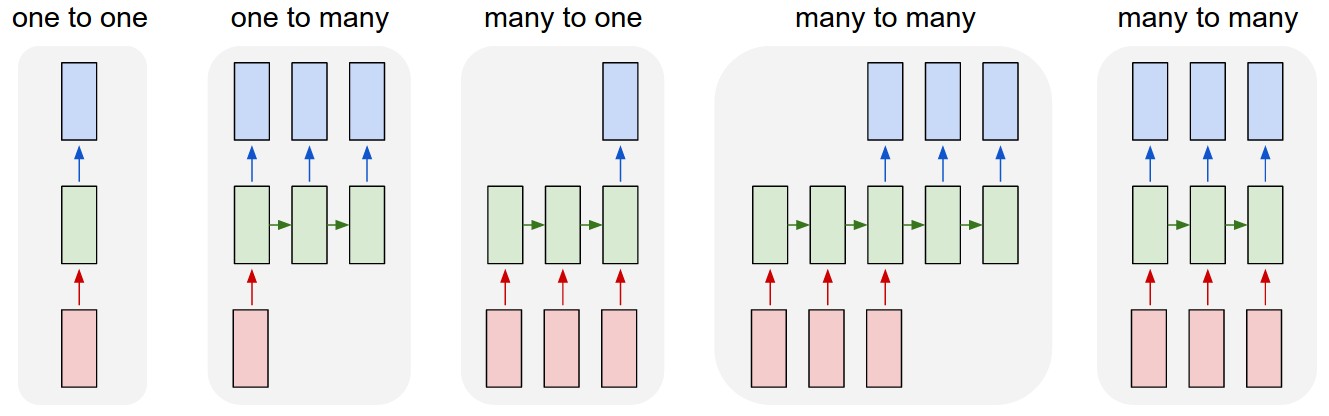

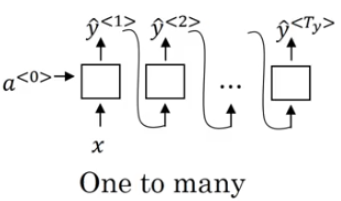

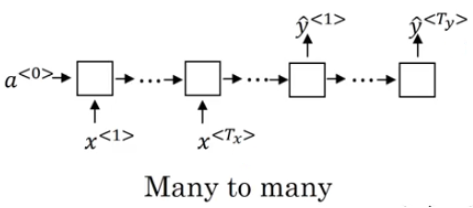

Next Let's talk about input and output length. In the below image, it gives all the possibilities:

many-to-many(seq2seq) with same length is the one we have been talking so far. Let' go through others.

many-to-one(seq2one) can be used in sentiment analysis. The only difference between the RNN we have been talking about and many-to-one is that each sequence input has only 1 ouput.

one-to-many(one2seq) can be used in music generation. It uses the second recurrent structure - feeding output to next hidden neuron.

many-to-many(seq2seq) with different length is wildly known as Encoder-Decoder System. It has many application in machine translation due to its flexibility. We will further talk about this in the application post.

Basic RNN limitation and variants

Now we see what basic RNN can do. Dose it has any limitation? And how do we get around with it?

1. Time order - Bidirection RNN

We have been emphasizing that RNN has to compute in order of time in propagation, meaning the is only based on the information prior from to . However there are situations that we need to consider both prior and post information, for example in translation we need to consider the entire context. Like following:

2. long-term dependence

We have mentioned the gradient vanishing/exploding problem before for deep neural network. Same idea also applies here. In Deep Learning book, author gives an intuitive explanation.

If we consider a simplified RNN without input and activation function. Then RNN becomes a time-series model with matrix implementation as following:

The problem lies in Eigenvalue , where may lead to gradient exploding, while may lead to gradient vanishing.

Gradient exploding can be solved by gradient clipping. Basically we cap the gradient for certain threshold. For gradient vanishing, one popular solution is gated RNN. Gated model has additional parameter to control at each timestamp whether to remember or forget the pass information, in order to maintain useful information for longer time period.

Gated RNN 1 - GRU Unit

Based on basic RNN, GRU add more computation within in each hidden neuron to control information flow. We use to denote hidden layer output. And within each hidden layer, we do following:

Where is the update Gate, and is the reset gate. They both measure the relevance between the previous hidden layer and the present input. Please note here and has same dimension as , and element-wise multiplication is applied.

If the relevance is low than update gate will choose to forget history information,and reset gate will also allow less history information into the current calculation. If and then we get basic RNN.

And sometimes only is used, leading to a simplified GRU with less parameter to train.

Gated RNN 2 - LSTM Unit

Another solution to long-term dependence is LSTM unit. It has 3 gates to train.

Where is the update Gate, and is the forget gate, and is the output gate.

Beside GRU and LSTM, there are also other variants. It is hard to say which is the best. If your data set is small, maybe you should try simplified RNN, instead of LSTM, which has less parameter to train.

So far we have reviewed most of the basic knowledge in Neural Network. Let's have some fun in the next post. The next post for this series will be some cool applications in CNN and RNN.

To be continued.