@skysbjdy

2015-06-07T06:20:02.000000Z

Summary of Pedestrian Detection Research

Histograms of Oriented Gradients[1]

One of the most popular and successful “person detectors” out there right now is the HOG with SVM approach.

The basic idea is that local object appearance and shape can often be characterized rather well by the distribution of local intensity gradients or edge directions, even without precise knowledge of the corresponding gradient or edge positions.

The six steps of this algorithm:

- Gamma/Color Normalization

- Gradient Computation

- Spatial/Orientation Binning

- Normalization and Descriptor Blocks

- Detector and Context

- Classifier

HOG is a type of “feature descriptor”. The intent of a feature descriptor is to generalize the object in such a way that the same object (in this case a person) produces as close as possible to the same feature descriptor when viewed under different conditions. This makes the classification task easier.

The creators of this approach trained a Support Vector Machine (“SVM”), a type of machine learning algorithm for classification, to recognize HOG descriptors of people.

The HOG person detector is fairly simple to understand (compared to SIFT object recognition, for example). One of the main reasons for this is that it uses a “global” feature to describe a person rather than a collection of “local” features. Put simply, this means that the entire person is represented by a single feature vector, as opposed to many feature vectors representing smaller parts of the person.

The HOG person detector uses a sliding detection window which is moved around the image. At each position of the detector window, a HOG descriptor is computed for the detection window. This descriptor is then shown to the trained SVM, which classifies it as either “person” or “not a person”.

To recognize persons at different scales, the image is subsampled to multiple sizes. Each of these subsampled images is searched.
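The sliding-window search over subsampled images can be sketched roughly as below. This is only an illustration: the 8-pixel stride, the 1.2 scale step, and the function names `pyramid_levels`, `window_positions`, and `candidate_windows` are assumptions made for the example, not values taken from the text above. The sketch only enumerates window boxes; computing the descriptor and running the SVM would happen for each box.

```python
def pyramid_levels(img_w, img_h, win_w=64, win_h=128, scale_step=1.2):
    """Yield (scale, width, height) for each subsampled image that still fits one window."""
    scale = 1.0
    while img_w / scale >= win_w and img_h / scale >= win_h:
        yield scale, int(img_w / scale), int(img_h / scale)
        scale *= scale_step  # assumed scale step between pyramid levels


def window_positions(img_w, img_h, win_w=64, win_h=128, stride=8):
    """Yield the top-left corner (x, y) of every window position in one image."""
    for y in range(0, img_h - win_h + 1, stride):
        for x in range(0, img_w - win_w + 1, stride):
            yield x, y


def candidate_windows(img_w, img_h, win_w=64, win_h=128, scale_step=1.2, stride=8):
    """Yield (x, y, w, h) boxes mapped back to original-image coordinates."""
    for scale, w, h in pyramid_levels(img_w, img_h, win_w, win_h, scale_step):
        for x, y in window_positions(w, h, win_w, win_h, stride):
            yield (round(x * scale), round(y * scale),
                   round(win_w * scale), round(win_h * scale))


# An image exactly the size of the window yields a single candidate box.
boxes = list(candidate_windows(64, 128))
```

Windows found in a smaller (subsampled) image map back to larger boxes in the original, which is how a fixed 64×128 window can pick up people of different sizes.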

Gradient Histograms

The HOG person detector uses a detection window that is 64 pixels wide by 128 pixels tall.

To compute the HOG descriptor, we operate on 8×8 pixel cells within the detection window. These cells will be organized into overlapping blocks, but we’ll come back to that.

Within a cell, we compute the gradient vector at each pixel. We take the 64 gradient vectors (in our 8×8 pixel cell) and put them into a 9-bin histogram. The histogram ranges from 0 to 180 degrees, so there are 20 degrees per bin.
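As a rough sketch of the per-pixel gradient step, the snippet below uses simple centered differences (the [-1, 0, 1] filter). The text above does not say which filter is used, so treat that choice, the border clamping, and the helper names `gradient_at` and `magnitude_and_angle` as assumptions for illustration.

```python
import math


def gradient_at(img, x, y):
    """Centered-difference gradient (gx, gy) at pixel (x, y); img is a list of rows."""
    h, w = len(img), len(img[0])
    # Assumption: clamp at the border so edge pixels reuse their own value.
    left = img[y][max(x - 1, 0)]
    right = img[y][min(x + 1, w - 1)]
    up = img[max(y - 1, 0)][x]
    down = img[min(y + 1, h - 1)][x]
    return right - left, down - up


def magnitude_and_angle(gx, gy):
    """Return the gradient magnitude and its unsigned angle in degrees (0 to 180)."""
    mag = math.hypot(gx, gy)
    # Folding with % 180 treats opposite directions as the same orientation.
    angle = math.degrees(math.atan2(gy, gx)) % 180.0
    return mag, angle


img = [
    [0, 0, 0],
    [0, 0, 10],
    [0, 10, 0],
]
gx, gy = gradient_at(img, 1, 1)
mag, angle = magnitude_and_angle(gx, gy)
```

Folding the angle into 0 to 180 degrees is what makes the histogram range described above work: a dark-to-light edge and a light-to-dark edge along the same line land in the same bin.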

For each gradient vector, its contribution to the histogram is given by the magnitude of the vector (so stronger gradients have a bigger impact on the histogram). We split the contribution between the two closest bins in proportion to how close the angle is to each bin center. So, for example, if a gradient vector has an angle of 85 degrees, it lies 15 degrees from the bin centered at 70 and 5 degrees from the bin centered at 90, so we add 1/4th of its magnitude to the bin centered at 70 degrees, and 3/4ths of its magnitude to the bin centered at 90.
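Here is a minimal sketch of that vote-splitting rule, using the 85-degree example from the paragraph above. The function names `vote` and `cell_histogram` are made up for illustration, and the wrap-around between the 170-degree and 10-degree bins is an assumption that follows from treating orientations as unsigned.

```python
import math


def vote(hist, magnitude, angle_deg, bin_width=20.0):
    """Split one gradient's magnitude between the two nearest bin centers."""
    n = len(hist)
    # Bin i is centered at (i + 0.5) * bin_width: 10, 30, ..., 170 degrees.
    pos = angle_deg / bin_width - 0.5
    lo = math.floor(pos)
    frac = pos - lo  # share of the magnitude that goes to the upper bin
    # Assumption: bins wrap around, so 170 and 10 degrees are neighbors.
    hist[lo % n] += magnitude * (1.0 - frac)
    hist[(lo + 1) % n] += magnitude * frac


def cell_histogram(gradients, bins=9):
    """Build one cell histogram from (magnitude, angle_deg) pairs."""
    hist = [0.0] * bins
    for magnitude, angle_deg in gradients:
        vote(hist, magnitude, angle_deg, 180.0 / bins)
    return hist


# A gradient of magnitude 4 at 85 degrees: 1/4 goes to the 70-degree bin
# (index 3) and 3/4 goes to the 90-degree bin (index 4).
hist = cell_histogram([(4.0, 85.0)])
```

In a real cell you would pass all 64 (magnitude, angle) pairs; a single gradient is used here so the split is easy to check by hand.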

I believe the intent of splitting the contribution is to minimize the problem of gradients that lie right on the boundary between two bins. Otherwise, if a strong gradient were right on the edge of a bin, a slight change in the gradient angle (one that nudges the gradient into the next bin) could have a strong impact on the histogram.

Why put the gradients into this histogram, rather than simply using the gradient values directly? The gradient histogram is a form of “quantization”, where in this case we are reducing 64 vectors with 2 components each down to a string of just 9 values (the magnitude of each bin). Compressing the feature descriptor may be important for the performance of the classifier, but I believe the main intent here is actually to generalize the contents of the 8×8 cell.

Imagine if you deformed the contents of the 8×8 cell slightly. You might still have roughly the same vectors, but they might be in slightly different positions within the cell and with slightly different angles. The histogram bins allow for some play in the angles of the gradients, and certainly in their positions.

Normalizing Gradient Vectors

The next step in computing the descriptors is to normalize the histograms. Let's take a moment to first look at the effect of normalizing gradient vectors in general.

You can add or subtract a fixed amount of brightness to every pixel in the image, and you will still get the same gradient vectors at every pixel, because a gradient is a difference between neighboring pixel values and the constant cancels out.

It turns out that by normalizing your gradient vectors, you can also make them invariant to multiplications of the pixel values. The effect of the multiplication is that bright pixels become much brighter while dark pixels only become a little brighter, thereby increasing the contrast between the light and dark parts of the image.

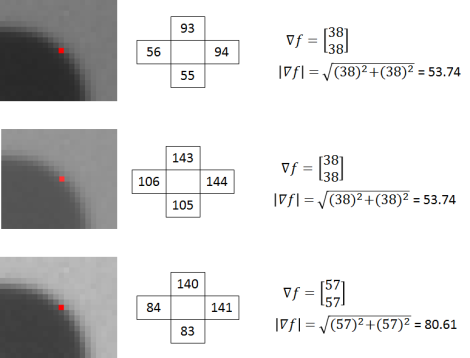

Let's look at the actual pixel values and how the gradient vector changes in these three images. The numbers in the boxes below represent the values of the pixels surrounding the pixel marked in red.

The gradient vectors are equivalent in the first and second images, but in the third, the gradient vector magnitude has increased by a factor of 1.5.

If you divide all three vectors by their respective magnitudes, you get the same result for all three vectors: [ 0.71 0.71]’.

So in the above example we see that by dividing the gradient vectors by their magnitude we can make them invariant (or at least more robust) to changes in contrast.

Dividing a vector by its magnitude is referred to as normalizing the vector to unit length, because the resulting vector has a magnitude of 1. Normalizing a vector does not affect its orientation, only the magnitude.
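To make this concrete, here is a small sketch with made-up neighbor values (the actual numbers from the figure are not reproduced here, so `left`, `right`, `top`, and `bottom` are hypothetical). It compares the gradient of an original patch, a brightened copy (+50), and a higher-contrast copy (×1.5).

```python
import math


def gradient(left, right, top, bottom):
    """Gradient at a pixel from its four neighbors, using simple differences."""
    return (right - left, bottom - top)


def normalize(v):
    """Scale a 2-D vector to unit length (a zero vector is returned unchanged)."""
    mag = math.hypot(*v)
    return tuple(c / mag for c in v) if mag > 0 else v


# Hypothetical neighbor values, chosen only for illustration.
original = dict(left=50, right=100, top=50, bottom=100)
brighter = {k: v + 50 for k, v in original.items()}   # add fixed brightness
contrast = {k: v * 1.5 for k, v in original.items()}  # multiply pixel values

g1 = gradient(**original)  # (50, 50)
g2 = gradient(**brighter)  # (50, 50): the added constant cancels
g3 = gradient(**contrast)  # (75.0, 75.0): magnitude grows by 1.5

unit_vectors = [normalize(g) for g in (g1, g2, g3)]
```

All three entries of `unit_vectors` round to (0.71, 0.71), matching the result described above.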

Histogram Normalization

Recall that the value in each of the nine bins in the histogram is based on the magnitudes of the gradients in the 8×8 pixel cell over which it was computed. If every pixel in a cell is multiplied by 1.5, for example, then we saw above that the magnitude of all of the gradients in the cell will be increased by a factor of 1.5 as well. In turn, this means that the value for each bin of the histogram will also be increased by a factor of 1.5. By normalizing the histogram, we can make it invariant to this type of illumination change.

Block Normalization

Rather than normalize each histogram individually, the cells are first grouped into blocks and normalized based on all histograms in the block.

The blocks used by Dalal and Triggs consisted of 2 cells by 2 cells. The blocks have “50% overlap”, meaning that each block is shifted by one cell relative to its neighbor, so adjacent blocks share two cells. This block normalization is performed by concatenating the histograms of the four cells within the block into a vector with 36 components (4 histograms x 9 bins per histogram). Divide this vector by its magnitude to normalize it.
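A minimal sketch of that block normalization, assuming the four cell histograms are already available as plain lists. The name `normalize_block` and the handling of an all-zero block are assumptions added for the example.

```python
import math


def normalize_block(cell_hists):
    """Concatenate a block's cell histograms and divide by the vector's magnitude."""
    vec = [v for hist in cell_hists for v in hist]
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0:
        return vec  # assumption: leave an all-zero block unchanged
    return [v / norm for v in vec]


# Four hypothetical 9-bin histograms for the 2x2 cells of one block.
cells = [[float(i + j) for j in range(9)] for i in range(4)]
block_vec = normalize_block(cells)

# The same block under a 1.5x contrast change gives the same normalized vector.
scaled = [[1.5 * v for v in hist] for hist in cells]
scaled_vec = normalize_block(scaled)
```

The two results agree up to floating-point rounding, which is the invariance to contrast changes discussed in the histogram normalization section.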

The effect of the block overlap is that each cell will appear multiple times in the final descriptor, but normalized by a different set of neighboring cells. (Specifically, the corner cells appear once, the other edge cells appear twice each, and the interior cells appear four times each).
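The appearance counts can be checked by sliding a 2×2 block over the 8×16 grid of cells in the detection window, one cell at a time. This is just a counting sketch; `cell_appearance_counts` is a name invented for the example.

```python
def cell_appearance_counts(cells_x=8, cells_y=16, block=2, stride=1):
    """Count how many overlapping blocks contain each cell of the window."""
    counts = [[0] * cells_x for _ in range(cells_y)]
    for by in range(0, cells_y - block + 1, stride):
        for bx in range(0, cells_x - block + 1, stride):
            # Every cell covered by this block appears once more in the descriptor.
            for dy in range(block):
                for dx in range(block):
                    counts[by + dy][bx + dx] += 1
    return counts


counts = cell_appearance_counts()
corner = counts[0][0]     # 1
edge = counts[0][3]       # 2
interior = counts[5][3]   # 4
```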

Final Descriptor Size

The 64 x 128 pixel detection window will be divided into 7 blocks across and 15 blocks vertically, for a total of 105 blocks. Each block contains 4 cells with a 9-bin histogram for each cell, for a total of 36 values per block. This brings the final vector size to 7 blocks across x 15 blocks vertically x 4 cells per block x 9 bins per histogram = 3,780 values.
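The same arithmetic written as a small function, in case you want to try other window or cell sizes. It assumes the block moves one cell at a time (the 50% overlap for 2×2 blocks); `hog_descriptor_length` is a hypothetical helper name.

```python
def hog_descriptor_length(win_w=64, win_h=128, cell=8, block=2, bins=9):
    """Number of values in the descriptor, assuming a block stride of one cell."""
    cells_x, cells_y = win_w // cell, win_h // cell   # 8 x 16 cells
    blocks_x = cells_x - block + 1                    # 7 blocks across
    blocks_y = cells_y - block + 1                    # 15 blocks vertically
    return blocks_x * blocks_y * block * block * bins


length = hog_descriptor_length()  # 7 * 15 * 4 * 9
```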

Datasets[2]

Multiple public pedestrian datasets have been collected over the years; INRIA, ETH, TUD-Brussels, Daimler, Caltech-USA, and KITTI are the most commonly used ones. They all have different characteristics, weaknesses, and strengths.

INRIA is amongst the oldest and as such has comparatively few images. It benefits however from high quality annotations of pedestrians in diverse settings (city, beach, mountains, etc.), which is why it is commonly selected for training. ETH and TUD-Brussels are mid-sized video datasets. Daimler is not considered by all methods because it lacks colour channels. Daimler stereo, ETH, and KITTI provide stereo information. All datasets but INRIA are obtained from video, and thus enable the use of optical flow as an additional cue.

Today, Caltech-USA and KITTI are the predominant benchmarks for pedestrian detection. Both are comparatively large and challenging. Caltech-USA stands out for the large number of methods that have been evaluated side-by-side. KITTI stands out because its test set is slightly more diverse, but is not yet used as frequently. INRIA, ETH (monocular), TUD-Brussels, Daimler (monocular), and Caltech-USA are available under a unified evaluation toolbox; KITTI uses its own separate one with unpublished test data. Both toolboxes maintain an online ranking where published methods can be compared side by side.

Source Code

Matlab

- Piotr's Computer Vision Matlab Toolbox (ACF, LDCF)

- Discriminatively trained deformable part models (Latent SVM)

- Robust Real-Time Face Detection (VJ)

- Part-based Feature Synthesis for Human Detection

- Fast multiple-part based object detection using KD-Ferns (PFS-SVM)

- Sketch Tokens: A Learned Mid-level Representation for Contour and Object Detection

- A Discriminative Deep Model for Pedestrian Detection with Occlusion Handling

- Joint Deep Learning for Pedestrian Detection

- Single-Pedestrian Detection aided by Multi-pedestrian Detection

C or CUDA