@HarryUp

2017-04-07T05:09:40.000000Z

Improving Interpretability of Deep Neural Networks with Semantic Information

Paper_Note Understand_DNN

Highlight

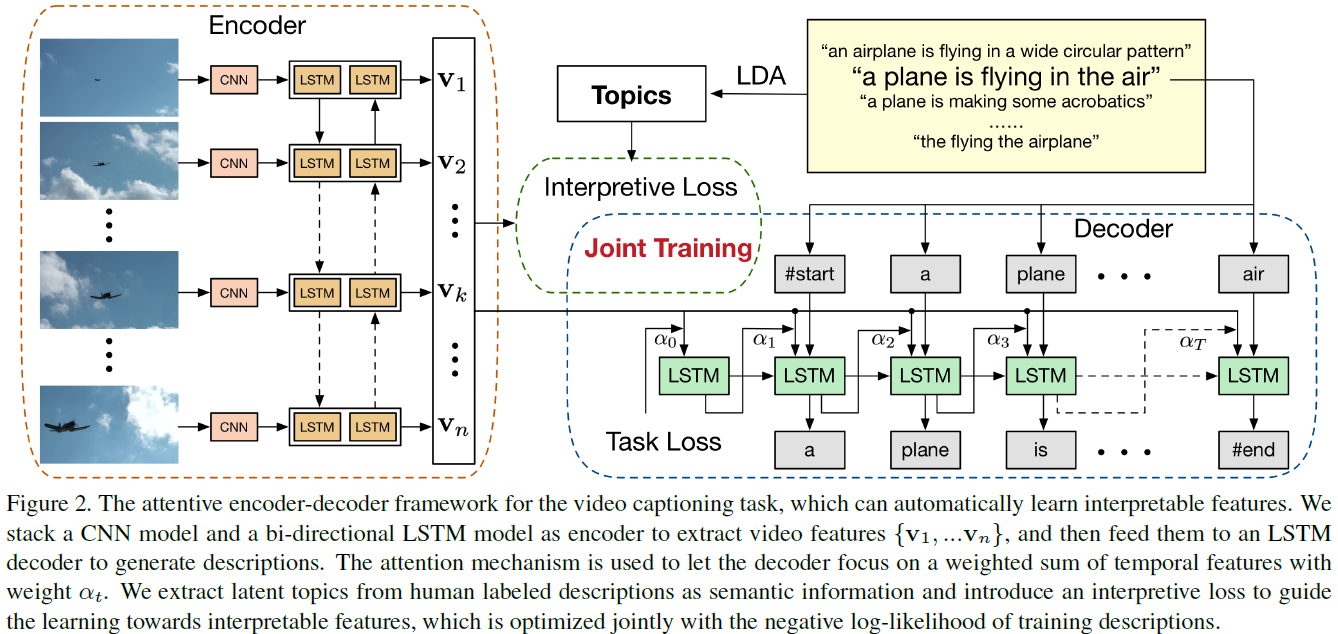

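- Adds an Interpretive Loss so that interpretability actively guides the model while it is being trained (how to!)

- Introduces Prediction Difference Maximization to find the features (here, neurons) that contribute most to the prediction on sequential data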
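The prediction-difference idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation. Each sample's hidden state is treated as one fixed activation vector, for example a sequence encoder's final state. A hypothetical linear-softmax layer (`W`, `b`) maps neurons to topics. A neuron is "removed" by setting it to zero, which is a simplification: prediction difference analysis usually marginalizes the feature out instead. Each neuron is then assigned to the topic whose probability drops the most on average when that neuron is removed.

```python
import math

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def topic_probs(activations, W, b):
    # Hypothetical readout: W[k][i] links neuron i to topic k, b[k] is a bias.
    logits = [sum(w * a for w, a in zip(row, activations)) + bk
              for row, bk in zip(W, b)]
    return softmax(logits)

def prediction_difference(activations, W, b, i):
    # p(topic | x) - p(topic | x without neuron i); "without" = zeroed (assumption).
    full = topic_probs(activations, W, b)
    ablated = list(activations)
    ablated[i] = 0.0
    reduced = topic_probs(ablated, W, b)
    return [f - r for f, r in zip(full, reduced)]

def assign_topics(samples, W, b):
    # For each neuron, average the prediction difference over all samples
    # and pick the topic with the largest average difference.
    n_neurons = len(samples[0])
    n_topics = len(W)
    assignment = []
    for i in range(n_neurons):
        avg = [0.0] * n_topics
        for x in samples:
            diff = prediction_difference(x, W, b, i)
            avg = [a + d / len(samples) for a, d in zip(avg, diff)]
        assignment.append(avg.index(max(avg)))
    return assignment
```

For example, with `W = [[2.0, 0.0], [0.0, 2.0]]`, neuron 0 drives topic 0 and neuron 1 drives topic 1. `assign_topics([[1.0, 1.0]], W, [0.0, 0.0])` then pairs each neuron with its own topic. Because the assignment is an argmax per neuron, several neurons can end up with the same topic.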

!!! High-level semantic transfer: the Interpretive Loss instills human knowledge into the network.

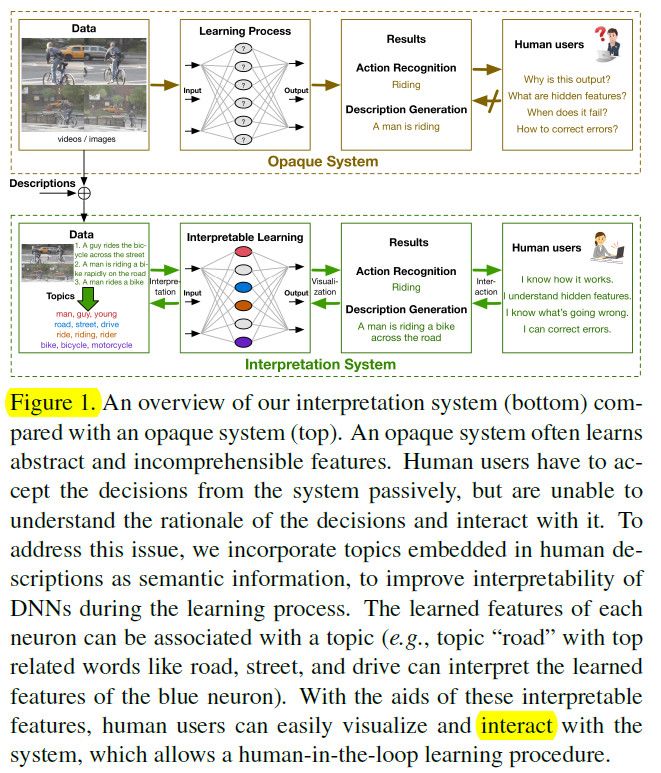

Overview

Interpretation Discover

Run LDA to obtain topics, then label each topic by hand based on the words it contains.
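Below is a minimal sketch of the step that comes just before manual labeling. It assumes an LDA model has already produced a topic-word probability table; the table and vocabulary here are made-up toy values. The code ranks each topic's words so a human can read the top few and choose a label.

```python
def top_words(topic_word, vocab, k=3):
    # topic_word[t][w]: probability of vocab[w] under topic t (assumed LDA output).
    result = []
    for row in topic_word:
        # Rank word indices by probability, highest first (ties keep vocab order).
        ranked = sorted(range(len(vocab)), key=lambda w: row[w], reverse=True)
        result.append([vocab[w] for w in ranked[:k]])
    return result
```

The human annotator would then map topic indices to names, for example a hypothetical `{0: "sports", 1: "cooking"}` for the toy lists `["ball", "goal"]` and `["cook", "pan"]`.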

Interpretation Guide

Use the Interpretive Loss to steer learning toward representations with clear, human-readable semantics.
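One way to picture this is sketched below. This is an assumed form, not necessarily the paper's exact definition. The interpretive term is taken to be a cross-entropy between the LDA topic distribution of the target (treated as a soft label) and a softmax over hypothetical per-topic neuron logits. It is added to the ordinary task loss with a weight `lam`, which is also hypothetical.

```python
import math

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def interpretive_loss(neuron_logits, topic_dist, eps=1e-12):
    # Cross-entropy H(topic_dist, softmax(neuron_logits)); eps guards log(0).
    pred = softmax(neuron_logits)
    return -sum(t * math.log(p + eps) for t, p in zip(topic_dist, pred))

def total_loss(task_loss, neuron_logits, topic_dist, lam=0.1):
    # Task loss plus the weighted interpretive term.
    return task_loss + lam * interpretive_loss(neuron_logits, topic_dist)
```

When the neurons already point toward the labeled topic, the interpretive term is small. When they point elsewhere, it grows, and in a real training setup its gradient would push the hidden features toward the topic semantics.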

Human in the Loop

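- Use Prediction Difference Maximization to find, for each neuron, the topic it contributes to most (is most relevant to), and identify the neuron with that topic (some feature overlap across neurons is unavoidable)

- Update each neuron's weight following an AdaBoost-like principle

- Propagate the error information back to the encoder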
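The AdaBoost-like reweighting step might look like the sketch below. This is an assumption about how the loop could work, not the paper's confirmed procedure. A human reviews each neuron's topic assignment and marks it correct or incorrect. The standard AdaBoost update then raises the weights of the misassigned neurons and lowers the rest. The names `weights` and `correct` are hypothetical.

```python
import math

def adaboost_style_update(weights, correct):
    # weights: current per-neuron weights; correct: human verdict per neuron.
    total = sum(weights)
    err = sum(w for w, ok in zip(weights, correct) if not ok) / total
    # Clamp the weighted error so log() and the division stay finite.
    err = min(max(err, 1e-10), 1 - 1e-10)
    alpha = 0.5 * math.log((1 - err) / err)
    # Misassigned neurons are scaled up by e^alpha, correct ones down by e^-alpha.
    new = [w * math.exp(alpha if not ok else -alpha)
           for w, ok in zip(weights, correct)]
    z = sum(new)
    return [w / z for w in new], alpha
```

As in standard AdaBoost, the misassigned neurons together hold half of the total weight after one update. With four equal weights and one wrong assignment, that neuron's weight rises from 0.25 to 0.5. In a full system, these weights could scale per-neuron interpretive loss terms, and the resulting gradients would carry the error signal back to the encoder.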