@awsekfozc

2015-12-17T17:57:53.000000Z

Flume

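1) Introduction

Flume is a log data collection tool. Its key component is the agent, and each agent consists of three parts:

1. source: the origin of the data to be collected. It actively pushes data into the channel.

2. channel: the data pipeline. It receives the data pushed by the source and holds it until the sink pulls it.

3. sink: the destination where the collected data is stored. It actively polls data from the channel.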
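To make the three parts concrete, here is a minimal sketch of an agent definition written to a scratch file. The netcat source, memory channel and logger sink are chosen only for illustration and are not used elsewhere in this post; the port number and the temporary file are likewise assumptions.

```shell
# Write a minimal agent definition to a scratch file (hypothetical location).
conf_file="$(mktemp)"
cat > "$conf_file" <<'EOF'
# one agent named a1 with one source, one channel and one sink
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# source: listens on a TCP port and pushes each received line into the channel
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# channel: in-memory buffer that sits between the source and the sink
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# sink: polls the channel and writes each event to the agent log
a1.sinks.k1.type = logger

# wiring: the source lists its channels, the sink names exactly one channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF

# Print the two wiring lines that connect the three parts.
grep -E 'r1\.channels|k1\.channel' "$conf_file"
```

Note the asymmetry in the last two properties: a source uses the plural `channels` because it can feed several channels, while a sink uses the singular `channel`.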

2) Installation

Download URL

http://archive.cloudera.com/cdh5/cdh/5/flume-ng-1.5.0-cdh5.3.5.tar.gz

Official documentation

http://archive.cloudera.com/cdh5/cdh/5/flume-ng-1.5.0-cdh5.3.6/FlumeUserGuide.html

Install the JDK

Extract the archive

$ tar -zxvf flume-ng-1.5.0-cdh5.3.6.tar.gz -C /opt/cdh/

Configure JAVA_HOME

File: flume-env.sh

export JAVA_HOME=/opt/moduels/jdk1.7.0_67
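The JAVA_HOME step can be scripted so that it is safe to run more than once. The following is only a sketch: the function name `configure_flume_env` is hypothetical, and it assumes the Flume directory has a `conf/` folder that may contain a `flume-env.sh.template` to copy from.

```shell
# Sketch: set JAVA_HOME in conf/flume-env.sh without duplicating the line.
# configure_flume_env is a hypothetical helper, not part of Flume.
configure_flume_env() {
  flume_home="$1"   # directory the tarball was extracted to
  java_home="$2"    # JDK install directory
  env_file="$flume_home/conf/flume-env.sh"

  mkdir -p "$flume_home/conf"

  # Start from the template when flume-env.sh does not exist yet.
  if [ ! -f "$env_file" ] && [ -f "$env_file.template" ]; then
    cp "$env_file.template" "$env_file"
  fi

  # Append the export only if no active JAVA_HOME line is present.
  if ! grep -q '^export JAVA_HOME=' "$env_file" 2>/dev/null; then
    echo "export JAVA_HOME=$java_home" >> "$env_file"
  fi
}
```

Call it with the extracted Flume directory and the JDK path from this post (`/opt/moduels/jdk1.7.0_67`). Afterwards, running `bin/flume-ng version` from the Flume directory is a quick check that the launcher can find Java.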

3) Simple examples

HDFS Sink, one directory per hour[1]

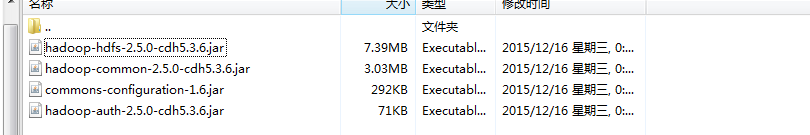

Copy the Hadoop jar files into flume_home/lib

Run

bin/flume-ng agent --conf conf --name a1 --conf-file conf/hdfs-sink.conf -Dflume.root.logger=INFO,console

Configuration

    # The configuration file needs to define the sources,
    # the channels and the sinks.

    #defined agent.
    a1.sources = r1
    a1.channels = c1
    a1.sinks = k1

    #defined sources.
    a1.sources.r1.type = exec
    a1.sources.r1.command = tail -f /opt/cdh/hive-0.13.1-cdh5.3.6/logs/hive.log
    a1.sources.r1.shell = /bin/bash -c

    #defined channels.
    a1.channels.c1.type = file
    a1.channels.c1.checkpointDir = /opt/datas/flume/checkpoint
    a1.channels.c1.dataDirs = /opt/datas/flume/data

    #defined sinks.
    a1.sinks.k1.type = hdfs
    a1.sinks.k1.hdfs.path = hdfs://hadoop.zc.com:8020/user/zc/flume/hive-log/%Y%m%d/%H
    a1.sinks.k1.hdfs.fileType = DataStream
    a1.sinks.k1.hdfs.filePrefix = zc
    a1.sinks.k1.hdfs.writeFormat = Text
    a1.sinks.k1.hdfs.batchSize = 1000
    a1.sinks.k1.hdfs.rollSize = 128000000
    a1.sinks.k1.hdfs.rollInterval = 3600
    a1.sinks.k1.hdfs.rollCount = 0
    a1.sinks.k1.hdfs.round = true
    a1.sinks.k1.hdfs.useLocalTimeStamp = true

    #defined sources channels sinks.
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
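In this configuration the `%Y%m%d/%H` escapes in `hdfs.path` are what produce one directory per hour, and because `useLocalTimeStamp = true` they are resolved from the agent's local clock rather than from a timestamp header on the event. The roll settings then control when a file inside that directory is closed: after 3600 seconds (`rollInterval`) or 128000000 bytes (`rollSize`), whichever comes first, while `rollCount = 0` turns off rolling by event count. As a rough preview, the same escapes have the same meaning in `date`, so the directory that events written right now would land in can be sketched like this:

```shell
# Preview the hourly directory for the current local time.
# base is copied from hdfs.path in the configuration above.
base="hdfs://hadoop.zc.com:8020/user/zc/flume/hive-log"

# %Y = 4-digit year, %m = month, %d = day of month, %H = hour (00-23)
bucket="$(date +%Y%m%d/%H)"

echo "$base/$bucket"
```

This only mirrors the escape expansion; it does not contact HDFS or Flume.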

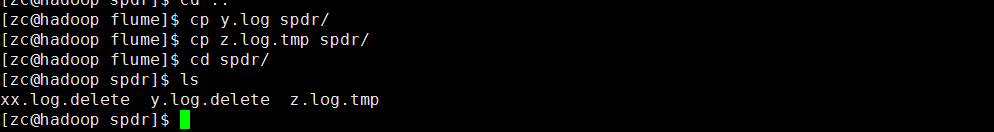

Spooling Directory Source[2]

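Often used together with a logging framework (such as log4j). Once the framework has finished writing a file, it marks the file as complete, for example by changing its extension. Flume detects the marked file and reads it; when reading is finished, Flume changes the marker itself.

Configuration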

    # The configuration file needs to define the sources,
    # the channels and the sinks.

    #defined agent.
    a1.sources = r1
    a1.channels = c1
    a1.sinks = k1

    #defined sources.
    a1.sources.r1.type = spooldir
    a1.sources.r1.spoolDir = /opt/datas/flume/spdr
    a1.sources.r1.fileSuffix = .delete
    a1.sources.r1.ignorePattern = ^(.)*\\.log.tmp$

    #defined channels.
    a1.channels.c1.type = file
    a1.channels.c1.checkpointDir = /opt/datas/flume/checkpoint
    a1.channels.c1.dataDirs = /opt/datas/flume/data

    #defined sinks.
    a1.sinks.k1.type = hdfs
    a1.sinks.k1.hdfs.path = hdfs://hadoop.zc.com:8020/user/zc/flume/hive-log
    a1.sinks.k1.hdfs.fileType = DataStream
    a1.sinks.k1.hdfs.filePrefix = zc
    a1.sinks.k1.hdfs.writeFormat = Text
    a1.sinks.k1.hdfs.batchSize = 1000
    a1.sinks.k1.hdfs.rollSize = 128000000
    a1.sinks.k1.hdfs.rollInterval = 3600
    a1.sinks.k1.hdfs.rollCount = 0
    a1.sinks.k1.hdfs.round = true
    a1.sinks.k1.hdfs.useLocalTimeStamp = true

    #defined sources channels sinks.
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
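The writer side of this handshake can be sketched in a few lines of shell. The file is written under a name ending in `.log.tmp`, which the `ignorePattern` above tells Flume to skip, and is renamed only when complete. The file name and the `SPOOL_DIR` variable are hypothetical; the sketch falls back to a temporary directory so it can be tried without touching `/opt/datas/flume/spdr`.

```shell
# Sketch of a producer that hands finished files to a spooling directory.
# SPOOL_DIR is a hypothetical variable; the post uses /opt/datas/flume/spdr.
spool_dir="${SPOOL_DIR:-$(mktemp -d)}"

# While the file is still being written it carries the ignored .tmp marker.
tmp_file="$spool_dir/app-$(date +%s).log.tmp"
final_file="${tmp_file%.tmp}"

printf 'first log line\nsecond log line\n' > "$tmp_file"

# Renaming within the same directory publishes the file in one step,
# so a reader never sees a half-written file under the final name.
mv "$tmp_file" "$final_file"

ls "$spool_dir"
```

After Flume has read the published file it appends the configured `fileSuffix`, so with the settings above the file would end in `.log.delete`. Files must not be modified after they have been renamed into place, since the spooling directory source expects them to be immutable.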
