@shaobaobaoer

2020-04-11T10:37:44.000000Z

A Brief Note on Chapter 4 of 《NN & DL》 (Neural Networks and Deep Learning)

ref EXPLAINING AND HARNESSING ADVERSARIAL EXAMPLES

http://neuralnetworksanddeeplearning.com/chap4.html

I ran into some difficulty while writing my diploma thesis. I have learned a lot about the methods for generating adversarial examples (A.Es), but I still find it hard to explain why they work.

I also noticed gaps in my background knowledge when reading the paper about FGSM. Its first author is the very prestigious researcher Goodfellow, the father of GAN.

A visual proof that neural nets can compute any function

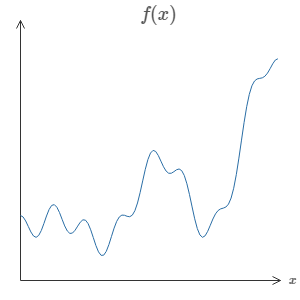

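One of the most striking facts about neural networks is that they can compute any function at all. That is, suppose someone hands you some complicated, wiggly function, f(x). No matter what the function, there is guaranteed to be a neural network so that for every possible input x, the value f(x) (or some close approximation) is output from the network.

The function may be much more complex, or may have multiple inputs and outputs, f = f(x_1, ..., x_m). We can collect the inputs and outputs into vectors and view the function as a mapping between higher-dimensional spaces; the result still holds.

To sum up, this result tells us that neural networks have a kind of universality.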

The universality theorem is well known by people who use neural networks. But why it's true is not so widely understood.

Two caveats

When you use the statement "a NN can compute any function", you should pay attention to two caveats.

Firstly, it does not mean that a NN can compute any function exactly; it means that a NN can approximate it as closely as we want. How does the NN achieve this? By increasing the number of hidden neurons.

Secondly, the functions that can be approximated this way are continuous functions. If a function is discontinuous, i.e. it makes sudden, sharp jumps (for example a step function), it won't in general be possible to approximate it with a NN. However, when we meet such functions in practice, a continuous approximation produced by a NN is often good enough.

One input, one output

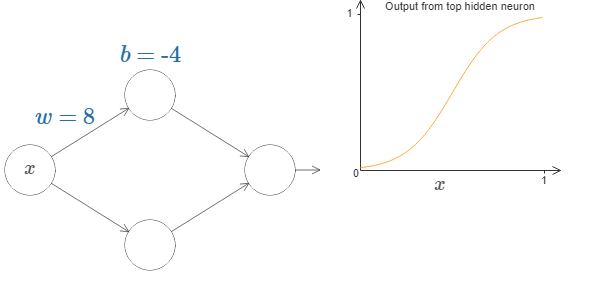

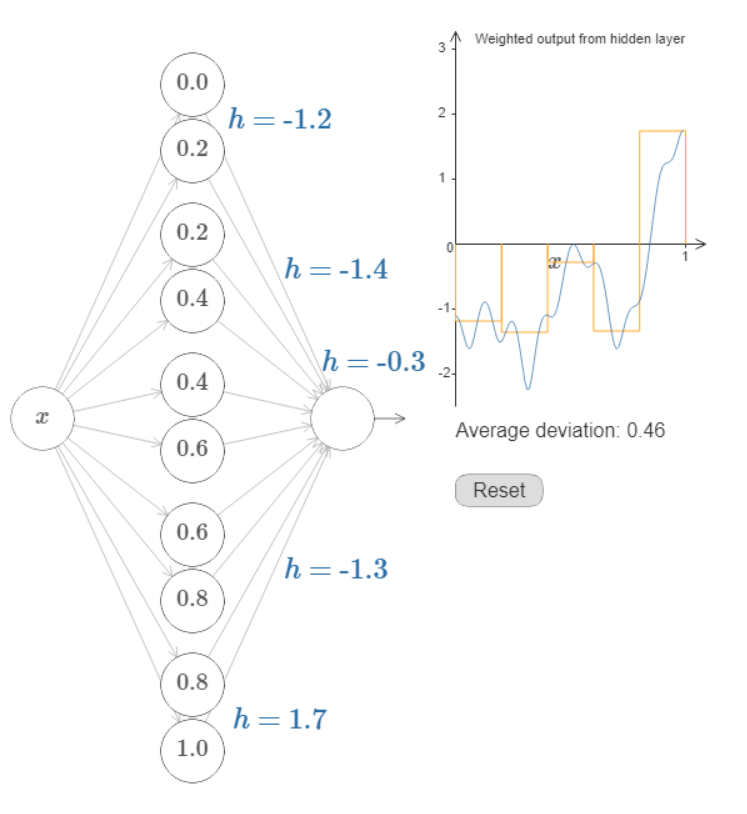

Here is a very simple NN: it has a single hidden layer, and its hidden neurons use the sigmoid function.

If we increase the weight, the curve becomes much steeper, while if we change the bias, the location of the inflection point changes.
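As a rough numerical check of this behaviour, the sketch below evaluates one sigmoid hidden neuron `sigmoid(w * x + b)` for a few weight and bias pairs and locates the steepest point on a grid. The helper names (`neuron_output`, `midpoint_and_max_slope`) and the sample values are my own choices for illustration, not something taken from the book.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written with tanh so that large |z| does not overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def neuron_output(x, w, b):
    # Output of one sigmoid hidden neuron for input x.
    return sigmoid(w * x + b)

def midpoint_and_max_slope(w, b, lo=-5.0, hi=5.0, n=200001):
    # Locate the inflection point numerically as the steepest point on a grid.
    x = np.linspace(lo, hi, n)
    slope = np.gradient(neuron_output(x, w, b), x)
    i = int(np.argmax(np.abs(slope)))
    return x[i], slope[i]

# Hypothetical (weight, bias) pairs: larger w -> steeper, changing b -> shifted.
for w, b in [(2.0, 0.0), (8.0, 0.0), (8.0, -8.0)]:
    x0, k = midpoint_and_max_slope(w, b)
    print(f"w={w:4.1f} b={b:5.1f} -> inflection near x={x0:.3f}, slope there={k:.3f}")
```

The slope at the inflection point should come out close to w/4 and the inflection point close to -b/w, which matches the two observations above.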

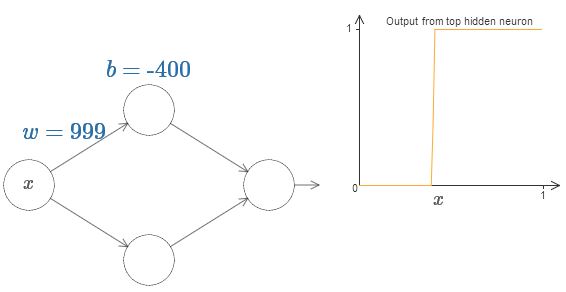

Let's see what happens when the weight equals 999 and the bias equals -400:

Amazingly, this curve looks very much like a discontinuous step function, yet it is still a continuous function.

Another detail of this function is the parameter s = -b/w. Mathematically, it tells us where the inflection point (the position of the step) is.
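To make the role of the step position concrete, here is a small sketch using the weight 999 and bias -400 from above. It assumes the step position is the point where `w * x + b = 0`; the helper names `step_position` and `bias_for_step` and the 0.01 / 0.99 thresholds are hypothetical choices of mine.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written with tanh so that large |z| does not overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def step_position(w, b):
    # Inflection point of sigmoid(w * x + b): the x where w * x + b = 0.
    return -b / w

def bias_for_step(s, w):
    # Inverse relation: the bias that places the step at x = s for a given weight.
    return -s * w

w, b = 999.0, -400.0
s = step_position(w, b)
x = np.linspace(0.0, 1.0, 100001)
y = sigmoid(w * x + b)
# Fraction of [0, 1] where the output is neither close to 0 nor close to 1.
transition = np.mean((y > 0.01) & (y < 0.99))
print(f"step at s = {s:.4f}, transition region covers {transition:.2%} of [0, 1]")
```

With a weight this large the transition region is only a small fraction of the interval, which is why the curve looks like a step even though it is continuous.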

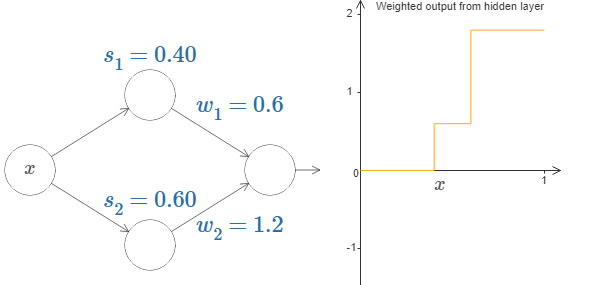

Here we can make the curve a bit more complex: what happens when we consider two groups of parameters, i.e. two hidden neurons?

We find that the curve gains another inflection point, because it now consists of two parts added together.

However, we aren't satisfied with this situation, because the curve is still monotonically increasing. If we want part of the curve to decrease, we can make one of the output weights negative.
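A minimal sketch of this idea: two step-like hidden neurons, combined with output weights +h and -h, give a "bump" that rises at one point and falls again at another. The function name `bump`, the steepness `w=1000.0` and the sample positions are assumptions for illustration; the sketch only computes the weighted sum of the hidden outputs and ignores any activation on the output neuron.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written with tanh so that large |z| does not overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def bump(x, s1, s2, h, w=1000.0):
    # Hidden neuron 1 steps up at s1, hidden neuron 2 steps up at s2.
    # w * (x - s) is the same as w * x + b with bias b = -w * s.
    up = sigmoid(w * (x - s1))
    down = sigmoid(w * (x - s2))
    # Output weights +h and -h: the second neuron cancels the first one after s2,
    # so the curve goes back down instead of staying monotonic.
    return h * up - h * down

x = np.array([0.2, 0.5, 0.8])
print(bump(x, s1=0.4, s2=0.6, h=0.8))
```

The output should be close to 0 before 0.4, close to 0.8 between 0.4 and 0.6, and close to 0 again after 0.6.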

So as we add more hidden neurons, the curve gets more inflection points and can be brought closer and closer to the given function.
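The sketch below illustrates this numerically under some assumptions of my own: the interval [0, 1] is split into equal pieces, each piece gets one bump (two hidden neurons) whose height is the target value at the midpoint, and the weights are set by hand rather than learned. The `target` function and the steepness rule `w = 200 * n_bumps` are hypothetical choices.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written with tanh so that large |z| does not overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def bump_network(f, n_bumps, w=None):
    # Split [0, 1] into n_bumps intervals; each interval uses two hidden neurons.
    edges = np.linspace(0.0, 1.0, n_bumps + 1)
    heights = f(0.5 * (edges[:-1] + edges[1:]))  # target value at interval midpoints
    if w is None:
        w = 200.0 * n_bumps  # steeper steps for narrower bumps (arbitrary choice)

    def network(x):
        x = np.asarray(x, dtype=float)[..., None]
        up = sigmoid(w * (x - edges[:-1]))
        down = sigmoid(w * (x - edges[1:]))
        # Weighted sum of hidden outputs: +height for "up", -height for "down".
        return np.sum(heights * (up - down), axis=-1)

    return network

def target(x):
    # Hypothetical wiggly target function, chosen only for this demo.
    return 0.2 + 0.4 * x**2 + 0.3 * x * np.sin(15.0 * x)

x = np.linspace(0.05, 0.95, 2001)
for n in (5, 20, 80):
    net = bump_network(target, n)
    err = np.mean(np.abs(net(x) - target(x)))
    print(f"{2 * n:4d} hidden neurons: mean abs error = {err:.4f}")
```

The mean error should shrink as the number of hidden neurons grows, which is the behaviour the universality argument relies on.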

Multi-input situation

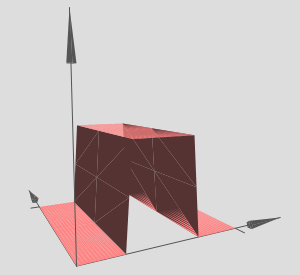

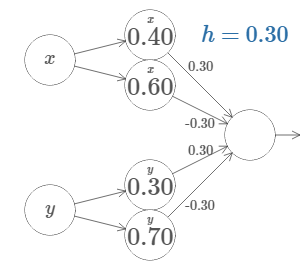

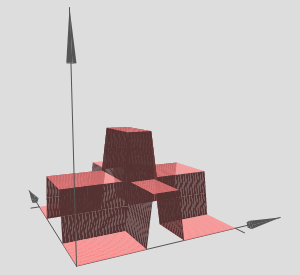

So if we have multiple inputs (e.g. 2), what happens to our graph?

If we could build such tower functions, then we could use them to approximate arbitrary functions, just by adding up many towers of different heights, and in different locations.
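One way to sketch a single tower for two inputs is shown below. It assumes a bump along x and a bump along y, followed by one more sigmoid neuron with weight h and bias -3h/2 that only fires where both bumps are active. The function name `tower` and the values `w=1000.0`, `h=20.0` are my own illustrative choices.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written with tanh so that large |z| does not overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def tower(x, y, x_range, y_range, w=1000.0, h=20.0):
    # First hidden layer: one bump along x and one bump along y, each of height ~1.
    bump_x = sigmoid(w * (x - x_range[0])) - sigmoid(w * (x - x_range[1]))
    bump_y = sigmoid(w * (y - y_range[0])) - sigmoid(w * (y - y_range[1]))
    # bump_x + bump_y is ~2 inside the rectangle, ~1 on the arms of the
    # "plus sign" around it, and ~0 everywhere else.
    # A further neuron with weight h and bias -3h/2 keeps only the ~2 region.
    return sigmoid(h * (bump_x + bump_y) - 1.5 * h)

inside = tower(0.5, 0.5, (0.4, 0.6), (0.3, 0.7))
on_arm = tower(0.5, 0.9, (0.4, 0.6), (0.3, 0.7))
outside = tower(0.1, 0.1, (0.4, 0.6), (0.3, 0.7))
print(inside, on_arm, outside)
```

The value should be close to 1 inside the rectangle and close to 0 elsewhere. Scaling several such towers by different heights and adding them gives a piecewise-constant approximation of a two-input function.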

Why do adversarial examples exist?

Szegedy:2013

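The highly nonlinear nature of neural networks leads to the existence of adversarial examples, together with overfitting caused by insufficient model averaging and insufficient regularization in purely supervised learning models.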

Explain: X

Goodfellow:2015

Linear behavior in high-dimensional spaces is the real cause of adversarial examples.

Explain: FGSM
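A minimal sketch of the linear argument behind FGSM, assuming a plain logistic-regression model with weights w and labels in {0, 1}. FGSM perturbs the input by eps * sign(grad_x J); for this model the gradient of the cross-entropy loss with respect to x is (sigmoid(w.x + b) - y) * w, so its sign is known in closed form. The function name `fgsm_linear`, the random weights with |w_i| = 1 and the value of `eps` are assumptions for illustration only.

```python
import numpy as np

def fgsm_linear(x, y, w, eps):
    # grad_x J = (sigmoid(w.x + b) - y) * w, so sign(grad_x J) = (1 - 2y) * sign(w):
    # sigmoid(.) - y is negative for y = 1 and positive for y = 0.
    # Using the closed form avoids a zero gradient when the sigmoid saturates.
    return x + eps * (1.0 - 2.0 * y) * np.sign(w)

rng = np.random.default_rng(0)
eps = 0.01  # every coordinate changes by at most eps
for n in (10, 1000, 100000):
    w = rng.choice([-1.0, 1.0], size=n)  # hypothetical weights with |w_i| = 1
    x = rng.normal(size=n)
    y = 1.0                              # assume the true label is 1
    x_adv = fgsm_linear(x, y, w, eps)
    shift = w @ x - w @ x_adv            # how far the logit w.x is pushed down
    print(f"n={n:6d}: max change per coordinate = {eps}, drop in w.x = {shift:.2f}")
```

Each coordinate moves by only eps, but the logit w.x moves by eps times the sum of |w_i|, which grows linearly with the input dimension n. This is the sense in which linearity in a high-dimensional space is enough to produce adversarial examples.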