@zhangsiming65965

2019-03-26T06:12:19.000000Z


Kubernetes Cluster Deployment

K8S

---Author:张思明 ZhangSiming

---Mail:1151004164@cnu.edu.cn

---QQ:1030728296

If you have a dream, go after it with everything you have;

because only hard work can change your fate.

1. The Three Officially Supported Deployment Methods

- minikube

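Minikube is a tool that quickly runs a single-node Kubernetes locally. It is meant only for users who want to try Kubernetes or for day-to-day development.

Documentation: https://kubernetes.io/docs/setup/minikube/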

- kubeadm

Kubeadm is also a tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

Documentation: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

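Drawbacks:

1. It is still in testing;

2. The certificates it issues between the master and the nodes are valid for one year; once they expire, the nodes can no longer connect;

3. It deploys all the internal components for you, so you learn little about how K8S actually works.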

- 二进制包

Recommended: download the official release binaries and deploy each component by hand to build the Kubernetes cluster.

Downloads: https://github.com/kubernetes/kubernetes/releases

2. Kubernetes Platform Environment Planning

2.1 Lab Environment

| Software | Version |
|---|---|
| Linux OS | CentOS 7.5 x64 |
| Kubernetes | 1.12 |
| Docker | 18.xx-ce (Community Edition) |
| Etcd | 3.3.11 |
| Flannel | 0.11.0 |

| Role | IP | Components |
|---|---|---|
| Master01 | 192.168.17.130 | kube-apiserver, kube-controller-manager, kube-scheduler, Etcd |
| Master02 | 192.168.17.131 | kube-apiserver, kube-controller-manager, kube-scheduler, Etcd |
| Node01 | 192.168.17.132 | kubelet, kube-proxy, Docker, flannel, Etcd |
| Node02 | 192.168.17.133 | kubelet, kube-proxy, Docker, flannel |
| Load Balancer (Master) | 192.168.17.134, 192.168.17.135 (VIP) | Nginx L4 |
| Load Balancer (Backup) | 192.168.17.136 | Nginx L4 |
| Registry | 192.168.17.137 | Harbor |

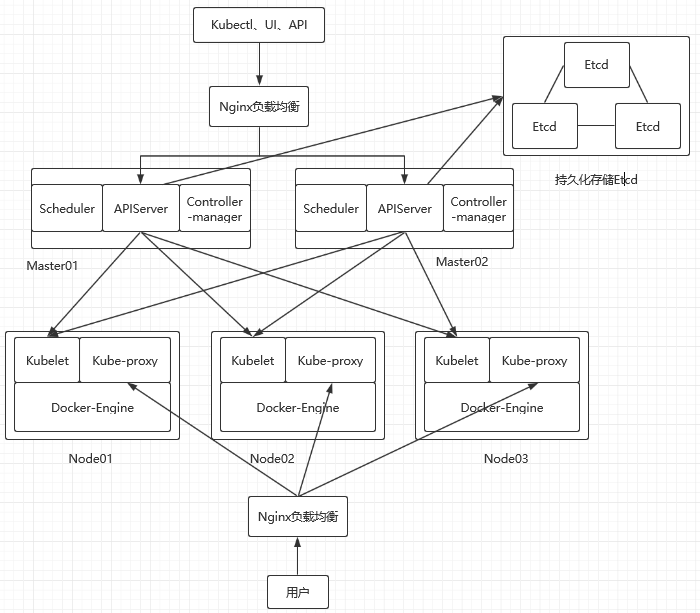

2.2实验架构图

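3. Self-Signed SSL Certificates

3.1 What Is an SSL Certificate

An SSL certificate is the digital certificate required to upgrade plaintext HTTP to encrypted HTTPS. It sets up an encrypted channel between the client (browser) and the server (web server) and encrypts the data they exchange, so that it cannot be leaked or tampered with in transit.

HTTP------>HTTPS

What is a self-signed SSL certificate?

Note: as the name says, a self-signed SSL certificate is one you issue yourself instead of one issued by a trusted certificate authority. Such certificates can be issued freely without any oversight, and no browser or operating system trusts them by default.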

3.2 Self-Signed Certificates Used by the K8S Cluster

| Component | Certificates used |
|---|---|
| etcd | ca.pem, server.pem, server-key.pem |
| flannel | ca.pem, server.pem, server-key.pem |
| kube-apiserver | ca.pem, server.pem, server-key.pem |
| kubelet | ca.pem, ca-key.pem |
| kube-proxy | ca.pem, kube-proxy.pem, kube-proxy-key.pem |
| kubectl | ca.pem, admin.pem, admin-key.pem |

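Note: ca is the certificate authority that issues (signs) all the other certificates.

3.3 Generating Self-Signed SSL Certificates for Etcd (for Better Security)

3.3.1 Getting the cfssl Certificate Tools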

    [root@ZhangSiming ~]# mkdir K8S
    [root@ZhangSiming ~]# cd K8S
    # Generate the required certificates on K8S Master01
    [root@ZhangSiming K8S]# ls
    cfssl.sh  Etcd-cert.sh
    # cfssl.sh downloads the certificate tools; Etcd-cert.sh uses cfssl to generate the certificates
    [root@ZhangSiming K8S]# cat cfssl.sh
    #!/bin/bash
    # designed by Zhangsiming
    curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
    # cfssljson: writes cfssl's JSON output out as certificate/key files
    curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
    # cfssl-certinfo: shows certificate information
    curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
    chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo
    # Run the script to fetch the tools
    [root@ZhangSiming K8S]# bash cfssl.sh
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 9.8M  100 9.8M    0     0  1215k      0  0:00:08  0:00:08 --:--:-- 1933k
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 2224k  100 2224k    0     0   635k      0  0:00:03  0:00:03 --:--:--  635k
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100 6440k  100 6440k    0     0   959k      0  0:00:06  0:00:06 --:--:-- 1379k
    [root@ZhangSiming K8S]# ls /usr/local/bin/ | grep cfssl
    cfssl
    cfssl-certinfo
    cfssljson
    # Tools downloaded successfully
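
Before moving on, it can help to confirm that the three tools are actually on PATH. A minimal sketch; `check_tools` is a hypothetical helper name, not something shipped with cfssl:

```shell
#!/bin/bash
# check_tools: hypothetical helper (not part of cfssl).
# Returns 0 if every named command is found on PATH; otherwise it
# reports each missing one on stderr and returns 1.
check_tools() {
    local missing=0 tool
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage on Master01:
#   check_tools cfssl cfssljson cfssl-certinfo && echo "cfssl tools ready"
```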

3.3.2 Generating the Etcd Certificates

    ### The certificate script
    [root@ZhangSiming K8S]# cat Etcd-cert.sh
    #!/bin/bash
    # Designed by Zhangsiming
    # JSON allows no comments, so the notes are kept outside the heredocs.
    # CA signing config: certificates expire after 87600h (10 years)
    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {"expiry": "87600h"},
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }
    EOF

    # CSR for the root CA (RSA key, 2048 bits)
    cat > ca-csr.json << EOF
    {
      "CN": "etcd CA",
      "key": {"algo": "rsa", "size": 2048},
      "names": [{"C": "CN", "L": "Beijing", "ST": "Beijing"}]
    }
    EOF

    # Generate the root CA certificate
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

    # "hosts" must contain the IPs of all three Etcd nodes
    cat > server-csr.json << EOF
    {
      "CN": "etcd",
      "hosts": ["192.168.17.130", "192.168.17.131", "192.168.17.132"],
      "key": {"algo": "rsa", "size": 2048},
      "names": [{"C": "CN", "L": "Beijing", "ST": "Beijing"}]
    }
    EOF

    # Use the root CA to issue the server certificate; this produces every certificate Etcd needs
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

    # Run the script
    [root@ZhangSiming K8S]# mkdir cert
    [root@ZhangSiming K8S]# ls
    cert  cfssl.sh  Etcd-cert.sh
    [root@ZhangSiming K8S]# cd cert/
    [root@ZhangSiming cert]# sh ~/K8S/Etcd-cert.sh
    2019/01/30 16:36:15 [INFO] generating a new CA key and certificate from CSR
    2019/01/30 16:36:15 [INFO] generate received request
    2019/01/30 16:36:15 [INFO] received CSR
    2019/01/30 16:36:15 [INFO] generating key: rsa-2048
    2019/01/30 16:36:16 [INFO] encoded CSR
    2019/01/30 16:36:16 [INFO] signed certificate with serial number 278108394651810943628140075243673420492920215858
    2019/01/30 16:36:16 [INFO] generate received request
    2019/01/30 16:36:16 [INFO] received CSR
    2019/01/30 16:36:16 [INFO] generating key: rsa-2048
    2019/01/30 16:36:16 [INFO] encoded CSR
    2019/01/30 16:36:16 [INFO] signed certificate with serial number 61614068451067277863698620865442956258180155064
    2019/01/30 16:36:16 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    [root@ZhangSiming cert]# ls
    ca-config.json  ca-csr.json  ca.pem      server-csr.json  server.pem
    ca.csr          ca-key.pem   server.csr  server-key.pem
    # The three certificates Etcd needs (ca.pem, server-key.pem, server.pem) have all been generated
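
The `hosts` list in server-csr.json must contain every Etcd node IP, and since JSON allows no comments, the list cannot be annotated inside the file. A small sketch that builds the array from arguments, so the IPs can live in one shell variable; `json_hosts` is a hypothetical helper, and it assumes the entries contain no quotes or backslashes:

```shell
#!/bin/bash
# json_hosts: hypothetical helper that prints its arguments as a
# compact JSON string array, e.g. ["a","b"].
# Assumes the values (IPs / host names) contain no quotes or backslashes.
json_hosts() {
    local out="[" sep="" h
    for h in "$@"; do
        out+="${sep}\"${h}\""
        sep=","
    done
    printf '%s]\n' "$out"
}

# Usage inside Etcd-cert.sh (the heredoc is unquoted, so $(...) expands):
#   ETCD_IPS="192.168.17.130 192.168.17.131 192.168.17.132"
#   ... "hosts": $(json_hosts $ETCD_IPS), ...
```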

4. Deploying the Etcd Database Cluster

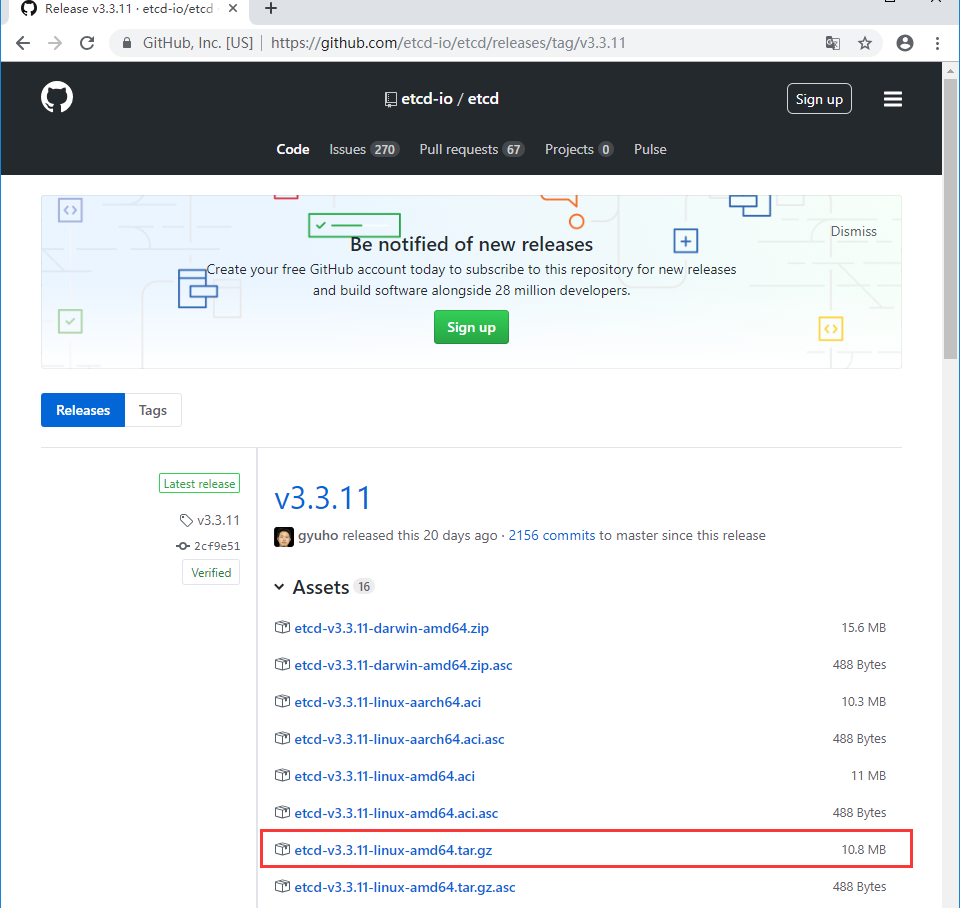

4.1 Downloading the Etcd Binary Package (from GitHub)

Etcd binary downloads:

https://github.com/etcd-io/etcd/releases

Note: use version 3.3.11; with 3.3.10 the systemd service fails when it starts.

Download link: https://github.com/etcd-io/etcd/releases/download/v3.3.11/etcd-v3.3.11-linux-amd64.tar.gz

    [root@ZhangSiming cert]# cat /etc/redhat-release
    CentOS Linux release 7.5.1804 (Core)
    [root@ZhangSiming cert]# uname -r
    3.10.0-862.el7.x86_64
    # Stop and disable the firewall, and check that SELinux is off
    [root@ZhangSiming cert]# systemctl stop firewalld
    [root@ZhangSiming cert]# systemctl disable firewalld
    [root@ZhangSiming cert]# getenforce
    Disabled
    # Download the Etcd package
    [root@ZhangSiming K8S]# ls
    cert  cfssl.sh  Etcd-cert.sh
    [root@ZhangSiming K8S]# which wget
    /usr/bin/wget
    [root@ZhangSiming K8S]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.11/etcd-v3.3.11-linux-amd64.tar.gz
    [root@ZhangSiming K8S]# ls
    cert  cfssl.sh  Etcd-cert.sh  etcd-v3.3.11-linux-amd64.tar.gz
    # Download succeeded
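
Section 4.3 only describes in words how etcd and etcdctl end up in /opt/etcd/bin. A minimal sketch of that step, assuming the tarball unpacks into a directory named after the archive (as the etcd-v3.3.11-linux-amd64 directory used later suggests); `install_etcd_bins` is a hypothetical helper:

```shell
#!/bin/bash
# install_etcd_bins TARBALL [WORK_DIR]  (hypothetical helper)
# Unpacks an etcd release tarball and copies etcd/etcdctl into
# WORK_DIR/bin, creating the WORK_DIR/{bin,cfg,ssl} layout of section 4.3.
install_etcd_bins() {
    local tarball=$1 work_dir=${2:-/opt/etcd}
    local name tmp
    name=$(basename "$tarball" .tar.gz)      # e.g. etcd-v3.3.11-linux-amd64
    tmp=$(mktemp -d) || return 1
    if ! tar -xzf "$tarball" -C "$tmp"; then
        rm -rf "$tmp"; return 1
    fi
    mkdir -p "$work_dir"/{bin,cfg,ssl}
    if ! cp "$tmp/$name/etcd" "$tmp/$name/etcdctl" "$work_dir/bin/"; then
        rm -rf "$tmp"; return 1
    fi
    chmod +x "$work_dir/bin/etcd" "$work_dir/bin/etcdctl"
    rm -rf "$tmp"
}

# Usage on Master01:
#   install_etcd_bins etcd-v3.3.11-linux-amd64.tar.gz /opt/etcd
#   cp ~/K8S/cert/* /opt/etcd/ssl/
```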

4.2 A Script That Generates the Etcd Config File and systemd Unit in One Step

    [root@ZhangSiming etcd-v3.3.10-linux-arm64]# cat ~/K8S/Deploy/etcd.sh
    #!/bin/bash
    # Designed by Zhangsiming
    # example: ./etcd.sh etcd01 192.168.17.130 etcd02=https://192.168.17.131:2380,etcd03=https://192.168.17.132:2380
    # Arguments: member name, member IP, the other cluster members; WORK_DIR is a global setting
    ETCD_NAME=$1
    ETCD_IP=$2
    ETCD_CLUSTER=$3
    WORK_DIR=/opt/etcd

    # Generate the etcd config file
    cat > $WORK_DIR/cfg/etcd << EOF
    #[Member]
    # Etcd member name
    ETCD_NAME="${ETCD_NAME}"
    # Data directory
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    # Peer (cluster-internal) traffic on port 2380
    ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"
    # Client traffic on port 2379
    ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

    #[Clustering]
    # Peer URL (IP:port) this member advertises
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"
    # Client URL this member advertises
    ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"
    # Every cluster member with its peer URL (this member first, then the others)
    ETCD_INITIAL_CLUSTER="${ETCD_NAME}=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"
    # Cluster token: any value, but it must be identical on every member
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    # "new" means a newly created cluster
    ETCD_INITIAL_CLUSTER_STATE="new"
    EOF

    # Generate the CentOS 7 systemd unit
    cat >/usr/lib/systemd/system/etcd.service <<EOF
    [Unit]
    Description=Etcd Server
    # Start only after the network is up
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    # notify: systemd waits until etcd reports it is ready, which happens only after it has joined the cluster
    Type=notify
    # The config file generated above
    EnvironmentFile=${WORK_DIR}/cfg/etcd
    # Start the etcd daemon with the self-signed certificates
    ExecStart=${WORK_DIR}/bin/etcd \
    --name=\${ETCD_NAME} \
    --data-dir=\${ETCD_DATA_DIR} \
    --listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \
    --listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
    --advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \
    --initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
    --initial-cluster=\${ETCD_INITIAL_CLUSTER} \
    --initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \
    --initial-cluster-state=new \
    --cert-file=${WORK_DIR}/ssl/server.pem \
    --key-file=${WORK_DIR}/ssl/server-key.pem \
    --peer-cert-file=${WORK_DIR}/ssl/server.pem \
    --peer-key-file=${WORK_DIR}/ssl/server-key.pem \
    --trusted-ca-file=${WORK_DIR}/ssl/ca.pem \
    --peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem
    # Restart on failure
    Restart=on-failure
    # Allow 65536 open file descriptors per process
    LimitNOFILE=65536

    [Install]
    # multi-user.target is the usual target for system services
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload   # load the newly written etcd unit
    systemctl enable etcd     # start etcd at boot
    systemctl restart etcd    # (re)start etcd now
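
etcd.sh expects its third argument to list the other members, while ETCD_INITIAL_CLUSTER must end up identical on all three nodes, with each node listed under its own name. A minimal sketch that derives both from one member table, so each node's invocation can be generated instead of typed; `MEMBERS`, `initial_cluster`, `other_members` and `print_etcd_commands` are hypothetical names, and the table mirrors the three Etcd nodes planned in 2.1:

```shell
#!/bin/bash
# Hypothetical member table: name=IP for every Etcd node (see section 2.1).
MEMBERS=(etcd01=192.168.17.130 etcd02=192.168.17.131 etcd03=192.168.17.132)

# initial_cluster: the full ETCD_INITIAL_CLUSTER value (same on every node).
initial_cluster() {
    local out="" sep="" m
    for m in "${MEMBERS[@]}"; do
        out+="${sep}${m%%=*}=https://${m#*=}:2380"
        sep=","
    done
    echo "$out"
}

# other_members NAME: the third argument etcd.sh expects on node NAME,
# i.e. every member except NAME itself.
other_members() {
    local self=$1 out="" sep="" m
    for m in "${MEMBERS[@]}"; do
        [ "${m%%=*}" = "$self" ] && continue
        out+="${sep}${m%%=*}=https://${m#*=}:2380"
        sep=","
    done
    echo "$out"
}

# print_etcd_commands: one etcd.sh command line per node.
print_etcd_commands() {
    local m
    for m in "${MEMBERS[@]}"; do
        echo "./etcd.sh ${m%%=*} ${m#*=} $(other_members "${m%%=*}")"
    done
}
```

The first line printed by `print_etcd_commands` is exactly the example given at the top of etcd.sh; the other two are the commands for 192.168.17.131 and 192.168.17.132.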

4.3 Configuring and Deploying the Etcd Node

    # Copy the files into /opt/etcd
    [root@ZhangSiming etcd-v3.3.10-linux-arm64]# tree /opt/etcd
    /opt/etcd
    ├── bin
    │   ├── etcd
    │   └── etcdctl        # etcd and etcdctl from the extracted binary package
    ├── cfg                # the etcd config file generated by the script goes here
    └── ssl                # the self-signed certificates are copied here
        ├── ca-config.json
        ├── ca.csr
        ├── ca-csr.json
        ├── ca-key.pem
        ├── ca.pem
        ├── server.csr
        ├── server-csr.json
        ├── server-key.pem
        └── server.pem

    3 directories, 12 files
    # Run the script
    [root@ZhangSiming K8S]# ~/K8S/Deploy/etcd.sh etcd01 192.168.17.130 etcd02=https://192.168.17.131:2380,etcd03=https://192.168.17.132:2380
    [root@ZhangSiming etcd-v3.3.11-linux-amd64]# systemctl start etcd
    Job for etcd.service failed because a timeout was exceeded. See "systemctl status etcd.service" and "journalctl -xe" for details.
    # This hangs for a long time and then fails, because the other two Etcd members of the cluster
    # are not configured yet. That is expected; we configure them next.

4.4 Configuring and Deploying the Etcd Cluster

    # Copy everything under /opt/etcd to the same location on the other Etcd nodes
    [root@ZhangSiming etcd-v3.3.11-linux-amd64]# scp -r /opt/etcd/ root@192.168.17.131:/opt
    The authenticity of host '192.168.17.131 (192.168.17.131)' can't be established.
    ECDSA key fingerprint is SHA256:hcae7bE6sNTeEGHgZaykEfqSiPQCoW2dJBwSZ8DqUTA.
    ECDSA key fingerprint is MD5:4e:30:92:c6:66:00:48:d5:69:1a:10:1f:16:ef:2e:de.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '192.168.17.131' (ECDSA) to the list of known hosts.
    root@192.168.17.131's password:
    etcd 100% 18MB 18.3MB/s 00:01
    etcdctl 100% 15MB 29.3MB/s 00:00
    etcd 100% 516 636.4KB/s 00:00
    ca-config.json 100% 269 335.0KB/s 00:00
    ca.csr 100% 956 1.5MB/s 00:00
    ca-csr.json 100% 194 339.2KB/s 00:00
    ca-key.pem 100% 1679 1.8MB/s 00:00
    ca.pem 100% 1265 2.0MB/s 00:00
    server.csr 100% 1013 72.9KB/s 00:00
    server-csr.json 100% 294 237.4KB/s 00:00
    server-key.pem 100% 1679 1.5MB/s 00:00
    server.pem 100% 1338 1.5MB/s 00:00
    # Copy the systemd unit to the other Etcd nodes
    [root@ZhangSiming etcd-v3.3.11-linux-amd64]# scp /usr/lib/systemd/system/etcd.service root@192.168.17.131:/usr/lib/systemd/system/etcd.service
    root@192.168.17.131's password:
    etcd.service 100% 923 27.3KB/s 00:00
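
Because /opt/etcd is copied verbatim, cfg/etcd on 192.168.17.131 still carries etcd01's name and 192.168.17.130 in its URLs. A minimal sed-based sketch for adapting the copy on a receiving node, assuming GNU sed (as on CentOS 7); `retarget_etcd_cfg` is a hypothetical helper, and it deliberately leaves ETCD_INITIAL_CLUSTER alone because that value is the same on every member:

```shell
#!/bin/bash
# retarget_etcd_cfg FILE NEW_NAME OLD_IP NEW_IP  (hypothetical helper)
# Rewrites ETCD_NAME and the four per-node URL variables in a copied
# etcd config file; ETCD_INITIAL_CLUSTER is intentionally left as-is.
retarget_etcd_cfg() {
    local file=$1 name=$2 old_ip=$3 new_ip=$4
    local old_re
    old_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')   # escape dots for the regex
    sed -i -E \
        -e "s/^ETCD_NAME=.*/ETCD_NAME=\"${name}\"/" \
        -e "/^ETCD_(LISTEN_PEER|LISTEN_CLIENT|INITIAL_ADVERTISE_PEER|ADVERTISE_CLIENT)_URLS=/s/${old_re}/${new_ip}/g" \
        "$file"
}

# Usage on 192.168.17.131 after the scp:
#   retarget_etcd_cfg /opt/etcd/cfg/etcd etcd02 192.168.17.130 192.168.17.131
```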

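Start the Etcd cluster. The copied /opt/etcd/cfg/etcd still contains etcd01's values, so before starting etcd on each of the other nodes, change ETCD_NAME and the IP in the four listen/advertise URL settings to that node's own name and IP (for example etcd02 and 192.168.17.131); ETCD_INITIAL_CLUSTER stays the same on every node.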

    # Start the etcd service on all three Etcd nodes
    [root@ZhangSiming etcd]# systemctl start etcd
    [root@ZhangSiming etcd]#
    [root@ZhangSiming ssl]# pwd
    /opt/etcd/ssl
    # Query port 2379 on all three Etcd nodes to check the cluster's health
    [root@ZhangSiming ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379" cluster-health
    member 3a6f8c78a708ea9 is healthy: got healthy result from https://192.168.17.132:2379
    member 5658b2b12e105fd0 is healthy: got healthy result from https://192.168.17.131:2379
    member 9d10fc7919c17197 is healthy: got healthy result from https://192.168.17.130:2379
    cluster is healthy
    # The cluster is healthy!
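
For scripted checks (for example after a reboot), the `cluster-health` output above can be reduced to a pass/fail result. A minimal sketch that reads that output on stdin; `etcd_all_healthy` is a hypothetical helper, and the expected member count is supplied by the caller:

```shell
#!/bin/bash
# etcd_all_healthy EXPECTED  (hypothetical helper)
# Reads `etcdctl ... cluster-health` output on stdin and succeeds only if
# the verdict line is "cluster is healthy" and exactly EXPECTED members
# reported "is healthy".
etcd_all_healthy() {
    local expected=$1 healthy=0 verdict="" line
    while IFS= read -r line || [ -n "$line" ]; do
        case $line in
            member*" is healthy:"*) healthy=$((healthy + 1)) ;;
            "cluster is healthy")   verdict=ok ;;
        esac
    done
    [ "$verdict" = ok ] && [ "$healthy" -eq "$expected" ]
}

# Usage (run from /opt/etcd/ssl):
#   /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \
#     --endpoints="https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379" \
#     cluster-health | etcd_all_healthy 3 && echo "all 3 members healthy"
```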

If something goes wrong while configuring the Etcd cluster, first check /opt/etcd/cfg/etcd and the systemd unit file for mistakes, then look through /var/log/messages.

5. Installing Docker on the Nodes

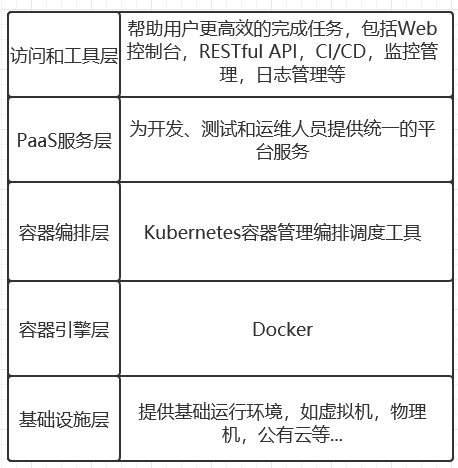

5.1K8S提供服务架构层次图

5.2K8SNode节点安装Docker

去docker官方网址:docs.docker.com,根据提示安装docker-ce

    # The following steps are identical on Node01 and Node02
    # Install Docker's dependencies
    [root@ZhangSiming etcd]# yum install -y yum-utils device-mapper-persistent-data lvm2
    # Add the docker-ce yum repo
    [root@ZhangSiming etcd]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    # Install container-selinux 2.9 or later (the local RPM used here is 2.10)
    [root@ZhangSiming etcd]# yum -y localinstall container-selinux-2.10-2.el7.noarch.rpm
    # Install docker-ce with yum
    [root@ZhangSiming etcd]# yum install docker-ce -y
    # Start Docker, enable it at boot and check the installed version
    [root@ZhangSiming etcd]# systemctl start docker
    [root@ZhangSiming etcd]# systemctl enable docker
    Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.
    [root@ZhangSiming etcd]# docker version
    Client:
     Version:           18.09.1
     API version:       1.39
     Go version:        go1.10.6
     Git commit:        4c52b90
     Built:             Wed Jan 9 19:35:01 2019
     OS/Arch:           linux/amd64
     Experimental:      false

    Server: Docker Engine - Community
     Engine:
      Version:          18.09.1
      API version:      1.39 (minimum version 1.12)
      Go version:       go1.10.6
      Git commit:       4c52b90
      Built:            Wed Jan 9 19:06:30 2019
      OS/Arch:          linux/amd64
      Experimental:     false
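
This section relies on two minimum versions: container-selinux 2.9 or later, and (for the mirror setup in 5.3) Docker 1.12 or later. A minimal sketch for checking such minimums in a script, assuming GNU `sort -V` for version ordering; `version_ge` is a hypothetical helper:

```shell
#!/bin/bash
# version_ge A B  (hypothetical helper): succeeds if version A >= version B.
# Relies on GNU sort's -V (natural version ordering), so 2.10 > 2.9.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage on a node:
#   version_ge "$(docker version --format '{{.Server.Version}}')" 1.12 && echo "docker ok"
#   version_ge "$(rpm -q --qf '%{VERSION}' container-selinux)" 2.9 && echo "container-selinux ok"
```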

5.3 Tuning Docker on the Nodes

    # daocloud provides a Docker registry mirror (accelerator). This command is copied from the daocloud
    # site; since the Docker version is >= 1.12, the script configures the mirror as a JSON setting
    [root@ZhangSiming etcd]# curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io
    {"registry-mirrors": ["http://f1361db2.m.daocloud.io"]}
    Success.
    You need to restart docker to take effect: sudo systemctl restart docker
    # Restart Docker after installing the mirror
    [root@ZhangSiming etcd]# systemctl restart docker
    # Start an nginx container (if the image is not present locally, it is pulled first)
    [root@ZhangSiming etcd]# docker run -dit nginx
    Unable to find image 'nginx:latest' locally
    latest: Pulling from library/nginx
    5e6ec7f28fb7: Pull complete
    ab804f9bbcbe: Pull complete
    052b395f16bc: Pull complete
    Digest: sha256:f630aa07be1af4ce6cbe1d5e846bb6bb3b5ba6bfec0ec0edc08ba48d8c1d9b0f
    Status: Downloaded newer image for nginx:latest
    04f73c49514fcca4c1d48efe706537cf2b2378a842feb4aa937f06ea1b537f57
    # The nginx image was pulled and the container started; thanks to the mirror this is very fast
    [root@ZhangSiming etcd]# docker ps -a
    CONTAINER ID   IMAGE   COMMAND                  CREATED          STATUS          PORTS    NAMES
    04f73c49514f   nginx   "nginx -g 'daemon of…"   28 seconds ago   Up 27 seconds   80/tcp   eager_panini
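
The output above shows that the mirror script amounts to a `registry-mirrors` setting. A minimal sketch that writes the same setting by hand to Docker's daemon config file (/etc/docker/daemon.json by default on Linux); `write_mirror_config` is a hypothetical helper, it assumes the file holds no other settings because it overwrites it, and the path is a parameter so it can be tried elsewhere first:

```shell
#!/bin/bash
# write_mirror_config MIRROR_URL [FILE]  (hypothetical helper)
# Writes a daemon.json containing only a registry-mirrors entry.
# WARNING: overwrites FILE; merge by hand if it already has settings.
write_mirror_config() {
    local mirror=$1 file=${2:-/etc/docker/daemon.json}
    mkdir -p "$(dirname "$file")"
    cat > "$file" << EOF
{
  "registry-mirrors": ["${mirror}"]
}
EOF
}

# Usage on a node; Docker must be restarted for the mirror to take effect:
#   write_mirror_config http://f1361db2.m.daocloud.io
#   systemctl restart docker
```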

六、Flannel容器集群网络部署

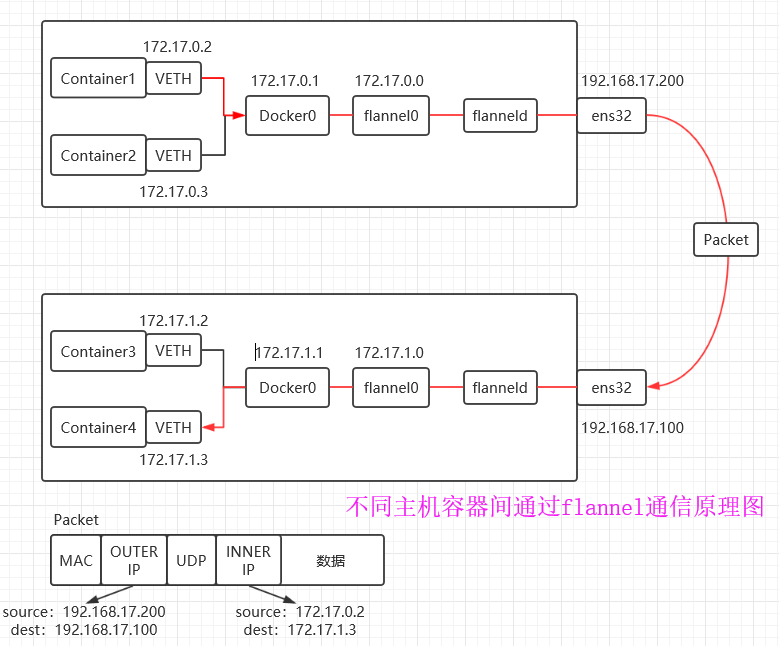

6.1基于Flannel实现不同主机容器间通信的工作原理

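Overlay network: a virtual network layered on top of the underlying network; the hosts in it are connected by virtual links.

VXLAN: encapsulates the original packet in UDP, using the underlying network's IP/MAC as the outer headers, and sends it over Ethernet; at the destination, the tunnel endpoint decapsulates it and delivers the data to the target address.

Flannel: one kind of overlay network. It likewise wraps the original packet inside another packet for routing and forwarding, and currently supports UDP, VXLAN, AWS VPC, GCE routes and other forwarding backends.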

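1. When container1 on host A wants to talk to container2 on host B, it first sends the packet to its gateway, docker0;

2. docker0 hands the packet to flannel's interface (flannel.1 in the VXLAN mode used here), which encapsulates it and sends it out of host A's ens32 NIC onto the Ethernet;

3. Host B's ens32 NIC receives the packet, it is decapsulated on host B's flannel interface, and it then travels through host B's docker0 to container2.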

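Note:

flanneld keeps a routing table in Etcd that records, for each container subnet, which host it lives on, i.e. which host a packet has to be sent to. flanneld first allocates a subnet for its host and stores it in Etcd, and the containers on that host get their IPs from that subnet; that is why containers on different hosts can reach each other. This is the flannel overlay network.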
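
The routing-table idea in the note can be illustrated with the two subnets that appear in 6.2 and 6.3 (172.17.63.0/24 on Node01, 172.17.98.0/24 on Node02). A minimal sketch, assuming one /24 per host and a hand-written lease table; in flannel the real table lives in Etcd under keys of the form /coreos.com/network/subnets/172.17.63.0-24, and `LEASES` and `host_for_ip` are hypothetical names:

```shell
#!/bin/bash
# Hypothetical lease table: flannel subnet -> host IP that owns it.
declare -gA LEASES=(
    [172.17.63.0/24]=192.168.17.132
    [172.17.98.0/24]=192.168.17.133
)

# host_for_ip IP: print the host that owns IP's subnet, or fail.
host_for_ip() {
    local ip=$1
    local subnet="${ip%.*}.0/24"        # assumes /24 per-host subnets
    if [ -n "${LEASES[$subnet]+set}" ]; then
        echo "${LEASES[$subnet]}"
    else
        return 1
    fi
}
```

For example, `host_for_ip 172.17.98.2` prints 192.168.17.133: a packet from the busybox container 172.17.63.2 to 172.17.98.2 has to be encapsulated and sent to Node02, which is the mapping the flannel.1 routes in 6.3 encode.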

6.2 Deploying the Flannel Network

6.2.1 Writing the Flannel Network Range into Etcd for flanneld

    # Write the network range flanneld allocates subnets from into Etcd, with VXLAN as the encapsulation type
    [root@ZhangSiming ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379" set /coreos.com/network/config '{"Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'
    {"Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
    # Read the value back with etcdctl get
    [root@ZhangSiming ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379" get /coreos.com/network/config
    {"Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

6.2.2 Downloading the Flannel Binary Package from GitHub and Extracting It into /opt/kubernetes/bin/

    [root@ZhangSiming opt]# mkdir -p kubernetes/{bin,ssl,cfg}
    [root@ZhangSiming opt]# cd kubernetes/bin/
    [root@ZhangSiming bin]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
    [root@ZhangSiming bin]# ls
    flannel-v0.11.0-linux-amd64.tar.gz
    [root@ZhangSiming bin]# tar xf flannel-v0.11.0-linux-amd64.tar.gz
    [root@ZhangSiming bin]# ls
    flanneld  flannel-v0.11.0-linux-amd64.tar.gz  mk-docker-opts.sh  README.md

6.2.3 Writing the flannel Service Script and Reconfiguring the Docker Service to Use the Flannel Subnet

    [root@ZhangSiming kubernetes]# cat flannel.sh
    #!/bin/bash
    # Designed by Zhangsiming
    # FLANNEL_OPTIONS is loaded through the flanneld unit's EnvironmentFile when the service starts
    cat >/opt/kubernetes/cfg/flanneld << EOF
    FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379 \
    -etcd-cafile=/opt/etcd/ssl/ca.pem \
    -etcd-certfile=/opt/etcd/ssl/server.pem \
    -etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    EOF

    cat <<EOF >/usr/lib/systemd/system/flanneld.service
    [Unit]
    Description=Flanneld overlay address etcd agent
    After=network-online.target network.target
    Before=docker.service

    [Service]
    Type=notify
    EnvironmentFile=/opt/kubernetes/cfg/flanneld
    # Reference FLANNEL_OPTIONS without braces: the braceless form is split at whitespace into
    # separate arguments, while the braced form would pass all the options as one single argument
    ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
    # After startup (Post), write the subnet file /run/flannel/subnet.env for Docker to use
    ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    # Rewrite the Docker unit so that Docker uses the subnet allocated by flannel
    cat <<EOF >/usr/lib/systemd/system/docker.service
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=/run/flannel/subnet.env
    # DOCKER_NETWORK_OPTIONS comes from /run/flannel/subnet.env: Docker uses the flannel overlay
    # subnet, which is what lets containers on different hosts talk to each other
    ExecStart=/usr/bin/dockerd \$DOCKER_NETWORK_OPTIONS
    ExecReload=/bin/kill -s HUP \$MAINPID
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TimeoutStartSec=0
    Delegate=yes
    KillMode=process
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s

    [Install]
    WantedBy=multi-user.target
    EOF

    # After editing systemd unit files, run daemon-reload
    systemctl daemon-reload
    systemctl enable flanneld
    # Restart the flanneld and Docker services
    systemctl restart flanneld
    systemctl restart docker

    # Take a look at the /run/flannel/subnet.env file generated when flanneld starts
    [root@ZhangSiming kubernetes]# cat /run/flannel/subnet.env
    DOCKER_OPT_BIP="--bip=172.17.63.1/24"
    DOCKER_OPT_IPMASQ="--ip-masq=false"
    DOCKER_OPT_MTU="--mtu=1450"
    DOCKER_NETWORK_OPTIONS=" --bip=172.17.63.1/24 --ip-masq=false --mtu=1450"
    # DOCKER_NETWORK_OPTIONS holds the allocated subnet and is referenced by the Docker unit
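
To check that dockerd really picked up the flannel subnet, the `--bip` value can be read back from /run/flannel/subnet.env and compared with the address on docker0. A minimal sketch; `flannel_bip` is a hypothetical helper that assumes the `DOCKER_OPT_BIP="--bip=..."` line format shown above:

```shell
#!/bin/bash
# flannel_bip [FILE]  (hypothetical helper)
# Prints the bridge address from the DOCKER_OPT_BIP line of a
# flannel-generated env file, e.g. 172.17.63.1/24.
flannel_bip() {
    local file=${1:-/run/flannel/subnet.env}
    sed -n 's/^DOCKER_OPT_BIP="--bip=\([^"]*\)"$/\1/p' "$file"
}

# Usage on a node; the two addresses should match:
#   flannel_bip
#   ip -4 addr show docker0 | awk '/inet /{print $2}'
```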

6.2.4 Running the Script and Checking That Docker Uses the Flannel Overlay Network

    [root@ZhangSiming kubernetes]# sh flannel.sh
    [root@ZhangSiming kubernetes]# ps -elf | grep flanneld
    4 S root 2413 1 0 80 0 - 78971 futex_ 23:31 ? 00:00:00 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem
    0 R root 3684 1195 0 80 0 - 28176 - 23:45 pts/0 00:00:00 grep --color=auto flanneld
    # The flanneld service started successfully
    [root@ZhangSiming kubernetes]# ps -elf | grep dockerd
    4 S root 2485 1 1 80 0 - 166806 do_wai 23:31 ? 00:00:01 /usr/bin3.1/24 --ip-masq=false --mtu=1450
    # Docker is using the subnet allocated by flannel

On K8S Node02, just scp the files over and run the script:

    # Two things must be copied: the /opt/kubernetes directory (flannel binaries and the service script),
    # and the self-signed certificates in /opt/etcd/ssl, because that is the certificate path we configured
    [root@ZhangSiming kubernetes]# scp -r /opt/ root@192.168.17.133:/opt/
    root@192.168.17.133's password:
    etcd 100% 18MB 28.6MB/s 00:00
    etcdctl 100% 15MB 24.0MB/s 00:00
    etcd 100% 516 761.0KB/s 00:00
    ca-config.json 100% 269 3.6KB/s 00:00
    ca.csr 100% 956 260.6KB/s 00:00
    ca-csr.json 100% 194 154.4KB/s 00:00
    ca-key.pem 100% 1679 1.4MB/s 00:00
    ca.pem 100% 1265 2.1MB/s 00:00
    server.csr 100% 1013 768.5KB/s 00:00
    server-csr.json 100% 294 127.1KB/s 00:00
    server-key.pem 100% 1679 2.3MB/s 00:00
    server.pem 100% 1338 2.6MB/s 00:00
    container-selinux-2.10-2.el7.noarch.rpm 100% 28KB 1.1MB/s 00:00
    flannel-v0.11.0-linux-amd64.tar.gz 100% 9342KB 18.2MB/s 00:00
    flanneld 100% 34MB 33.6MB/s 00:01
    mk-docker-opts.sh 100% 2139 1.6MB/s 00:00
    README.md 100% 4300 55.0KB/s 00:00
    flanneld 100% 236 199.1KB/s 00:00
    .flannel.sh.swp 100% 12KB 11.3MB/s 00:00
    .flannel.sh.swn 100% 12KB 4.5MB/s 00:00
    flannel.sh 100% 1489 1.5MB/s 00:00
    # Once copied, just run the script
    [root@ZhangSiming kubernetes]# sh flannel.sh
    [root@ZhangSiming kubernetes]# ps -elf | grep flanneld
    4 S root 2663 1 0 80 0 - 95884 futex_ 00:04 ? 00:00:00 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem
    0 R root 2908 1770 0 80 0 - 28176 - 00:05 pts/0 00:00:00 grep --color=auto flanneld
    [root@ZhangSiming kubernetes]# ps -elf | grep dockerd
    4 S root 2737 1 6 80 0 - 133445 futex_ 00:04 ? 00:00:01 /usr/bin/dockerd --bip=172.17.98.1/24 --ip-masq=false --mtu=1450
    0 R root 2916 1770 0 80 0 - 28176 - 00:05 pts/0 00:00:00 grep --color=auto dockerd
    # All nodes are now on the flannel overlay network

6.3 Testing Container Communication over the Flannel Network

After deployment, check the network interfaces with ifconfig:

    [root@ZhangSiming kubernetes]# ifconfig | grep -EA 1 flannel
    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 172.17.98.0  netmask 255.255.255.255  broadcast 0.0.0.0
    [root@ZhangSiming kubernetes]# ifconfig | grep -EA 1 docker0
    docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
            inet 172.17.98.1  netmask 255.255.255.0  broadcast 172.17.98.255
    # flannel allocated the 172.17.98.0/24 subnet to this host; docker0 and its containers get IPs from it
    [root@ZhangSiming kubernetes]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.17.2    0.0.0.0         UG    100    0        0 ens32
    172.17.63.0     172.17.63.0     255.255.255.0   UG    0      0        0 flannel.1
    172.17.98.0     0.0.0.0         255.255.255.0   U     0      0        0 docker0
    192.168.17.0    0.0.0.0         255.255.255.0   U     100    0        0 ens32
    # and the routes between the subnets were added to the routing table
    [root@ZhangSiming kubernetes]# ifconfig | grep -EA 1 flannel
    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 172.17.98.0  netmask 255.255.255.255  broadcast 0.0.0.0
    [root@ZhangSiming kubernetes]# ping 172.17.63.0
    PING 172.17.63.0 (172.17.63.0) 56(84) bytes of data.
    64 bytes from 172.17.63.0: icmp_seq=1 ttl=64 time=0.259 ms
    64 bytes from 172.17.63.0: icmp_seq=2 ttl=64 time=0.379 ms
    ^C
    --- 172.17.63.0 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1000ms
    rtt min/avg/max/mdev = 0.259/0.319/0.379/0.060 ms
    # The host on the 98 subnet can ping the gateway of the host on the 63 subnet
    # Start a test container on each of the two nodes and note its IP
    # busybox is a test image
    [root@ZhangSiming kubernetes]# docker run -it busybox
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    57c14dd66db0: Pull complete
    Digest: sha256:7964ad52e396a6e045c39b5a44438424ac52e12e4d5a25d94895f2058cb863a0
    Status: Downloaded newer image for busybox:latest
    / # ifconfig | grep -A 1 eth0
    eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:3F:02
              inet addr:172.17.63.2  Bcast:172.17.63.255  Mask:255.255.255.0
    # This container is on the 63 subnet; ping the other node's container on the 98 subnet
    / # ping 172.17.98.2
    PING 172.17.98.2 (172.17.98.2): 56 data bytes
    64 bytes from 172.17.98.2: seq=0 ttl=62 time=0.459 ms
    64 bytes from 172.17.98.2: seq=1 ttl=62 time=2.668 ms
    # The containers can ping each other, so the flannel network covers the containers: success
    # Finally, don't forget to enable flanneld at boot
    [root@ZhangSiming kubernetes]# systemctl enable flanneld

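7. Deploying the Master Components

The Master components must be deployed in order: kube-apiserver first, then kube-controller-manager and kube-scheduler.

The procedure for each component is the same as above:

1. Prepare the self-signed certificates

2. Write the config file

3. Manage it with systemd

4. Start the component

5. Verify that it is running

7.1 Generating the Self-Signed Certificates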

    [root@ZhangSiming ~]# mkdir K8S/k8s-cert
    [root@ZhangSiming ~]# cd K8S/k8s-cert
    # A dedicated directory for the K8S certificates
    [root@ZhangSiming k8s-cert]# mv ~/k8s-cert.sh .
    [root@ZhangSiming k8s-cert]# ls
    k8s-cert.sh
    # The certificate script, similar to the Etcd one
    [root@ZhangSiming k8s-cert]# cat k8s-cert.sh
    # JSON allows no comments, so the notes are kept outside the heredocs.
    # In client certificates, Kubernetes takes CN as the user name and O as the group.
    cat > ca-config.json <<EOF
    {
      "signing": {
        "default": {"expiry": "87600h"},
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }
    EOF

    cat > ca-csr.json <<EOF
    {
      "CN": "kubernetes",
      "key": {"algo": "rsa", "size": 2048},
      "names": [{"C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System"}]
    }
    EOF

    # Generate the CA root certificate
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

    #-----------------------
    # hosts: 192.168.17.130, .131, .134, .135 and .136 are Master01, Master02,
    # the master load balancer's own IP, the VIP and the backup load balancer's IP
    cat > server-csr.json <<EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "192.168.17.130",
        "192.168.17.131",
        "192.168.17.134",
        "192.168.17.135",
        "192.168.17.136",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {"algo": "rsa", "size": 2048},
      "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}]
    }
    EOF

    # Sign the apiserver certificate with the CA
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

    #-----------------------
    # admin certificate for kubectl; O=system:masters is the group Kubernetes binds to cluster-admin by default
    cat > admin-csr.json <<EOF
    {
      "CN": "admin",
      "hosts": [],
      "key": {"algo": "rsa", "size": 2048},
      "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:masters", "OU": "System"}]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

    #-----------------------
    # kube-proxy certificate; CN=system:kube-proxy is the user name kube-proxy authenticates as
    cat > kube-proxy-csr.json <<EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {"algo": "rsa", "size": 2048},
      "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}]
    }
    EOF

    # Sign the kube-proxy certificate with the CA
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

    [root@ZhangSiming k8s-cert]# sh k8s-cert.sh &>/dev/null
    [root@ZhangSiming k8s-cert]# ls
    admin.csr       ca.csr       kube-proxy.csr       server-csr.json
    admin-csr.json  ca-csr.json  kube-proxy-csr.json  server-key.pem
    admin-key.pem   ca-key.pem   kube-proxy-key.pem   server.pem
    admin.pem       ca.pem       kube-proxy.pem
    ca-config.json  k8s-cert.sh  server.csr
    # All certificates were generated successfully
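
The server certificate lists 10.0.0.1 because the built-in `kubernetes` Service receives the first address of the Service range set by `--service-cluster-ip-range=10.0.0.0/24` in 7.2.1. If that range is ever changed, the certificate has to change with it. A small sketch that derives the address, assuming an IPv4 CIDR; `first_service_ip` is a hypothetical helper:

```shell
#!/bin/bash
# first_service_ip CIDR  (hypothetical helper)
# Prints network address + 1 for an IPv4 CIDR, e.g. 10.0.0.0/24 -> 10.0.0.1.
first_service_ip() {
    local ip=${1%/*} len=${1#*/}
    local a b c d
    IFS=. read -r a b c d <<< "$ip"
    local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    n=$(( (n & mask) + 1 ))          # network address, then the first host
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Usage: first_service_ip 10.0.0.0/24   # the address to keep in server-csr.json "hosts"
```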

7.2 Deploying the Master Components

7.2.1 Deploying kube-apiserver

    [root@ZhangSiming K8S]# cd k8smaster-soft/
    [root@ZhangSiming k8smaster-soft]# ls
    apiserver.sh           kubernetes-server-linux-amd64.tar.gz
    controller-manager.sh  scheduler.sh
    [root@ZhangSiming k8smaster-soft]# mkdir -p /opt/kubernetes/{bin,cfg,ssl}
    # Extract the downloaded Kubernetes binary package
    [root@ZhangSiming k8smaster-soft]# tar xf kubernetes-server-linux-amd64.tar.gz
    [root@ZhangSiming K8S]# ls kubernetes/
    addons  kubernetes-src.tar.gz  LICENSES  server
    # addons contains optional add-ons
    [root@ZhangSiming K8S]# ls kubernetes/server/bin/
    apiextensions-apiserver              kube-controller-manager.tar
    cloud-controller-manager             kubectl
    cloud-controller-manager.docker_tag  kubelet
    cloud-controller-manager.tar         kube-proxy
    hyperkube                            kube-proxy.docker_tag
    kubeadm                              kube-proxy.tar
    kube-apiserver                       kube-scheduler
    kube-apiserver.docker_tag            kube-scheduler.docker_tag
    kube-apiserver.tar                   kube-scheduler.tar
    kube-controller-manager              mounter
    kube-controller-manager.docker_tag
    # We need kube-apiserver, kubectl, kube-controller-manager, kube-scheduler and kubelet (kube-proxy is
    # copied as well); copy them into the /opt/kubernetes/bin directory created above
    [root@ZhangSiming K8S]# cd kubernetes/server/bin/
    [root@ZhangSiming bin]# cp kubelet kubectl kube-proxy kube-scheduler kube-controller-manager kube-apiserver /opt/kubernetes/bin/
    [root@ZhangSiming bin]# ls /opt/kubernetes/bin/
    kube-apiserver           kubectl  kube-proxy
    kube-controller-manager  kubelet  kube-scheduler
    # Binaries copied successfully
    # Copy the certificates
    [root@ZhangSiming K8S]# cd k8s-cert/
    [root@ZhangSiming k8s-cert]# cp * /opt/kubernetes/ssl/
    [root@ZhangSiming k8s-cert]# ls /opt/kubernetes/ssl/
    admin.csr       ca.csr       kube-proxy.csr       server-csr.json
    admin-csr.json  ca-csr.json  kube-proxy-csr.json  server-key.pem
    admin-key.pem   ca-key.pem   kube-proxy-key.pem   server.pem
    admin.pem       ca.pem       kube-proxy.pem
    ca-config.json  k8s-cert.sh  server.csr
    [root@ZhangSiming k8s-cert]# ls /opt/etcd/ssl
    ca-config.json  ca-csr.json  ca.pem      server-csr.json  server.pem
    ca.csr          ca-key.pem   server.csr  server-key.pem
    # The Kubernetes component certificates and the Etcd certificates are all in place
    [root@ZhangSiming ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379" cluster-health
    member 3a6f8c78a708ea9 is healthy: got healthy result from https://192.168.17.132:2379
    member 5658b2b12e105fd0 is healthy: got healthy result from https://192.168.17.131:2379
    member 9d10fc7919c17197 is healthy: got healthy result from https://192.168.17.130:2379
    cluster is healthy
    # The Etcd cluster is healthy

Next, generate the kube-apiserver config file and systemd unit:

    [root@ZhangSiming k8smaster-soft]# cat apiserver.sh
    #!/bin/bash
    # The script takes two arguments: this master's own IP and the Etcd cluster endpoints
    MASTER_ADDRESS=$1
    ETCD_SERVERS=$2

    # Notes on the options (kept outside the quoted string, which must not contain comments):
    #   --logtostderr=true --v=4       log to stderr at verbosity level 4
    #   --etcd-servers                 the Etcd cluster endpoints
    #   --bind-address                 this master's own IP
    #   --secure-port=6443             HTTPS (secure) port
    #   --service-cluster-ip-range     virtual IP range for Services
    #   --enable-admission-plugins     admission control plugins
    #   --authorization-mode           authorization modes
    #   --enable-bootstrap-token-auth  enable bootstrap-token authentication
    #   --token-auth-file              token file; it must be created before the apiserver starts
    #   --service-node-port-range      port range for NodePort Services
    #   the remaining options point to the certificates
    cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
    KUBE_APISERVER_OPTS="--logtostderr=true \\
    --v=4 \\
    --etcd-servers=${ETCD_SERVERS} \\
    --bind-address=${MASTER_ADDRESS} \\
    --secure-port=6443 \\
    --advertise-address=${MASTER_ADDRESS} \\
    --allow-privileged=true \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
    --authorization-mode=RBAC,Node \\
    --kubelet-https=true \\
    --enable-bootstrap-token-auth \\
    --token-auth-file=/opt/kubernetes/cfg/token.csv \\
    --service-node-port-range=30000-50000 \\
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \\
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --etcd-cafile=/opt/etcd/ssl/ca.pem \\
    --etcd-certfile=/opt/etcd/ssl/server.pem \\
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    EOF

    cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    # Config file location
    EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
    # Start kube-apiserver with the options defined in the config file
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    # Load the new unit, enable it at boot and (re)start it
    systemctl daemon-reload
    systemctl enable kube-apiserver
    systemctl restart kube-apiserver

    # Create the token-auth file
    [root@ZhangSiming k8smaster-soft]# touch /opt/kubernetes/cfg/token.csv
    [root@ZhangSiming k8smaster-soft]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '
    5e479c0f03a3261a0e15bf05ff272831
    [root@ZhangSiming k8smaster-soft]# echo "5e479c0f03a3261a0e15bf05ff272831" > /opt/kubernetes/cfg/token.csv
    [root@ZhangSiming k8smaster-soft]# vim /opt/kubernetes/cfg/token.csv
    [root@ZhangSiming k8smaster-soft]# cat /opt/kubernetes/cfg/token.csv
    5e479c0f03a3261a0e15bf05ff272831,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    # Format: token,user,uid,"group". It is used later when deploying the nodes; ignore it for now.
    # Run the script with its two arguments
    [root@ZhangSiming k8smaster-soft]# sh apiserver.sh 192.168.17.130 https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379
    [root@ZhangSiming k8s-cert]# ps -elf | grep api
    4 S root 2536 1 77 80 0 - 71336 futex_ 20:11 ? 00:00:04 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379 --bind-address=192.168.17.130 --secure-port=6443 --advertise-address=192.168.17.130 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem
    0 R root 2544 2324 0 80 0 - 28176 - 20:11 pts/1 00:00:00 grep --color=auto api
    # kube-apiserver started successfully
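
The token.csv entry above was assembled by hand (generate, echo, then edit in vim). A minimal sketch that produces the same four-field line in one step with the same `head | od | tr` pipeline; `make_bootstrap_token_line` is a hypothetical helper, and its defaults are the user and UID used above with the group written as `system:kubelet-bootstrap`:

```shell
#!/bin/bash
# make_bootstrap_token_line [USER] [UID] [GROUP]  (hypothetical helper)
# Prints one token.csv line: token,user,uid,"group", where the token is
# 16 random bytes rendered as 32 lowercase hex characters.
make_bootstrap_token_line() {
    local user=${1:-kubelet-bootstrap} uid=${2:-10001}
    local group=${3:-system:kubelet-bootstrap}
    local token
    token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
    printf '%s,%s,%s,"%s"\n' "$token" "$user" "$uid" "$group"
}

# Usage on Master01, before running apiserver.sh:
#   make_bootstrap_token_line > /opt/kubernetes/cfg/token.csv
```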

7.2.2 Deploying kube-controller-manager

[root@ZhangSiming k8smaster-soft]# netstat -antup | grep 8080
tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 2715/kube-apiserver
#Port 8080 is the apiserver's insecure port. The controller-manager (and, below, the scheduler) connect to the apiserver through it
#Script that generates the controller-manager options file and its systemd unit
[root@ZhangSiming k8smaster-soft]# cat controller-manager.sh
#!/bin/bash
# The apiserver runs on the same host, so 127.0.0.1 is passed in
MASTER_ADDRESS=$1

# Flag notes (kept outside the heredoc so they do not end up in the generated file):
#   --master                    reach the apiserver through the insecure port 8080
#   --leader-elect              leader election, for HA when several controller-managers run
#   --service-cluster-ip-range  Service virtual subnet, must match the apiserver
#   --cluster-signing-*         CA certificate and key used to sign certificates (e.g. kubelet CSRs)
#   --experimental-cluster-signing-duration  validity of the signed certificates (87600h = 10 years)
cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect=true \\
--address=127.0.0.1 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-name=kubernetes \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"
EOF

# systemd unit, similar to the apiserver's
cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager
#Run the script, passing 127.0.0.1
[root@ZhangSiming k8smaster-soft]# sh controller-manager.sh 127.0.0.1
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
[root@ZhangSiming k8smaster-soft]# ps -elf | grep controller-manager
4 S root 2804 1 3 80 0 - 34587 ep_pol 20:25 ? 00:00:01 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --experimental-cluster-signing-duration=87600h0m0s
0 S root 2811 2324 0 80 0 - 28176 pipe_w 20:26 pts/1 00:00:00 grep --color=auto controller-manager
#The controller-manager service started successfully
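The netstat output shows the insecure port 8080 bound to 127.0.0.1 only. This matches the kube-apiserver 1.12 default `--insecure-bind-address`, and it is why the controller-manager and scheduler run on the same host and are given 127.0.0.1. Here is a small sketch for checking this. It assumes the `netstat -antup` column order (local address in field 4, state in field 6). The function name `check_insecure_port` is made up for illustration.

```shell
#!/bin/sh
# check_insecure_port [PORT]  (hypothetical helper)
# Reads `netstat -antup`-style lines on stdin and prints one of:
#   loopback-only  - PORT listens only on 127.0.0.1 / ::1
#   exposed        - PORT also listens on a non-loopback address (e.g. 0.0.0.0 or ::)
#   not-listening  - nothing listens on PORT
check_insecure_port() {
    port=${1:-8080}
    awk -v port="$port" '
        $6 == "LISTEN" {
            n = split($4, a, ":")            # field 4 is the local address, e.g. 127.0.0.1:8080
            if (a[n] == port) {
                found = 1
                addr = substr($4, 1, length($4) - length(port) - 1)
                if (addr != "127.0.0.1" && addr != "::1") exposed = 1
            }
        }
        END {
            if (!found) print "not-listening"
            else if (exposed) print "exposed"
            else print "loopback-only"
        }'
}
```

For example, run `netstat -antup | check_insecure_port 8080` on the Master. Any result other than `loopback-only` means the unauthenticated port can be reached from the network.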

7.2.3 Deploy the scheduler

[root@ZhangSiming k8smaster-soft]# cat scheduler.sh
#!/bin/bash
MASTER_ADDRESS=$1

# Flag notes (kept outside the heredoc so they do not end up in the generated file):
#   --logtostderr / --v=4  log to stderr (collected by the journal) at verbosity level 4
#   --master               the scheduler also reaches the apiserver through port 8080
#   --leader-elect         enable leader election for HA
cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler
#Run the script
[root@ZhangSiming k8smaster-soft]# sh scheduler.sh 127.0.0.1
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
[root@ZhangSiming k8smaster-soft]# ps -elf | grep scheduler
4 S root 2873 1 0 80 0 - 11075 futex_ 20:31 ? 00:00:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect
#The scheduler service started successfully
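To confirm that a running component picked up the flags the script wrote, you can pull the value of a flag out of its command line. Here is a minimal sketch. It assumes flags are written as `--name=value` or as a bare `--name`, as in the ps output above. `get_flag` is a hypothetical helper.

```shell
#!/bin/sh
# get_flag FLAG  (hypothetical helper)
# Reads a command line on stdin and prints the value of FLAG.
# "--flag=value" prints value; a bare "--flag" prints "true".
# Returns non-zero when the flag is absent.
get_flag() {
    flag=$1
    tr ' ' '\n' | awk -v f="$flag" '
        index($0, f "=") == 1 { print substr($0, length(f) + 2); found = 1; exit }
        $0 == f               { print "true"; found = 1; exit }
        END { if (!found) exit 1 }'
}
```

For example, `ps -o args= -C kube-scheduler | get_flag --master` should print `127.0.0.1:8080`. Matching by command name with `ps -C` works for kube-scheduler. It would not work for kube-controller-manager, whose process name is longer than the 15 characters `ps` compares.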

Verify the connection status of the apiserver, controller-manager and scheduler

[root@ZhangSiming k8smaster-soft]# /opt/kubernetes/bin/kubectl get cs
NAME STATUS MESSAGE ERROR
controller-manager Healthy ok
scheduler Healthy ok
etcd-1 Healthy {"health":"true"}
etcd-2 Healthy {"health":"true"}
etcd-0 Healthy {"health":"true"}
#All three components are connected. If anything reports an error, check the log /var/log/messages first
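Scanning the `kubectl get cs` table by eye gets tedious as components are added. Here is a sketch that prints every component whose STATUS is not `Healthy` and returns non-zero if there are any. It assumes the column layout shown above, and `unhealthy_components` is a hypothetical name.

```shell
#!/bin/sh
# unhealthy_components  (hypothetical helper)
# Reads `kubectl get cs` output on stdin (first line is the header),
# prints the NAME of every row whose STATUS column is not "Healthy",
# and exits 1 if at least one such row exists.
unhealthy_components() {
    awk 'NR > 1 && $2 != "Healthy" { print $1; bad = 1 }
         END { exit bad ? 1 : 0 }'
}
```

For example: `kubectl get cs | unhealthy_components || tail -n 50 /var/log/messages`.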

VIII. Deploy the Node components

Steps for deploying the Node components (the certificates were already issued when the Master components were deployed):

1. Bind the kubelet-bootstrap user to a system cluster role

2. Create the kubeconfig files

3. Deploy the kubelet and kube-proxy components

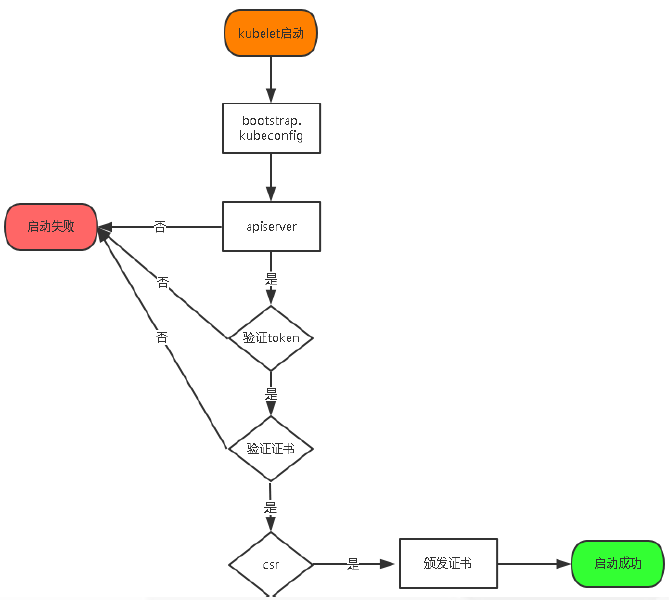

How a Node is added to the K8S cluster

1. When kubelet starts, the cluster must already know the kubelet-bootstrap user and allow it to request certificates;

2. The kubeconfig file (bootstrap.kubeconfig) is checked;

3. kubelet then connects to the apiserver, the token is authenticated and the certificates are verified;

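4. The K8S cluster (Master) then sees the certificate signing request (CSR) that the Node sent as the kubelet-bootstrap user. Once the CSR is approved (manually with kubectl certificate approve in this guide), the controller-manager signs a certificate for the Node with the cluster CA, and the Node is admitted into the K8S cluster.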

8.1 Bind the kubelet-bootstrap user to a system cluster role

#Prerequisite: copy kubelet, kube-proxy, kubectl and the certificates to the Node servers
[root@ZhangSiming kubernetes]# cat cfg/token.csv
5e479c0f03a3261a0e15bf05ff272831,kubelet-bootstrap,10001,"system:kuberlet-bootstrap"
#token.csv names the user kubelet-bootstrap, but the user only gets permissions once it is actually bound to a cluster role
[root@ZhangSiming kubernetes]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created
#This kubelet-bootstrap binding must be created on the Master, i.e. in the K8S cluster; otherwise the kubelet service will fail to start later

8.2 Create the kubeconfig files

#All of the following steps are done on the Node
[root@ZhangSiming ~]# echo "PATH=$PATH:/opt/kubernetes/bin/" >> /etc/profile
[root@ZhangSiming ~]# source /etc/profile
[root@ZhangSiming ~]# which kubectl
/opt/kubernetes/bin/kubectl
#kubectl is now on the PATH
#Script that creates the kubeconfig files
[root@ZhangSiming ~]# cat kubeconfig.sh
#!/bin/bash
# Two arguments: the apiserver address and the certificate directory
APISERVER=$1
SSL_DIR=$2
export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters
# (--embed-certs=true writes the CA certificate into the kubeconfig; the output file is bootstrap.kubeconfig)
kubectl config set-cluster kubernetes \
--certificate-authority=$SSL_DIR/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig

# Set the client credentials
# (the token is copied from /opt/kubernetes/cfg/token.csv generated on the Master)
kubectl config set-credentials kubelet-bootstrap \
--token=5e479c0f03a3261a0e15bf05ff272831 \
--kubeconfig=bootstrap.kubeconfig

# Set the context parameters (the user added above)
kubectl config set-context default \
--cluster=kubernetes \
--user=kubelet-bootstrap \
--kubeconfig=bootstrap.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file
kubectl config set-cluster kubernetes \
--certificate-authority=$SSL_DIR/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
--client-certificate=$SSL_DIR/kube-proxy.pem \
--client-key=$SSL_DIR/kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
#The script produces two files: bootstrap.kubeconfig and kube-proxy.kubeconfig
#Move the script to /opt/kubernetes/kubeconfig and run it there
[root@ZhangSiming kubeconfig]# pwd
/opt/kubernetes/kubeconfig
[root@ZhangSiming kubeconfig]# ls
kubeconfig.sh
[root@ZhangSiming kubeconfig]# sh kubeconfig.sh 192.168.17.130 /opt/kubernetes/ssl/
#A single Master for now
Cluster "kubernetes" set.
User "kubelet-bootstrap" set.
Context "default" created.
Switched to context "default".
Cluster "kubernetes" set.
User "kube-proxy" set.
Context "default" created.
Switched to context "default".
[root@ZhangSiming kubeconfig]# ls
bootstrap.kubeconfig kubeconfig.sh kube-proxy.kubeconfig
#Both files were generated
[root@ZhangSiming kubeconfig]# mv bootstrap.kubeconfig kube-proxy.kubeconfig ../cfg/
#Move the kubeconfig files into cfg
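kubeconfig.sh hard-codes the token copied from token.csv, so the two can silently drift apart if the token is regenerated. Here is a minimal sketch that reads the token from the file instead. It assumes a copy of token.csv is available where the script runs. `token_for_user` is a hypothetical helper.

```shell
#!/bin/sh
# token_for_user CSV [USER]  (hypothetical helper)
# Prints the token (column 1) of the token.csv line whose user (column 2) matches USER.
# Returns non-zero if no line matches.
token_for_user() {
    csv=$1
    user=${2:-kubelet-bootstrap}
    awk -F, -v u="$user" '$2 == u { print $1; found = 1; exit }
                          END { if (!found) exit 1 }' "$csv"
}

# Possible use inside kubeconfig.sh (the path is an assumption):
#   BOOTSTRAP_TOKEN=$(token_for_user /opt/kubernetes/cfg/token.csv) || exit 1
#   kubectl config set-credentials kubelet-bootstrap --token="$BOOTSTRAP_TOKEN" --kubeconfig=bootstrap.kubeconfig
```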

8.3 Deploy kubelet

[root@ZhangSiming ~]# cat kubelet.sh
#!/bin/bash
# Arguments: the Node IP and the cluster DNS virtual IP (default 10.0.0.2; you can also run your own DNS)
NODE_ADDRESS=$1
DNS_SERVER_IP=${2:-"10.0.0.2"}

# Flag notes (kept outside the heredoc so they do not end up in the generated file):
#   --v=4                 log verbosity level 4
#   --address             the Node IP
#   --hostname-override   the name K8S shows for this Node; the IP is used here as well
#   --kubeconfig / --experimental-bootstrap-kubeconfig / --config:
#       bootstrap.kubeconfig was created in 8.2, kubelet.config is written below,
#       and kubelet.kubeconfig is written by kubelet itself once its certificate is issued
cat <<EOF >/opt/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \\
--v=4 \\
--address=${NODE_ADDRESS} \\
--hostname-override=${NODE_ADDRESS} \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet.config \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF

# Node address and port, and the DNS server handed to Pods
cat <<EOF >/opt/kubernetes/cfg/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: ${NODE_ADDRESS}
port: 10250
cgroupDriver: cgroupfs
clusterDNS:
- ${DNS_SERVER_IP}
clusterDomain: cluster.local.
failSwapOn: false
EOF

# systemd unit
cat <<EOF >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
#Run the script
[root@ZhangSiming ~]# sh kubelet.sh 192.168.17.132 10.0.0.2
#The script ran successfully
[root@ZhangSiming ~]# systemctl start kubelet
[root@ZhangSiming ~]# ps -elf | grep kubelet
4 S root 34950 1 1 80 0 - 94480 futex_ 00:46 ? 00:00:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --address=192.168.17.132 --hostname-override=192.168.17.132 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
#The kubelet service started successfully
#Look at the generated kubelet options file
[root@ZhangSiming cfg]# cat kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--address=192.168.17.132 \
--hostname-override=192.168.17.132 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet.config \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
#The last option is the Pod infrastructure (pause) container image. Its registry address must be reachable, otherwise Pods fail to start
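The clusterDNS address in kubelet.config (10.0.0.2) is the ClusterIP the cluster DNS Service is expected to receive later. It therefore has to lie inside the apiserver's `--service-cluster-ip-range` (10.0.0.0/24 here). Here is a small IPv4-only sketch for checking this before running kubelet.sh. `ip_in_cidr` is a hypothetical helper, and it does not validate malformed input.

```shell
#!/bin/sh
# ip_in_cidr IP CIDR  (hypothetical helper, IPv4 only)
# Succeeds (exit 0) if IP lies inside CIDR, e.g. ip_in_cidr 10.0.0.2 10.0.0.0/24
ip_in_cidr() {
    awk -v ip="$1" -v cidr="$2" '
        # dotted quad -> integer (awk numbers are doubles, exact up to 2^53)
        function to_int(s,   p) { split(s, p, "[.]"); return ((p[1] * 256 + p[2]) * 256 + p[3]) * 256 + p[4] }
        BEGIN {
            split(cidr, c, "/")
            size = 2 ^ (32 - c[2])          # number of addresses in the block
            base = to_int(c[1])
            base -= base % size             # network address of the block
            addr = to_int(ip)
            exit !(addr >= base && addr < base + size)
        }'
}
```

For example: `ip_in_cidr 10.0.0.2 10.0.0.0/24 && sh kubelet.sh 192.168.17.132 10.0.0.2`.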

8.4 Deploy kube-proxy

[root@ZhangSiming ~]# cat proxy.sh
#!/bin/bash
# Argument: the IP of the current Node
NODE_ADDRESS=$1

# Flag notes (kept outside the heredoc so they do not end up in the generated file):
#   --hostname-override  must match the name kubelet uses for this Node
#   --cluster-cidr       documented upstream as the Pod CIDR; the author sets it to 10.0.0.0/24 here
#   --proxy-mode=ipvs    use IPVS forwarding, which is more efficient
#   --kubeconfig         kube-proxy.kubeconfig, generated in 8.2
cat <<EOF >/opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \\
--v=4 \\
--hostname-override=${NODE_ADDRESS} \\
--cluster-cidr=10.0.0.0/24 \\
--proxy-mode=ipvs \\
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
#Run the script
[root@ZhangSiming ~]# sh proxy.sh 192.168.17.132
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
[root@ZhangSiming ~]# ps -elf | grep kube-proxy
4 S root 35710 1 1 80 0 - 10397 futex_ 00:55 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=192.168.17.132 --cluster-cidr=10.0.0.0/24 --proxy-mode=ipvs --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
#The service started successfully
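`--proxy-mode=ipvs` depends on the IPVS kernel modules. If they are not loaded, kube-proxy falls back to iptables mode instead of failing, so the fallback is easy to miss. Here is a sketch that lists the missing modules. It assumes the module names of the CentOS 7 (3.10) kernel; kernels 4.19 and later use `nf_conntrack` instead of `nf_conntrack_ipv4`. `missing_ipvs_modules` is a hypothetical helper.

```shell
#!/bin/sh
# missing_ipvs_modules [MODULES_FILE]  (hypothetical helper)
# Prints each IPVS-related module not listed in MODULES_FILE (default /proc/modules)
# and returns 1 if any are missing.
missing_ipvs_modules() {
    file=${1:-/proc/modules}
    missing=0
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        # the module name is the first column of /proc/modules
        if ! awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$file"; then
            echo "$m"
            missing=1
        fi
    done
    return "$missing"
}

# On a Node, load whatever is missing before restarting kube-proxy:
#   for mod in $(missing_ipvs_modules); do modprobe "$mod"; done
```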

Join the Nodes to the K8S cluster

#Use csr to check whether there are certificate requests
[root@ZhangSiming kubernetes]# kubectl get csr
NAME AGE REQUESTOR CONDITION
node-csr-E9_6_jyQXKprDDIPiYQTE-AmoRKnBZEWfNeDzEwMde8 13m kubelet-bootstrap Pending
[root@ZhangSiming kubernetes]# kubectl certificate approve node-csr-E9_6_jyQXKprDDIPiYQTE-AmoRKnBZEWfNeDzEwMde8
#Approve the request to let the Node join the K8S cluster
certificatesigningrequest.certificates.k8s.io/node-csr-E9_6_jyQXKprDDIPiYQTE-AmoRKnBZEWfNeDzEwMde8 approved
[root@ZhangSiming kubernetes]# kubectl get node
NAME STATUS ROLES AGE VERSION
192.168.17.132 Ready <none> 8s v1.12.1
#Joined successfully
#Join the other Node the same way
[root@ZhangSiming kubernetes]# kubectl get node
NAME STATUS ROLES AGE VERSION
192.168.17.132 Ready <none> 10m v1.12.1
192.168.17.133 Ready <none> 4s v1.12.1
#Both Nodes have joined the K8S cluster: a cluster with a single Master and multiple Nodes is complete
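With several Nodes, you can collect the pending CSRs from the `kubectl get csr` table instead of copying each name by hand. Here is a sketch that only prints the approve commands. It assumes the four-column layout above and requests made by kubelet-bootstrap. Review the list before running it, because approving admits whichever machine sent the request. `pending_csr_approvals` is a hypothetical name.

```shell
#!/bin/sh
# pending_csr_approvals  (hypothetical helper)
# Reads `kubectl get csr` output on stdin and prints one
# `kubectl certificate approve <name>` command per Pending request from kubelet-bootstrap.
pending_csr_approvals() {
    awk 'NR > 1 && $3 == "kubelet-bootstrap" && $NF == "Pending" {
        print "kubectl certificate approve " $1
    }'
}

# Review first, then execute:
#   kubectl get csr | pending_csr_approvals
#   kubectl get csr | pending_csr_approvals | sh
```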

IX. Deploy an Nginx test example

A Pod is the smallest deployable unit in K8S. It consists of one or more containers and is what actually runs our workloads.

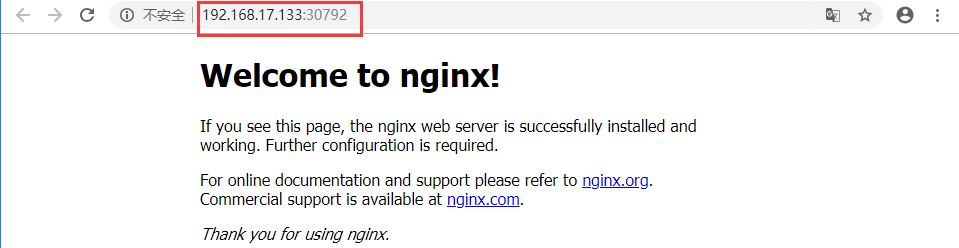

[root@ZhangSiming ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
192.168.17.132 Ready <none> 3d9h v1.12.1
192.168.17.133 Ready <none> 3d9h v1.12.1
#Both Nodes of the K8S cluster are ready
[root@ZhangSiming ~]# kubectl get pods
No resources found.
#No Pods are deployed yet
[root@ZhangSiming ~]# kubectl run nginx --image=nginx
#Start the nginx image as a Pod
kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.
deployment.apps/nginx created
[root@ZhangSiming ~]# kubectl get pods -o wide
#-o wide shows more details for each Pod
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE
nginx-dbddb74b8-6882f 1/1 Running 0 4m56s 172.17.22.2 192.168.17.132 <none>
#One Pod has been deployed; the scheduler placed it on Node 192.168.17.132
[root@ZhangSiming ~]# kubectl get all
NAME READY STATUS RESTARTS AGE
pod/nginx-dbddb74b8-6882f 1/1 Running 0 23s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 3d14h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
deployment.apps/nginx 1 1 1 1 23s

NAME DESIRED CURRENT READY AGE
replicaset.apps/nginx-dbddb74b8 1 1 1 23s
#kubectl run created a Deployment, which created a ReplicaSet, which in turn created the Pod. service/kubernetes is the built-in Service for the apiserver (note its age); this command did not create it
[root@ZhangSiming ~]# kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort
#--target-port=80 is the container port of the nginx Pod, --port=80 is the Service's port on its ClusterIP, and --type=NodePort also opens a port on every Node
service/nginx exposed
[root@ZhangSiming ~]# kubectl get svc nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
nginx NodePort 10.0.0.211 <none> 80:30792/TCP 10s
#Inside the cluster (from the Nodes) use 10.0.0.211:80; from outside (e.g. a PC) use <Node IP>:30792
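The NodePort (30792 above) is assigned automatically from the apiserver's `--service-node-port-range` (30000-50000 in the apiserver flags). `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` prints it directly. As a sketch, it can also be taken from the table shown above. This assumes PORT(S) is the fifth column and the Service has a single port. `node_port_of` and `in_node_port_range` are hypothetical helpers.

```shell
#!/bin/sh
# node_port_of  (hypothetical helper)
# Reads `kubectl get svc <name>` output on stdin and prints the NodePort
# from a PORT(S) value such as 80:30792/TCP.
node_port_of() {
    awk 'NR == 2 { split($5, p, "[:/]"); print p[2] }'
}

# in_node_port_range PORT [MIN] [MAX]  (hypothetical helper)
# Checks PORT against the range passed to --service-node-port-range (30000-50000 here).
in_node_port_range() {
    [ "$1" -ge "${2:-30000}" ] && [ "$1" -le "${3:-50000}" ]
}

# Example: np=$(kubectl get svc nginx | node_port_of) && curl -I "http://192.168.17.132:$np"
```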

Access tests

- Access from the Nodes

[root@ZhangSiming ~]# curl -I 10.0.0.211:80
HTTP/1.1 200 OK
Server: nginx/1.15.8
Date: Sun, 24 Feb 2019 03:14:05 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 25 Dec 2018 09:56:47 GMT
Connection: keep-alive
ETag: "5c21fedf-264"
Accept-Ranges: bytes

[root@ZhangSiming ~]# curl -I 10.0.0.211:80
HTTP/1.1 200 OK
Server: nginx/1.15.8
Date: Sun, 24 Feb 2019 03:14:05 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 25 Dec 2018 09:56:47 GMT
Connection: keep-alive
ETag: "5c21fedf-264"
Accept-Ranges: bytes
#Access succeeds from both Nodes

- Access from a browser

[root@ZhangSiming ~]# tail -3 /opt/kubernetes/cfg/kubelet.config
authentication:
  anonymous:
    enabled: true
#Write the 3 lines above into the kubelet config file on each Node; they allow anonymous authentication against the kubelet
[root@ZhangSiming ~]# systemctl restart kubelet
[root@ZhangSiming ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous
#Bind the system:anonymous user to cluster-admin, the highest privilege in the cluster, so that Pod logs can be read. Note that this gives every unauthenticated request full control of the cluster
clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created
[root@ZhangSiming ~]# kubectl logs nginx-dbddb74b8-6882f
#Show the log of the nginx Pod
10.0.0.211 - - [24/Feb/2019:02:55:24 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
172.17.52.0 - - [24/Feb/2019:02:55:34 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
172.17.52.0 - - [24/Feb/2019:02:57:56 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.109 Safari/537.36" "-"
2019/02/24 02:57:56 [error] 6#6: *3 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 172.17.52.0, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "192.168.17.133:30792", referrer: "http://192.168.17.133:30792/"
172.17.52.0 - - [24/Feb/2019:02:57:56 +0000] "GET /favicon.ico HTTP/1.1" 404 555 "http://192.168.17.133:30792/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.109 Safari/537.36" "-"
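The three authentication lines have to be added on every Node. Here is a sketch that appends them only when kubelet.config has no `authentication:` section yet, so running it twice is harmless. It does not merge into an existing section. `enable_kubelet_anonymous` is a hypothetical name.

```shell
#!/bin/sh
# enable_kubelet_anonymous FILE  (hypothetical helper)
# Appends the anonymous-authentication block to a kubelet.config file,
# unless the file already has a top-level "authentication:" key.
enable_kubelet_anonymous() {
    file=$1
    if grep -q '^authentication:' "$file"; then
        return 0    # already present; leave the file unchanged
    fi
    printf 'authentication:\n  anonymous:\n    enabled: true\n' >> "$file"
}

# On each Node:
#   enable_kubelet_anonymous /opt/kubernetes/cfg/kubelet.config && systemctl restart kubelet
```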

The test example has now been deployed successfully.

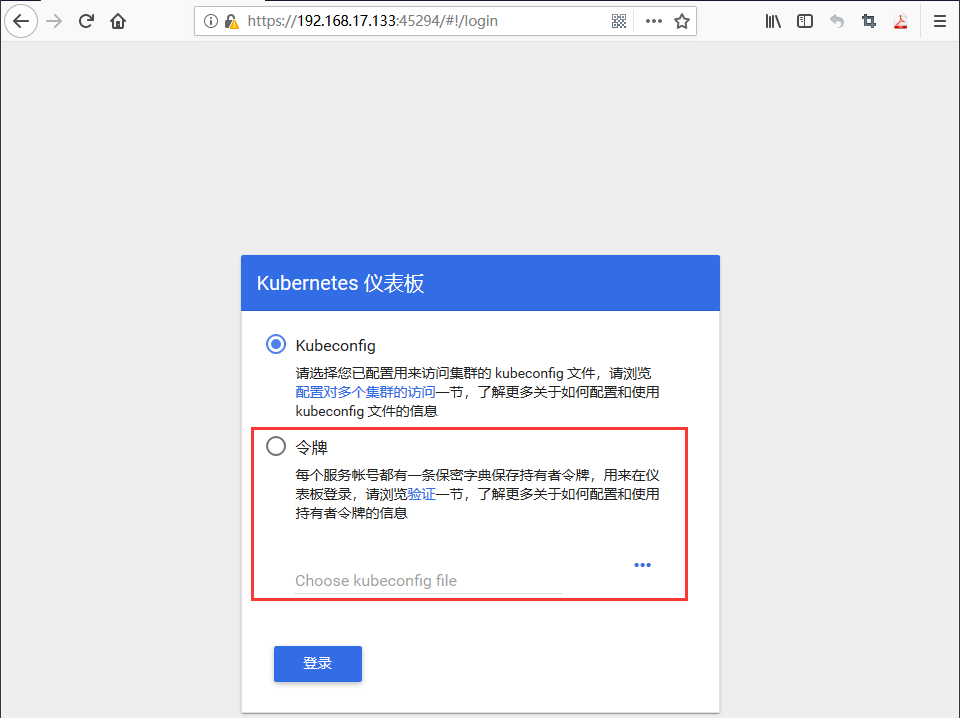

X. Deploy the K8S Web UI (Dashboard)

10.1 Download the K8S Web UI deployment YAML files

[root@ZhangSiming dashboard]# pwd
/root/K8S/kubernetes/cluster/addons/dashboard
[root@ZhangSiming dashboard]# ls
dashboard-configmap.yaml dashboard-secret.yaml OWNERS
dashboard-controller.yaml dashboard-service.yaml README.md
dashboard-rbac.yaml MAINTAINERS.md
#These are the YAML files for deploying the K8S Web UI. A YAML file decouples the deployment from individual commands: several resources are described in one document, which makes them easy to deploy and modify
[root@ZhangSiming dashboard]# cat dashboard-controller.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: registry.cn-hangzhou.aliyuncs.com/kuberneters/kubernetes-dashboard-amd64
        # Replaced with the address above: the default image registry is hosted abroad and is not reachable from mainland China
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

- Aliyun Docker Hub image download address:

#Create every YAML file and the K8S Web UI Pod is deployed
[root@ZhangSiming dashboard]# kubectl create -f dashboard-configmap.yaml
configmap/kubernetes-dashboard-settings created
[root@ZhangSiming dashboard]# kubectl create -f dashboard-controller.yaml
serviceaccount/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
[root@ZhangSiming dashboard]# kubectl create -f dashboard-rbac.yaml
role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
[root@ZhangSiming dashboard]# kubectl create -f dashboard-secret.yaml
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-key-holder created
[root@ZhangSiming dashboard]# kubectl get pods -n kube-system -o wide
#The dashboard is a system component in the kube-system namespace, so it is listed with get pods -n kube-system
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE
kubernetes-dashboard-5f5bfdc89f-wf5sw 1/1 Running 0 2m59s 172.17.52.2 192.168.17.133 <none>
#Expose the Service for external access
[root@ZhangSiming dashboard]# tail -6 dashboard-service.yaml
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443
#type: NodePort makes the Service reachable from outside the cluster. port: 443 is the Service's ClusterIP port and targetPort: 8443 is the container port; the port opened on the Nodes (45294 below) is assigned automatically
[root@ZhangSiming dashboard]# kubectl create -f dashboard-service.yaml
service/kubernetes-dashboard created
[root@ZhangSiming dashboard]# kubectl get -n kube-system svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes-dashboard NodePort 10.0.0.161 <none> 443:45294/TCP 5m14s

- Accessing the Web UI

Note: the login page could only be opened with Safari or Firefox. The URL is https://<Node IP>:45294, using the NodePort shown above.

#Log in with the token method
[root@ZhangSiming kubernetes]# cat k8s-admin.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dashboard-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
#This creates a ServiceAccount, dashboard-admin, for logging in to the Web UI and binds it to cluster-admin, the K8S administrator role with the highest privileges
[root@ZhangSiming kubernetes]# kubectl create -f k8s-admin.yaml
serviceaccount/dashboard-admin created
clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created
[root@ZhangSiming kubernetes]# kubectl get secret -n kube-system
NAME TYPE DATA AGE
dashboard-admin-token-h4dht kubernetes.io/service-account-token 3 14s
default-token-h6kgb kubernetes.io/service-account-token 3 3d15h
kubernetes-dashboard-certs Opaque 0 31m
kubernetes-dashboard-key-holder Opaque 2 31m
kubernetes-dashboard-token-5mk8p kubernetes.io/service-account-token 3 31m
[root@ZhangSiming kubernetes]# kubectl describe secret -n kube-system dashboard-admin-token-h4dht
#Show the value of dashboard-admin-token-h4dht
Name: dashboard-admin-token-h4dht
Namespace: kube-system
Labels: <none>
Annotations: kubernetes.io/service-account.name: dashboard-admin
             kubernetes.io/service-account.uid: 4917ffb2-37eb-11e9-9874-000c29192b70

Type: kubernetes.io/service-account-token

Data
====
ca.crt: 1359 bytes
namespace: 11 bytes
token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4taDRkaHQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDkxN2ZmYjItMzdlYi0xMWU5LTk4NzQtMDAwYzI5MTkyYjcwIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.AS1DzFtgZhQsy-Ns4U1-e8ffhbEKW20BM0rkwKS1EL_p-ewdX1BFIleIVDdykdNQk1coiY3gzciCUpD1qHQ92Q_p-gbLY9Lq8C4u8DTtC07L_NIf2H178-HdjjQc_LDlIU0oJ1MQ5iT51RV4VZHIe0dtK_kA2162OxB45lepqVpDSBaBDi1SARujRiOtqYkd6KD9GygSFDtvb0zB7GmpZM7lTEy0g9CXSzRwg6K1EUWpAeOjk3qHbJN55AGWemtneSYA2Vi_Z84sSi9B_OFShqAb-Lo0ol9b81NNE15s03zlttkC59BA2QRsY9_aK5iQ2OUBkirwyyzRQShMllZ9rA
#Copy the token above, paste it into the token login in the browser, and you are in the K8S Web UI

You are now logged in to the Web UI. Note that the token must be pasted as a single line, without any line breaks.
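Stray line breaks usually creep in while copying the token out of the `kubectl describe` output. Here is a sketch that prints just the token on one line. It assumes the `token:` row layout shown above, and `sa_token` is a hypothetical helper. As an alternative, `kubectl get secret <name> -n kube-system -o jsonpath='{.data.token}' | base64 -d` reads the same value straight from the Secret.

```shell
#!/bin/sh
# sa_token  (hypothetical helper)
# Reads `kubectl describe secret` output on stdin and prints the token value
# from the "token:" row as a single line.
sa_token() {
    awk '$1 == "token:" { print $2 }'
}

# Usage (the secret name differs per cluster; list it with `kubectl get secret -n kube-system`):
#   kubectl describe secret -n kube-system dashboard-admin-token-h4dht | sa_token
```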

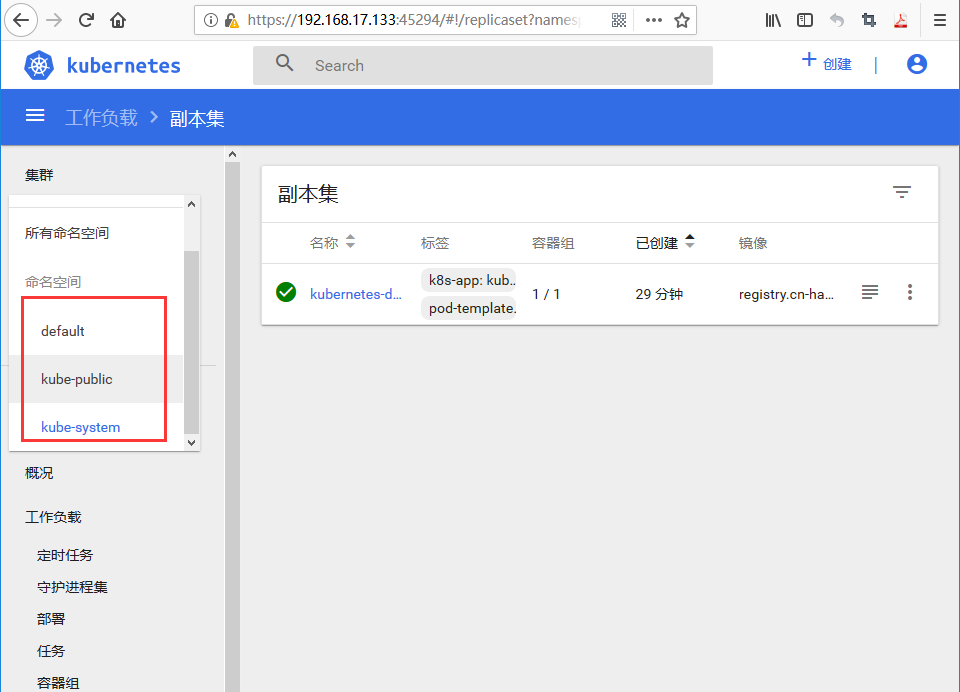

The K8S Web UI is mostly used for debugging and is rarely used in production.

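You can view the Pods in each namespace; a namespace acts as a virtual cluster. The Web UI Pod runs in the kube-system namespace and nginx runs in the default namespace. You can switch between them to view logs, open a shell in a container, and so on.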

XI. Deploy a high-availability architecture for the K8S Master

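In a K8S cluster it is mainly the Master that needs high availability. The Nodes all share an overlay network, so if one Node goes down the services are still reachable through the surviving Nodes (Pods managed by a controller such as a Deployment are rescheduled automatically). The Nodes are therefore not a single point of failure.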

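For the Master components, high availability mostly means high availability of the apiserver, because the scheduler and controller-manager already provide their own leader election (--leader-elect).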

#Deploy a Master node identical to Master01
[root@ZhangSiming cfg]# kubectl get cs
NAME STATUS MESSAGE ERROR
controller-manager Healthy ok
scheduler Healthy ok
etcd-0 Healthy {"health":"true"}
etcd-1 Healthy {"health":"true"}
etcd-2 Healthy {"health":"true"}
[root@ZhangSiming cfg]# kubectl get node
NAME STATUS ROLES AGE VERSION
192.168.17.132 Ready <none> 3d11h v1.12.1
192.168.17.133 Ready <none> 3d11h v1.12.1
#No Node connects to Master02 yet, but Master02 uses the same etcd cluster, so it sees the data in etcd and therefore detects the Nodes

- Deploy the load balancer for the Master apiservers

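#Nginx is used here as a reverse proxy to load-balance the Master apiservers
[root@ZhangSiming ~]# cat nginx.sh
#!/bin/bash
#designed by ZhangSiming
# Create the nginx program user
id nginx
if [ $? != 0 ];then
useradd nginx -s /sbin/nologin -M
fi
# Install the dependencies
yum -y install openssl-devel pcre-devel gcc gcc-c++ make
cd /tmp
tar xf nginx-1.10.2.tar.gz -C /usr/src
cd /usr/src/nginx-1.10.2/
# Note the --with-stream module: it enables Nginx's layer-4 load balancing (added in Nginx 1.9.0).
# Without it we would have to proxy at layer 7, where Nginx itself would also need the CA certificates
# to connect to the apiserver, which is more trouble. So the L4 load balancing feature is used.
./configure --user=nginx --group=nginx --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module --with-stream
# Compile and install Nginx
make
make install
ln -s /usr/local/nginx/sbin/nginx /usr/local/sbin
rm -rf /tmp/nginx-1.10.2.tar.gz
#Configure Nginx for L4 load balancing
[root@ZhangSiming ~]# cd /usr/local/nginx/
[root@ZhangSiming nginx]# vim conf/nginx.conf
[root@ZhangSiming nginx]# sed -n '16,24p' conf/nginx.conf
stream {
    upstream k8s-apiserver {
        server 192.168.17.130:6443;
        server 192.168.17.131:6443;
    }
    server {
        listen 0.0.0.0:6443;
        proxy_pass k8s-apiserver;
    }
#Notes on the block above:
# - stream is a top-level block at the same level as http
# - the two upstream servers are the two Master apiservers on the secure port 6443 (the other Master components reach their local apiserver through the insecure port 8080)
# - listen on 0.0.0.0 because connections will later also arrive on the VIP
# - unlike proxy_pass in the http module, the upstream name here is written without http://
# - the TLS connection is passed through to the apiserver, so the apiserver certificate must list the LB addresses and the VIP, otherwise clients reject it
[root@ZhangSiming nginx]# nginx -s reload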
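Adding another Master later means adding another `server` line to the upstream. Here is a sketch that prints the stream block from a list of apiserver IPs, mirroring the layout above. `render_stream_block` is a hypothetical helper, and the port is passed in. The output belongs at the top level of nginx.conf, next to `http {}`, and `nginx -t` checks the file before reloading.

```shell
#!/bin/sh
# render_stream_block PORT MASTER_IP...  (hypothetical helper)
# Prints an nginx stream {} block that balances TCP PORT across the given apiservers.
render_stream_block() {
    port=$1
    shift
    printf 'stream {\n    upstream k8s-apiserver {\n'
    for ip in "$@"; do
        printf '        server %s:%s;\n' "$ip" "$port"
    done
    printf '    }\n    server {\n        listen 0.0.0.0:%s;\n        proxy_pass k8s-apiserver;\n    }\n}\n' "$port"
}

# Example: render_stream_block 6443 192.168.17.130 192.168.17.131
# then paste into nginx.conf and run: nginx -t && nginx -s reload
```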

- Changes on the Nodes

#Everything on the Nodes that connects to the Master must now connect to the Nginx L4 load balancer instead
[root@ZhangSiming cfg]# pwd
/opt/kubernetes/cfg
[root@ZhangSiming cfg]# grep 130 *
bootstrap.kubeconfig: server: https://192.168.17.130:6443
flanneld:FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.17.130:2379,https://192.168.17.131:2379,https://192.168.17.132:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"
kubelet.kubeconfig: server: https://192.168.17.130:6443
kube-proxy.kubeconfig: server: https://192.168.17.130:6443
#flanneld talks to etcd directly, not to the apiserver, so only the three kubeconfig files are edited
[root@ZhangSiming cfg]# vim bootstrap.kubeconfig
[root@ZhangSiming cfg]# vim kubelet.kubeconfig
[root@ZhangSiming cfg]# vim kube-proxy.kubeconfig
[root@ZhangSiming cfg]# grep 134 *
bootstrap.kubeconfig: server: https://192.168.17.134:6443
kubelet.kubeconfig: server: https://192.168.17.134:6443
kube-proxy.kubeconfig: server: https://192.168.17.134:6443
#Restart the services after the change
[root@ZhangSiming cfg]# systemctl restart kubelet
[root@ZhangSiming cfg]# systemctl restart kube-proxy
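The three `vim` edits can be scripted. Here is a sketch using GNU `sed -i` that rewrites only the `server: https://<old>:6443` lines of the three kubeconfig files and leaves flanneld untouched. `point_kubeconfigs` is a hypothetical helper. It assumes the files live in /opt/kubernetes/cfg unless another directory is given, and it skips files that do not exist.

```shell
#!/bin/sh
# point_kubeconfigs OLD_IP NEW_IP [DIR]  (hypothetical helper)
# Rewrites "server: https://OLD_IP:6443" to NEW_IP in the three kubeconfig files
# under DIR (default /opt/kubernetes/cfg). Requires GNU sed for -i.
point_kubeconfigs() {
    old=$1 new=$2 dir=${3:-/opt/kubernetes/cfg}
    # escape the dots so they match literally in the sed pattern
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    for f in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
        [ -f "$dir/$f" ] || continue
        sed -i "s#server: https://$old_re:6443#server: https://$new:6443#" "$dir/$f"
    done
}

# On each Node:
#   point_kubeconfigs 192.168.17.130 192.168.17.134 && systemctl restart kubelet kube-proxy
```

Calling it with `192.168.17.134 192.168.17.135` covers the later switch from the LB's physical IP to the VIP.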

- Deploy the backup load balancer (an identical standby server)

[root@ZhangSiming yum.repos.d]# vim nginx.repo
[root@ZhangSiming yum.repos.d]# cat nginx.repo
[nginx]
name=nginx.repo
baseurl=http://nginx.org/packages/centos/7/$basearch/
gpgcheck=0
enabled=1
[root@ZhangSiming yum.repos.d]# yum -y install nginx
#Install nginx from the official nginx.org yum repository
[root@ZhangSiming yum.repos.d]# nginx -V
nginx version: nginx/1.14.2
#Since Nginx 1.11.4 the stream module can also write access logs
#Edit the configuration file
[root@ZhangSiming yum.repos.d]# sed -n '12,23p' /etc/nginx/nginx.conf
stream {
    log_format main "$remote_addr--->$upstream_addr time:$time_local $status";
    access_log /var/log/nginx/k8s-access.log main;
    upstream k8s-apiserver {
        server 192.168.17.130:6443;
        server 192.168.17.131:6443;
    }
    server {
        listen 0.0.0.0:6443;
        proxy_pass k8s-apiserver;
    }
}
#listen on 0.0.0.0 because connections will later also arrive on the VIP
[root@ZhangSiming yum.repos.d]# nginx

- Make the LB highly available with Keepalived

#Keepalived configuration on the master LB
[root@ZhangSiming etc]# cat keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   # Addresses that receive notification mail
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   # Sender address
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}
# (the mail addresses above are not actually used)

# Script that checks nginx.
# Mind the whitespace and line breaks around these braces: this format cost me several hours
vrrp_script check_nginx {
    script "/etc/keepalived/nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface ens32
    virtual_router_id 51 # VRRP router ID; each instance has a unique one
    priority 100    # priority; the backup server uses 90
    advert_int 1    # VRRP advertisement (heartbeat) interval, 1 second by default
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.17.135/24
    }
    track_script {
        check_nginx
    }
}
[root@ZhangSiming etc]# cat /etc/keepalived/nginx.sh
#!/bin/bash
# If no nginx socket is found, stop keepalived so that the VIP moves to the backup
if [ $(netstat -antup | grep nginx | wc -l) -eq 0 ];then
    systemctl stop keepalived.service
fi
#Make the check script executable before starting keepalived
[root@ZhangSiming etc]# chmod +x /etc/keepalived/nginx.sh
[root@ZhangSiming etc]# systemctl start keepalived.service
#Keepalived configuration on the backup LB
[root@ZhangSiming etc]# cat keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   # Addresses that receive notification mail
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   # Sender address
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/keepalived/nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens32
    virtual_router_id 51 # must match the master; a mismatched ID leads to HA split brain
    priority 90     # priority; the backup server uses 90
    advert_int 1    # VRRP advertisement (heartbeat) interval, 1 second by default
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.17.135/24
    }
    track_script {
        check_nginx
    }
}
[root@ZhangSiming etc]# ls /etc/keepalived/nginx.sh
/etc/keepalived/nginx.sh
[root@ZhangSiming etc]# chmod +x /etc/keepalived/nginx.sh
[root@ZhangSiming etc]# systemctl start keepalived.service
[root@ZhangSiming keepalived]# ip addr | grep 192.168
inet 192.168.17.134/24 brd 192.168.17.255 scope global noprefixroute ens32
inet 192.168.17.135/24 scope global secondary ens32
#192.168.17.135 is our VIP (shown here on the master LB, 192.168.17.134)
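The two keepalived files differ only in `state` and `priority`. A mismatched `virtual_router_id` is exactly the split-brain mistake noted in the backup configuration. Here is a sketch that prints the `vrrp_instance` block for either role from one set of shared values, so the two LBs cannot drift apart. The interface, VIP and password are the values used in this guide. `render_vrrp_instance` is a hypothetical helper.

```shell
#!/bin/sh
# render_vrrp_instance ROLE  (hypothetical helper)
# Prints the vrrp_instance block for ROLE (MASTER or BACKUP).
# Everything except state and priority is shared between the two LBs.
render_vrrp_instance() {
    role=$1
    case "$role" in
        MASTER) prio=100 ;;
        BACKUP) prio=90 ;;
        *) echo "role must be MASTER or BACKUP" >&2; return 1 ;;
    esac
    vrid=51 iface=ens32 vip=192.168.17.135/24
    cat <<EOF
vrrp_instance VI_1 {
    state $role
    interface $iface
    virtual_router_id $vrid
    priority $prio
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip
    }
    track_script {
        check_nginx
    }
}
EOF
}
```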

- Connect the K8S cluster to the VIP

#On the Nodes, switch the connection from the LB's physical IP to the VIP
[root@ZhangSiming cfg]# grep 134 *
bootstrap.kubeconfig: server: https://192.168.17.134:6443
kubelet.kubeconfig: server: https://192.168.17.134:6443
kube-proxy.kubeconfig: server: https://192.168.17.134:6443
[root@ZhangSiming cfg]# vim bootstrap.kubeconfig
[root@ZhangSiming cfg]# vim kubelet.kubeconfig
[root@ZhangSiming cfg]# vim kube-proxy.kubeconfig
[root@ZhangSiming cfg]# systemctl restart kubelet
[root@ZhangSiming cfg]# systemctl restart kube-proxy
[root@ZhangSiming cfg]# grep 135 *
bootstrap.kubeconfig: server: https://192.168.17.135:6443
kubelet.kubeconfig: server: https://192.168.17.135:6443
kube-proxy.kubeconfig: server: https://192.168.17.135:6443

- Test high availability

#Stop nginx on the master LB
[root@ZhangSiming keepalived]# nginx -s stop
[root@ZhangSiming keepalived]# ip addr | grep 192.168
inet 192.168.17.134/24 brd 192.168.17.255 scope global noprefixroute ens32
#The VIP 192.168.17.135 is no longer on the master LB
#On a Node
[root@ZhangSiming cfg]# systemctl restart kubelet
#Follow the K8S access log on the backup LB
[root@ZhangSiming keepalived]# tail -f /var/log/nginx/k8s-access.log
192.168.17.132 192.168.17.131:6443 24/Feb/2019:18:08:27 +0800 200
192.168.17.132 192.168.17.131:6443 24/Feb/2019:18:08:28 +0800 200
192.168.17.132 192.168.17.131:6443 24/Feb/2019:18:08:28 +0800 200
#Check the Node status on the K8S Master
[root@ZhangSiming ~]# kubectl get node
NAME STATUS ROLES AGE VERSION
192.168.17.132 Ready <none> 3d17h v1.12.1
192.168.17.133 Ready <none> 3d16h v1.12.1
#The Nodes are still Ready: high availability works!
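During a failover test it helps to see which apiserver the backup LB is actually forwarding to. Here is a sketch that counts log lines per upstream. It accepts the space-separated layout visible in the tail output above (client, upstream, time, timezone, status). It also accepts lines in the configured `$remote_addr--->$upstream_addr time:...` format. `upstream_counts` is a hypothetical helper.

```shell
#!/bin/sh
# upstream_counts [LOG]  (hypothetical helper)
# Counts requests per upstream apiserver in the LB access log
# (default /var/log/nginx/k8s-access.log) and prints "upstream count" lines, sorted.
upstream_counts() {
    awk '{
            if (index($1, "--->")) { split($1, a, "--->"); u = a[2] }   # client--->upstream format
            else u = $2                                               # space-separated format
            n[u]++
         }
         END { for (u in n) print u, n[u] }' "${1:-/var/log/nginx/k8s-access.log}" | sort
}
```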

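The multi-Master high-availability K8S architecture is now complete. The deployment involves many steps, so work carefully, and check the logs as soon as something goes wrong.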