@zhangsiming65965

2019-10-29T01:48:18.000000Z

Kubernetes follow-up configuration

Kubernetes series

---Author:张思明 ZhangSiming

---Mail:siming_zhang@shannonai.com

---QQ:1030728296

1. Generate admin and nsadmin kubeconfigs with RBAC

- Generate the token for admin-config

Create a ServiceAccount, bind it directly to the cluster-admin ClusterRole, and then read the token that is generated for it.

```bash
#!/bin/bash
# Usage: bash k8stest-admin-generateconfig.sh
IP="nlb-k8s-test-apiserver-1f07f2e6c2c2b42c.elb.cn-northwest-1.amazonaws.com.cn"
KUBE_APISERVER="https://$IP:6443"
# Create the ServiceAccount and bind it to the built-in cluster-admin ClusterRole
kubectl create serviceaccount shannon-admin
kubectl create clusterrolebinding shannon-admin --clusterrole=cluster-admin --serviceaccount=default:shannon-admin
# Read the ServiceAccount's token Secret and decode the token
TOKEN=$(kubectl get secret $(kubectl get secrets | grep shannon-admin | awk '{print $1}') -o jsonpath='{.data.token}' | base64 -d)
echo "${TOKEN}"
```

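- Define the k8stest-admin ClusterRole and the namespace-admin role: the user gets full access to every resource in the namespaces they administer, can view all namespaces, can read Kubeapps AppRepositories, can create cluster-wide CRDs, and can manage cluster-wide ClusterRoles and ClusterRoleBindings.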

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: k8stest-admin
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "kubeapps.com"
  resources:
  - apprepositories
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "apiextensions.k8s.io"
  resources:
  - customresourcedefinitions
  verbs:
  - create
  - update
  - get
  - list
  - watch
  - delete
- apiGroups:
  - "rbac.authorization.k8s.io"
  resources:
  - clusterroles
  verbs:
  - create
  - update
  - get
  - list
  - watch
- apiGroups:
  - "rbac.authorization.k8s.io"
  resources:
  - clusterrolebindings
  verbs:
  - create
  - update
  - get
  - list
  - watch
```

- Using the ClusterRole and role above, generate the nsadmin kubeconfig for regular users

```bash
#!/bin/bash
# Usage: /bin/bash k8stest-namespaceadmin-generateconfig.sh test prod dev
IP="nlb-k8s-test-apiserver-1f07f2e6c2c2b42c.elb.cn-northwest-1.amazonaws.com.cn"
KUBE_APISERVER="https://$IP:6443"
USER=shannon
CLUSTER=shannontest
# Create one namespace per argument
for i in "$@"; do
  kubectl create namespace "$i"
done
# A single ServiceAccount in kube-system represents the user
kubectl create serviceaccount ${USER} --namespace kube-system
# Grant cluster-admin inside each namespace only (RoleBinding, not ClusterRoleBinding)
for i in "$@"; do
  kubectl create rolebinding k8stest-ns-$i --clusterrole=cluster-admin --serviceaccount=kube-system:${USER} --namespace "$i"
done
# Cluster-wide extras from the k8stest-admin ClusterRole
kubectl create clusterrolebinding k8stest-admin --clusterrole=k8stest-admin --serviceaccount=kube-system:${USER}
# Build the kubeconfig: one cluster, one context per namespace, one token user
kubectl config set-cluster ${CLUSTER} --insecure-skip-tls-verify=true --server=${KUBE_APISERVER} --kubeconfig=k8stest-admin.kubeconfig
for i in "$@"; do
  kubectl config set-context $i-admin@${CLUSTER} --cluster=${CLUSTER} --user=${USER} --namespace=$i --kubeconfig=k8stest-admin.kubeconfig
done
TOKEN=$(kubectl get secret -n kube-system $(kubectl get secrets -n kube-system | grep ${USER} | awk '{print $1}') -o jsonpath='{.data.token}' | base64 -d)
kubectl config set-credentials ${USER} --token=$TOKEN --kubeconfig=k8stest-admin.kubeconfig
# Default to the context of the first namespace given
kubectl config use-context $1-admin@${CLUSTER} --kubeconfig=k8stest-admin.kubeconfig
```
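The script hands every argument straight to `kubectl create namespace`, so a bad name only shows up as an API error partway through the run, after some objects already exist. A minimal sketch of a pre-flight check, assuming it is pasted near the top of the script; `valid_ns_name` and `check_ns_args` are hypothetical helpers, and the rule they enforce is the RFC 1123 label format Kubernetes uses for namespace names (lowercase alphanumerics and `-`, starting and ending with an alphanumeric, at most 63 characters).

```shell
#!/bin/bash
# Hypothetical helper: succeed if $1 is a valid namespace name
# (RFC 1123 label: [a-z0-9]([-a-z0-9]*[a-z0-9])?, at most 63 characters).
valid_ns_name() {
  # Use the C locale so [a-z] means ASCII lowercase letters only
  local LC_ALL=C
  local name=$1
  [ "${#name}" -le 63 ] || return 1
  [[ $name =~ ^[a-z0-9]([-a-z0-9]*[a-z0-9])?$ ]]
}

# Hypothetical pre-flight check: fail before any kubectl call if no
# namespace was given or any argument is not a valid namespace name.
check_ns_args() {
  if [ "$#" -eq 0 ]; then
    echo "usage: $0 NAMESPACE [NAMESPACE...]" >&2
    return 1
  fi
  local ns
  for ns in "$@"; do
    if ! valid_ns_name "$ns"; then
      echo "invalid namespace name: $ns" >&2
      return 1
    fi
  done
}
```

Placing `check_ns_args "$@" || exit 1` right after the shebang stops the script before it creates anything.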

2. Use kubernetes-dashboard v2.0

- Deploy dashboard-v2.0.yml:

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kube-system
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kube-system
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kube-system
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kube-system
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kube-system
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: kubernetes-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: kubernetes-metrics-scraper
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: kubernetes-metrics-scraper
    spec:
      containers:
        - name: kubernetes-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.0
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
# Allow anonymous users to reach the dashboard from outside the cluster and log in with a token
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-anonymous
rules:
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["https:kubernetes-dashboard:"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - nonResourceURLs: ["/ui", "/ui/*", "/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/*"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-anonymous
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard-anonymous
subjects:
  - kind: User
    name: system:anonymous
```

- Deploy metrics_server

kubernetes-dashboard v2.0 supports metrics_server as its source of resource metrics.

Deploying metrics_server with kubespray (the Deployment and its RBAC are set up in one go):

```shell
$ cat kubespray/inventory/aws-nx-k8s-test/group_vars/k8s-cluster/addons.yml
metrics_server_enabled: true
metrics_server_kubelet_insecure_tls: true
metrics_server_metric_resolution: 60s
metrics_server_kubelet_preferred_address_types: "InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP"
# Two images are required:
# 1. registry.cn-beijing.aliyuncs.com/shannonai-k8s/metrics-server-amd64:v0.3.3
# 2. registry.cn-beijing.aliyuncs.com/shannonai-k8s/addon-resizer:1.8.3
```

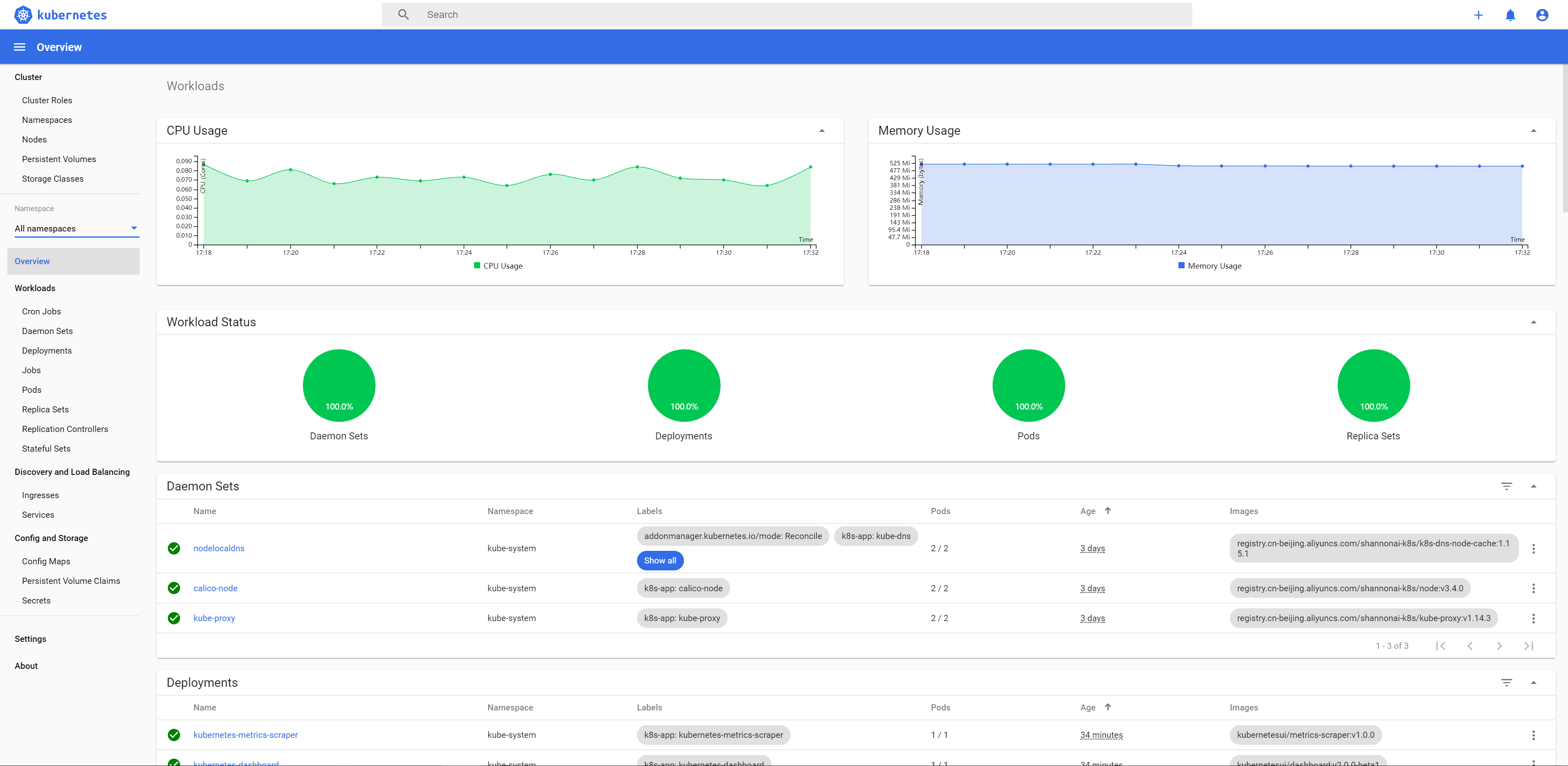

- Screenshot of the result

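2. Mount data from containers in the cluster to a local machine (via NFS)

1. Configure NFS

♥ Install the NFS server on the local machine (the one where you run kubectl)

```bash
#!/bin/bash
DIR="/path/to/mount-dir"   # placeholder: the directory to export over NFS
apt-get update
apt-get install -y nfs-kernel-server
mkdir -p "$DIR"
chown -R nobody:nogroup "$DIR"
chmod -R 777 "$DIR"
# Export the directory to all clients, read-write
echo "$DIR *(rw,sync,no_root_squash,no_subtree_check)" >> /etc/exports
exportfs -a
systemctl restart nfs-kernel-server
systemctl enable nfs-kernel-server
```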
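The `echo ... >> /etc/exports` line appends a duplicate entry every time the script is re-run. A minimal sketch of an idempotent variant, assuming the same export options as the script above; `add_export` is a hypothetical helper, and its optional second argument exists only so it can be tried on a scratch file instead of `/etc/exports`.

```shell
#!/bin/bash
# Hypothetical helper: append an NFS export line only if the exports file
# has no entry for the same directory yet, so re-runs do not duplicate it.
# $1 = directory to export, $2 = exports file (defaults to /etc/exports)
add_export() {
  local dir=$1
  local file=${2:-/etc/exports}
  local line="$dir *(rw,sync,no_root_squash,no_subtree_check)"
  touch "$file"
  # An entry exists if its first whitespace-separated field is the directory
  if awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$file"; then
    echo "export for $dir already present in $file"
  else
    echo "$line" >> "$file"
    echo "added export for $dir to $file"
  fi
}
```

In the setup script, `add_export "$DIR"` would replace the `echo ... >> /etc/exports` line, followed by `exportfs -a` as before.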

♥ Install the NFS client on every node in the Kubernetes cluster

```bash
apt-get install -y nfs-common
```

2. Create the PV

```yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: PVNAME
  labels:
    app.kubernetes.io/name: LABEL
spec:
  storageClassName: YOURPROJECT
  capacity:
    storage: 3Gi
  accessModes:
  - ReadWriteOnce
  # Recycle: once the PVC is deleted, the volume's data is wiped and the PV becomes available again; convenient for testing
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: NFSSERVER-IP
    path: DIR
```

3. Create the PVC

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: PVCNAME
  namespace: PVCNAMESPACE
spec:
  accessModes:
  - "ReadWriteOnce"
  resources:
    requests:
      storage: "3Gi"
  storageClassName: "YOURPROJECT"
  # Bind only to the PV carrying the matching label
  selector:
    matchLabels:
      app.kubernetes.io/name: LABEL
```
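Instead of hand-editing the placeholders (PVNAME, LABEL, YOURPROJECT, NFSSERVER-IP, DIR, PVCNAME, PVCNAMESPACE) in two manifests, a heredoc template can fill them in consistently, so the PV label and the PVC selector cannot drift apart. A sketch under the assumption that the 3Gi size, ReadWriteOnce mode and Recycle policy from the manifests above are kept; `render_nfs_pv_pvc` and its argument order are hypothetical, as are the sample values in the usage line.

```shell
#!/bin/bash
# Hypothetical helper: print the NFS PV and the PVC that binds to it
# (through the app.kubernetes.io/name label) as one multi-document YAML stream.
# Args: pv_name label storage_class nfs_server nfs_path pvc_name pvc_namespace
render_nfs_pv_pvc() {
  local pv=$1 label=$2 class=$3 server=$4 path=$5 pvc=$6 ns=$7
  cat <<EOF
kind: PersistentVolume
apiVersion: v1
metadata:
  name: ${pv}
  labels:
    app.kubernetes.io/name: ${label}
spec:
  storageClassName: ${class}
  capacity:
    storage: 3Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: ${server}
    path: ${path}
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ${pvc}
  namespace: ${ns}
spec:
  accessModes:
  - "ReadWriteOnce"
  resources:
    requests:
      storage: "3Gi"
  storageClassName: "${class}"
  selector:
    matchLabels:
      app.kubernetes.io/name: ${label}
EOF
}
```

Usage with made-up values: `render_nfs_pv_pvc demo-pv demo demo-project 10.0.0.5 /data/nfs demo-pvc default | kubectl apply -f -`.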

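4. Reference the PVC from a Pod controller

```yaml
# e.g. in a Deployment's Pod template
...
containers:
- ...
  volumeMounts:
  - name: MOUNTNAME
    mountPath: "CONTAINER_PATH"   # path inside the container
...
volumes:
- name: MOUNTNAME
  persistentVolumeClaim:
    claimName: PVCNAME
...
```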

3.coredns、dns-autoscaler、nodelocaldns详解

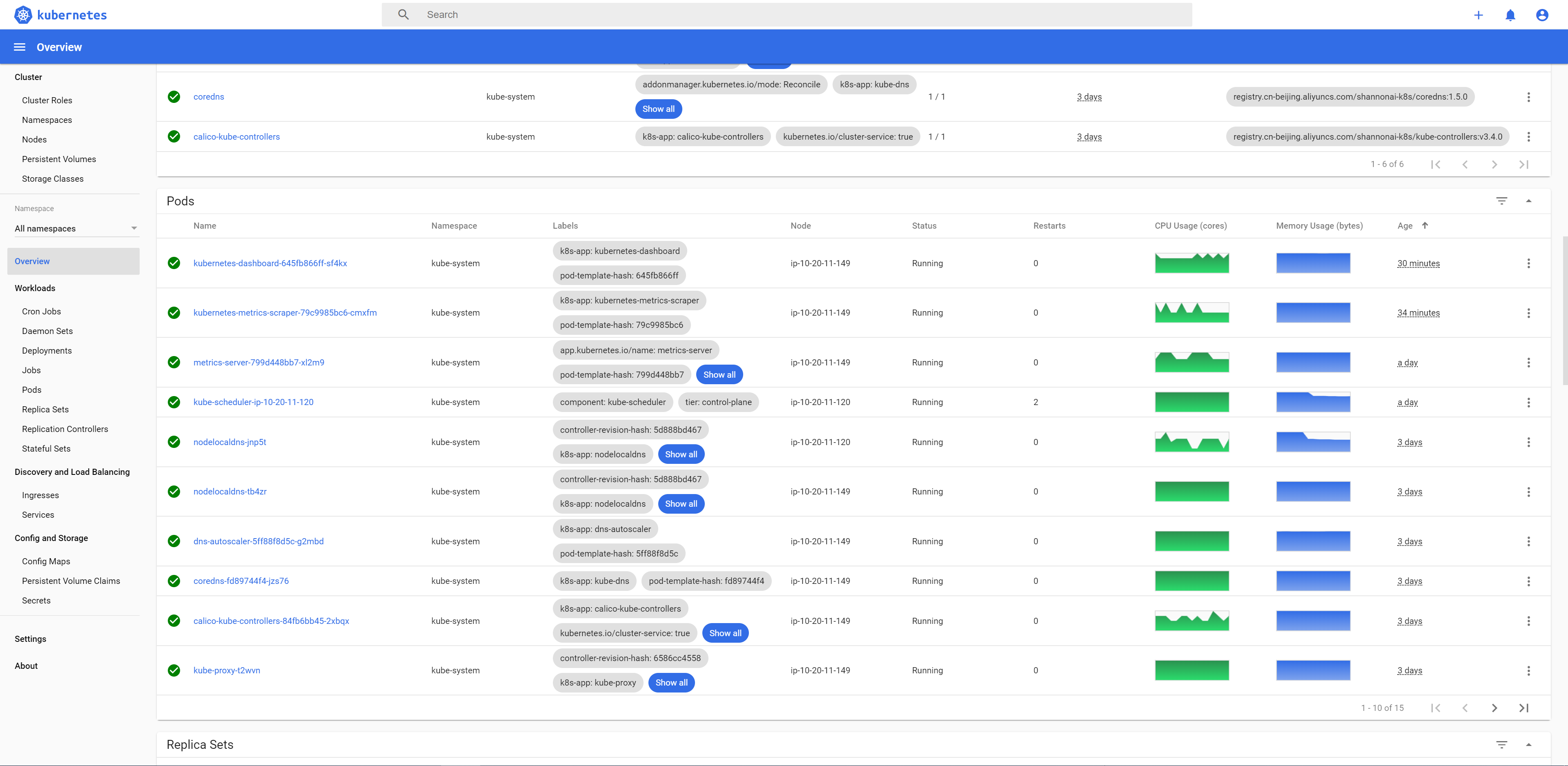

kubespray部署之后,会在集群中部署deployment:coredns、dns-autoscaler和daemonset:nodelocaldns,接下来我们针对这三个Pod进行研究与分析。

- Scaling CoreDNS

The usual approach, `kubectl scale -n kube-system replicaset coredns-fd89744f4 --replicas=2`, does not stick here: the coredns replica count is managed by dns-autoscaler, which sets it from its own ConfigMap.

```shell
$ kubectl get configmap dns-autoscaler -n kube-system
NAME             DATA   AGE
dns-autoscaler   1      8d
$ kubectl get configmap dns-autoscaler -n kube-system -o yaml
apiVersion: v1
data:
  linear: '{"coresPerReplica":256,"min":1,"nodesPerReplica":16,"preventSinglePointFailure":false}'
kind: ConfigMap
metadata:
  creationTimestamp: "2019-08-06T05:11:51Z"
  name: dns-autoscaler
  namespace: kube-system
  resourceVersion: "1101773"
  selfLink: /api/v1/namespaces/kube-system/configmaps/dns-autoscaler
  uid: b34ad82f-b808-11e9-bba7-025e0c22fba8
# Change "min" in linear to 2 to raise the minimum replica count to 2
```
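Because `linear` is a JSON string stored inside the ConfigMap, raising `min` means rewriting that string and sending it back as a patch. A minimal sketch using plain `sed`, assuming the compact single-line JSON shown above; `set_linear_min` and `linear_patch` are hypothetical helpers, and the patch they produce is meant for `kubectl patch ... --type merge -p`.

```shell
#!/bin/bash
# Hypothetical helper: replace the "min" field of the dns-autoscaler
# "linear" JSON string. Assumes compact JSON ("min":<digits>) and a numeric $2.
set_linear_min() {
  printf '%s' "$1" | sed -E 's/"min":[0-9]+/"min":'"$2"'/'
}

# Hypothetical helper: wrap a linear JSON string in a merge patch for the
# ConfigMap, escaping its double quotes so it stays a JSON string value.
linear_patch() {
  local escaped
  escaped=$(printf '%s' "$1" | sed 's/"/\\"/g')
  printf '{"data":{"linear":"%s"}}' "$escaped"
}

current='{"coresPerReplica":256,"min":1,"nodesPerReplica":16,"preventSinglePointFailure":false}'
linear_patch "$(set_linear_min "$current" 2)"
echo
# To apply it to the cluster:
# kubectl patch configmap dns-autoscaler -n kube-system --type merge \
#   -p "$(linear_patch "$(set_linear_min "$current" 2)")"
```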

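dns-autoscaler computes the number of coredns replicas as:

`replicas = max( ceil( cores * 1/coresPerReplica ) , ceil( nodes * 1/nodesPerReplica ) )`

So "coresPerReplica", "nodesPerReplica" and "min" in `linear` together determine the coredns replica count, with "min" acting as a lower bound. When the nodes have many cores (with the values above, more than 256/16 = 16 cores per node on average), the core term dominates; when they have fewer cores, the node term dominates. Our cluster currently has few nodes, so the computed value stays low and the replica count is governed by "min".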
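To check what a given cluster size yields, the formula can be evaluated directly. A sketch of the linear mode as described for cluster-proportional-autoscaler, the project dns-autoscaler runs: take the larger of the two ceilings, raise it to 2 when `preventSinglePointFailure` is true and there is more than one node, then raise it to `min`. `linear_replicas` is a hypothetical helper; it ignores the optional `max` bound because the ConfigMap above does not set one, and it computes the integer ceiling as `(a + b - 1) / b`.

```shell
#!/bin/bash
# Hypothetical helper: evaluate the dns-autoscaler "linear" formula.
# Args: cores nodes coresPerReplica nodesPerReplica min preventSinglePointFailure
linear_replicas() {
  local cores=$1 nodes=$2 cpr=$3 npr=$4 min=$5 prevent_spof=$6
  # Integer ceiling: ceil(a / b) == (a + b - 1) / b for positive integers
  local by_cores=$(( (cores + cpr - 1) / cpr ))
  local by_nodes=$(( (nodes + npr - 1) / npr ))
  local replicas=$(( by_cores > by_nodes ? by_cores : by_nodes ))
  # preventSinglePointFailure: at least 2 replicas once there is more than one node
  if [ "$prevent_spof" = "true" ] && [ "$nodes" -gt 1 ] && [ "$replicas" -lt 2 ]; then
    replicas=2
  fi
  # "min" is a lower bound on the result
  if [ "$replicas" -lt "$min" ]; then
    replicas=$min
  fi
  echo "$replicas"
}

# A hypothetical 5-node cluster with 8 cores per node, ConfigMap values above
linear_replicas 40 5 256 16 1 false   # prints 1
```

With `min` set to 2 the same call prints 2, which is exactly the effect of the ConfigMap edit described above.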

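- Configuring CoreDNS so the Ningxia cluster can reach services in the Beijing region

We want CoreDNS to meet three requirements:

1. Lookups for internal company services such as our GitLab and Harbor are resolved by the DNS server 172.31.15.168;

2. Lookups for www.baidu.com and other public names go to the default DNS;

3. Lookups for Kubernetes-internal services are resolved inside the cluster and reach those services directly.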

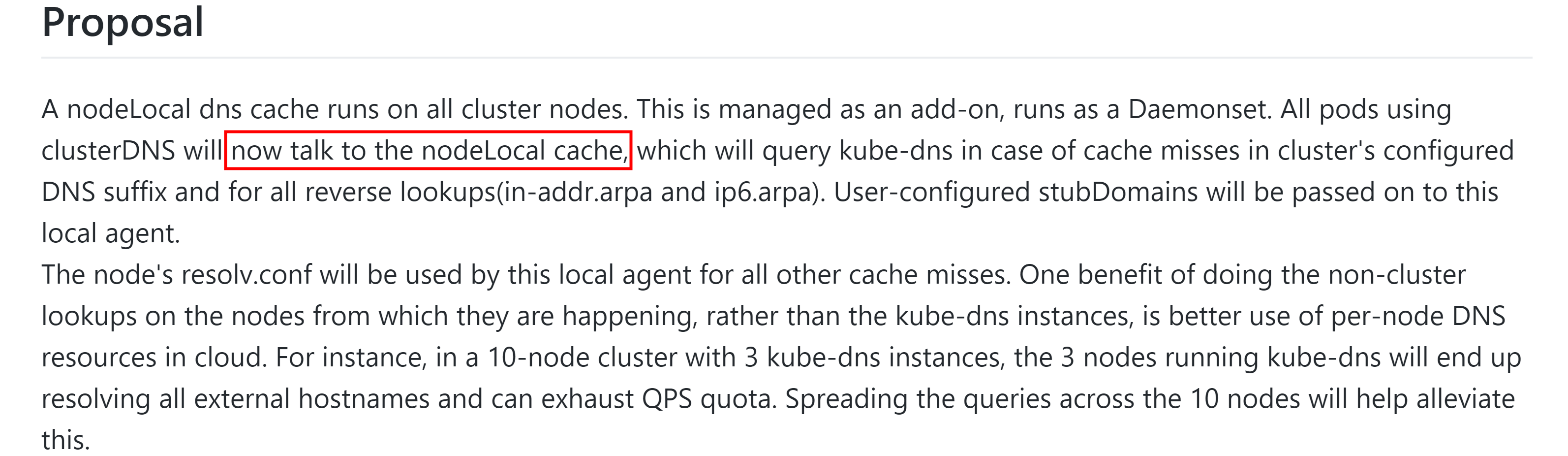

From the official documentation:

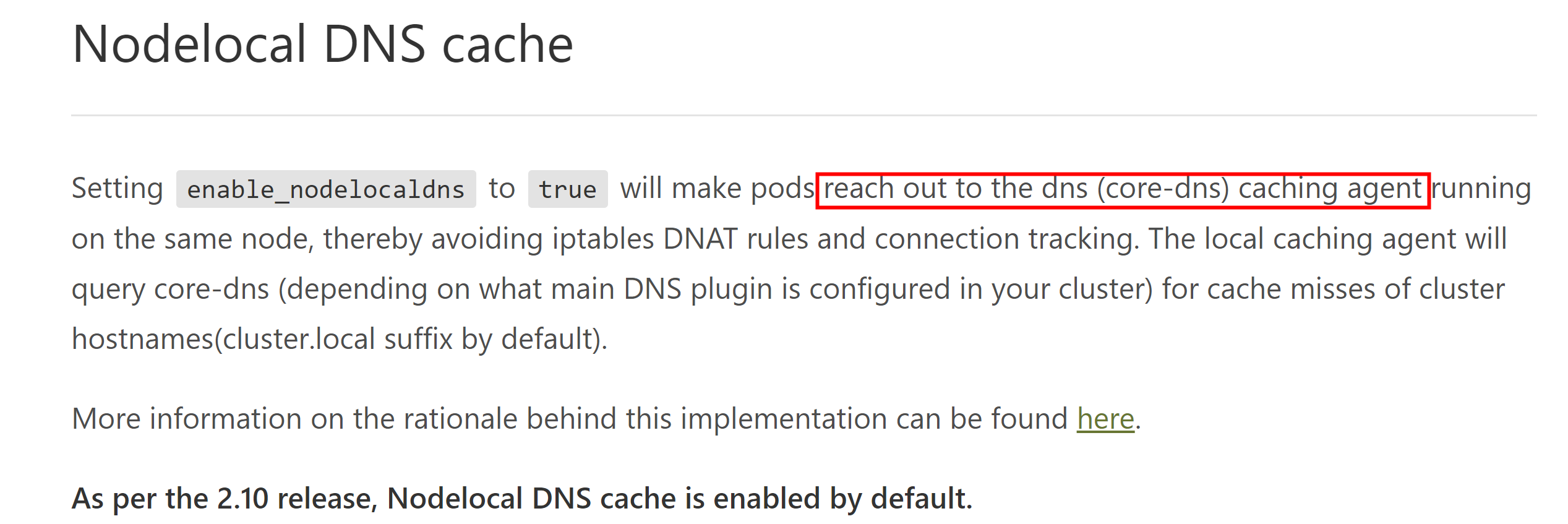

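In other words, once nodelocaldns is enabled, a DaemonSet runs a nodelocaldns instance on every node. Pods on a node no longer send their DNS queries across the cluster to CoreDNS; they query the nodelocaldns on the same node, which caches answers and forwards only misses upstream. This speeds up resolution and avoids some networking problems inside the cluster.

So to customize DNS, you have to edit the nodelocaldns ConfigMap.

```shell
$ kubectl get configmap nodelocaldns -n kube-system -o yaml
apiVersion: v1
data:
  Corefile: |
    cluster.local:53 {
        errors
        cache {
            success 9984 30
            denial 9984 5
        }
        reload
        loop
        bind 169.254.25.10
        forward . 172.60.0.3 {
            force_tcp
        }
        prometheus :9253
        # By default the 8080 after "health" is bound as a listening port on every node.
        # To use another port, change it here and then update the health-check port in the DaemonSet.
        health 169.254.25.10:8080
    }
    in-addr.arpa:53 {
        errors
        cache 30
        reload
        loop
        bind 169.254.25.10
        forward . 172.60.0.3 {
            force_tcp
        }
        prometheus :9253
    }
    ip6.arpa:53 {
        errors
        cache 30
        reload
        loop
        bind 169.254.25.10
        forward . 172.60.0.3 {
            force_tcp
        }
        prometheus :9253
    }
    .:53 {
        errors
        cache 30
        reload
        loop
        bind 169.254.25.10
        forward . /etc/resolv.conf
        prometheus :9253
    }
    shannonai.com:53 {
        errors
        cache 30
        reload
        loop
        bind 169.254.25.10
        forward . 172.31.15.168
        prometheus :9253
    }
# Note the added shannonai.com server block: every query for a name ending in "shannonai.com"
# is forwarded to the DNS server in its forward directive, 172.31.15.168 in this example.
```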
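Every additional internal zone needs its own server block like the shannonai.com one. A minimal sketch of a hypothetical helper, `stub_zone_block`, that prints such a block for a given zone, upstream DNS server and nodelocaldns bind address, using the same plugin list as the shannonai.com block above. It only generates text; the block still has to be pasted into the ConfigMap, for example with `kubectl edit configmap nodelocaldns -n kube-system`.

```shell
#!/bin/bash
# Hypothetical helper: print a nodelocaldns/CoreDNS server block that forwards
# every query under zone $1 to the upstream DNS server $2, bound to the
# node-local address $3 (defaults to the nodelocaldns IP used above).
stub_zone_block() {
  local zone=$1 upstream=$2 bind_ip=${3:-169.254.25.10}
  cat <<EOF
${zone}:53 {
    errors
    cache 30
    reload
    loop
    bind ${bind_ip}
    forward . ${upstream}
    prometheus :9253
}
EOF
}

# Reproduces the shannonai.com block from the ConfigMap above
stub_zone_block shannonai.com 172.31.15.168
```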

nodelocaldns references:

Kubespray's documentation on nodelocaldns

The official Kubernetes documentation on nodelocaldns (NodeLocal DNSCache)

The CoreDNS Corefile plugin manual

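Testing whether DNS resolution works

A test Deployment whose busybox image includes nslookup:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test
spec:
  replicas: 1
  # apps/v1 requires an explicit selector that matches the template labels
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: test
        image: harbor.shannonai.com/test/busybox
        command:
        - sleep
        - "36000"
        imagePullPolicy: IfNotPresent
      restartPolicy: Always
```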

To pull images that are blocked from mainland China: `curl -s https://zhangguanzhang.github.io/bash/pull.sh | bash -s -- IMAGE:TAG`

Run the test:

```shell
# Exec into a Pod in the cluster
$ kubectl get pods
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-85f4877f96-pbnhw   1/1     Running   0          19h
$ kubectl exec -it nginx-deployment-85f4877f96-pbnhw -- bash
# Test resolution of an in-cluster service
Pod$ nslookup kubernetes.default
Server:    169.254.25.10
Address:   169.254.25.10#53

Name:      kubernetes.default.svc.cluster.local
Address:   172.60.0.1
# Test resolution of a public name
Pod$ nslookup www.baidu.com
Server:    169.254.25.10
Address:   169.254.25.10#53

Non-authoritative answer:
www.baidu.com   canonical name = www.a.shifen.com.
Name:    www.a.shifen.com
Address: 220.181.38.150
Name:    www.a.shifen.com
Address: 220.181.38.149
# Test resolution of a Beijing intranet service
Pod$ nslookup git.shannonai.com
Server:    169.254.25.10
Address:   169.254.25.10#53

Name:    git.shannonai.com
Address: 172.31.51.21
```

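Appendix: /etc/resolv.conf inside a Pod explained

```shell
# kubespray defines the nodelocaldns IP address on line 136 of
# kubespray/inventory/aws-nx-k8s-prod/group_vars/k8s-cluster/k8s-cluster.yml:
nodelocaldns_ip: 169.254.25.10

Pod$ cat /etc/resolv.conf
nameserver 169.254.25.10
search default.svc.cluster.local svc.cluster.local cluster.local cn-northwest-1.compute.internal
options ndots:5
# "nameserver" is the nodelocaldns address.
# When a name contains fewer dots than ndots (5), the resolver first tries it with each search
# domain appended, one after another; this mainly serves in-cluster service names. Only when
# all of those fail is the name tried as-is, which is where custom rules such as the
# shannonai.com block in nodelocaldns take effect.
```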
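The ndots rule becomes concrete when you list the names the resolver will actually try, in order. A sketch that models glibc-style behaviour, assuming the search list and `ndots:5` shown above: a name with fewer dots than ndots is tried with each search domain first and as-is last, a name with at least ndots dots is tried as-is first, and a name ending in `.` is tried only as-is. `query_order` is a hypothetical helper for illustration, not part of any resolver.

```shell
#!/bin/bash
# Hypothetical helper modelling the resolv.conf search/ndots rules.
# Args: name ndots search-domain...
# Prints each fully qualified name the resolver would try, in order.
query_order() {
  local name=$1 ndots=$2
  shift 2
  # A trailing dot marks an absolute name: search domains are never applied
  if [[ $name == *. ]]; then
    echo "$name"
    return
  fi
  # Keep only the dots to count them
  local dots_only=${name//[!.]/}
  local d
  if [ "${#dots_only}" -ge "$ndots" ]; then
    echo "$name."
    for d in "$@"; do echo "$name.$d."; done
  else
    for d in "$@"; do echo "$name.$d."; done
    echo "$name."
  fi
}

search="default.svc.cluster.local svc.cluster.local cluster.local cn-northwest-1.compute.internal"
# git.shannonai.com has 2 dots (< 5): the four search domains come first.
# $search is left unquoted on purpose so it splits into separate domains.
query_order git.shannonai.com 5 $search
```

This also shows why a lookup of git.shannonai.com costs four extra queries before the shannonai.com block is reached; appending a trailing dot (`git.shannonai.com.`) skips them.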

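When a Pod is created, its default dnsPolicy is "ClusterFirst", so its "/etc/resolv.conf" points at the cluster DNS (here, the nodelocaldns address) and names are resolved through it first. To change what goes into a Pod's "/etc/resolv.conf" at startup, set the "dnsConfig" field of the Pod spec; for details see: Pod-dnsconfig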

4. Granting permissions to Tiller (Helm 2)

Install Helm 2:

```bash
#!/bin/bash
mkdir -pv helm && cd helm
wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz
tar xf helm-v2.11.0-linux-amd64.tar.gz
sudo mv linux-amd64/helm /usr/local/bin
rm -rf linux-amd64
```

Initialize Helm 2:

```bash
helm init --upgrade -i registry.cn-beijing.aliyuncs.com/shannonai-k8s/tiller:v2.13.1 --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts --tiller-namespace kube-system
```

Check Tiller's ServiceAccount:

```shell
$ kubectl get serviceaccount -n kube-system | grep tiller
tiller   1         3h21m
```

Grant Tiller RBAC permissions:

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system
```

Patch the Tiller Deployment so it runs under this ServiceAccount:

```bash
kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'
```

Allow a regular user to use the Tiller in the kube-system namespace:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: USER
  namespace: NAMESPACE
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: use-tiller
  namespace: kube-system
rules:
- apiGroups:
  - ""
  resources:
  - pods/portforward
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: use-tiller-binding
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: use-tiller
subjects:
- kind: ServiceAccount
  name: USER
  namespace: NAMESPACE
```

Then generate the kubeconfig for the regular user as in section 1.

5. Deploy Kubeapps

Deploy Kubeapps v1.4.2 from the official chart:

```shell
$ helm repo add bitnami https://charts.bitnami.com/bitnami
$ helm fetch bitnami/kubeapps --version 2.0.4
$ tar xf kubeapps-2.0.4.tgz
$ cd kubeapps/
$ vim values.yaml
...changes...
annotations:
  kubernetes.io/ingress.class: traefik-internal
...
hosts:
- name: kubeapps-nx.shannonai.com
  path: /
...
initialRepos:
- name: azure-mirror-stable
  url: http://mirror.azure.cn/kubernetes/charts/
- name: azure-mirror-incubator
  url: http://mirror.azure.cn/kubernetes/charts-incubator/
- name: bitnami
  url: https://charts.bitnami.com/bitnami
- name: shannonai
  url: https://chartmuseum.shannonai.com/
...end of changes...
# Deploy
$ helm install --name kubeapps-aws-nx --namespace ci-cd .
```

Access the dashboard:

6. Deploy Prometheus + Grafana

```shell
$ git clone ssh://git@git.shannonai.com:2222/shannon/charts.git
$ cd charts/prometheus-operator
$ helm repo add kube-state-metrics https://chartmuseum.shannonai.com/
$ helm repo add prometheus-node-exporter https://chartmuseum.shannonai.com/
$ helm repo add grafana https://chartmuseum.shannonai.com/
$ helm dep update
$ vim values.yaml
...changes: ingress.class, the three ingress hostnames, the LDAP host (set to "ldap.shannonai.com") and the two "storageClassName" values...
$ rm -rf templates/grafana/dashboards/ceph.yaml
$ vim templates/alertmanager/alert-dingtalk.yaml
...changes: the DingTalk alert webhook URL and the base64-encoded alert message template...
# Install
$ helm install --name prometheus-aws-nx --namespace monitor .
```

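Notes:

1. The ServiceMonitor's selector must match the labels of the target Service;

2. If a Prometheus target shows "0/0 up", one thing to try is replacing the YAML files under "templates/exporters/" with their latest versions;

3. The scheduler ServiceMonitor issue is still unresolved, but it matters little and can be ignored for now.

Access the dashboard: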

7. Deploy fluentd-elasticsearch

```shell
$ git clone ssh://git@git.shannonai.com:2222/shannon/charts.git
$ cd charts/fluentd-elasticsearch-nx
# Create a Secret holding the credentials of a user allowed to access AWS Elasticsearch
$ cat cretificate.yaml
# The Secret is of type Opaque, so the values of "ACCESS_KEY_ID" and "SECRET_ACCESS_KEY" must be base64-encoded: echo -n "xxxx" | base64
apiVersion: v1
data:
  ACCESS_KEY_ID: <base64-encoded ACCESS_KEY_ID>
  SECRET_ACCESS_KEY: <base64-encoded SECRET_ACCESS_KEY>
kind: Secret
metadata:
  labels:
    app: fluentd
  name: fluentd-aws-nx-es-logs-secret
  namespace: kube-system
type: Opaque
$ vim values.yaml
...changes...
Set the Elasticsearch endpoint, with the prefix set to fluentd
# Pass the credentials to the container as environment variables from the Secret
secret:
  ACCESS_KEY_ID:
    secret_name: fluentd-aws-nx-es-logs-secret
    secret_key: ACCESS_KEY_ID
  SECRET_ACCESS_KEY:
    secret_name: fluentd-aws-nx-es-logs-secret
    secret_key: SECRET_ACCESS_KEY
...changes...
# Create the "system-node-critical" and "system-cluster-critical" PriorityClasses; note that these two can only be referenced by resources in the kube-system namespace.
$ cat PriorityClass.yaml
# NOTE: "system-node-critical" and "system-cluster-critical" can only be used in ns kube-system
apiVersion: scheduling.k8s.io/v1beta1
kind: PriorityClass
metadata:
  name: system-node-critical
value: 2000001000
---
apiVersion: scheduling.k8s.io/v1beta1
kind: PriorityClass
metadata:
  name: system-cluster-critical
value: 2000000000
# Deploy
$ helm install --namespace kube-system --name fluentd-elasticsearch-nx .
```
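When filling in the Secret above, keep in mind that GNU coreutils `base64` wraps its output every 76 characters, so a long value encoded with `echo -n "xxxx" | base64` can end up split across lines. A minimal sketch of a hypothetical helper, `secret_data_lines`, that strips those line breaks and prints one `KEY: value` line per pair, ready to paste under `data:`. The key/value pair in the usage line is made up, not a real credential.

```shell
#!/bin/bash
# Hypothetical helper: print "KEY: <base64>" lines for a Secret's data field.
# Args: KEY VALUE [KEY VALUE ...]
# printf '%s' avoids a trailing newline in the encoded value, and
# tr -d '\n' removes the line wrapping GNU base64 adds every 76 characters.
secret_data_lines() {
  while [ "$#" -ge 2 ]; do
    printf '  %s: %s\n' "$1" "$(printf '%s' "$2" | base64 | tr -d '\n')"
    shift 2
  done
}

# Made-up sample values, not real credentials
secret_data_lines ACCESS_KEY_ID EXAMPLEKEYID SECRET_ACCESS_KEY example-secret
```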