@zhangsiming65965

2019-03-18T08:01:49.000000Z


ELK Log Analysis Platform (Deploying the ELK Stack on Separate Servers)

Message Queues and Database Caching

---Author:张思明 ZhangSiming

---Mail:1151004164@cnu.edu.cn

---QQ:1030728296

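If you have any questions about this technical document, feel free to add me on WeChat (zhangsiming422) or QQ (1030728296) so we can discuss and learn together!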

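I. Overview of the ELK Stack Log Collection Platform

1.1 Background

- To troubleshoot problems, developers often log in to the servers to read project logs

- The numbers of servers, projects, and log types keep growing

Traditionally, logs are analyzed directly in the log files with sed, grep, and awk. With large volumes of log data, however, this approach is tedious and time-consuming, and it rarely produces results efficiently or in an intuitive form. This is why a centralized logging system is built: it collects all logs in one place for analysis and makes log analysis much more efficient.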

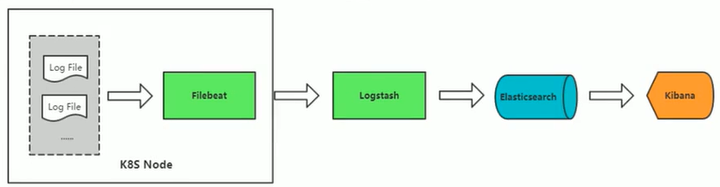
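For comparison, the traditional approach described above usually looks like the sketch below: a one-off awk/sort/uniq pipeline that counts requests per client IP in a single Nginx access log. The function name `top_ips` is hypothetical, and the sketch assumes the client address is the first field of each line (as in Nginx's default log format).

```shell
#!/bin/bash
# Hypothetical helper illustrating the "grep/awk by hand" approach:
# print the N most frequent client IPs in one Nginx access log.
# Assumes the client address is the first whitespace-separated field.
top_ips() {
    local log_file="$1"
    local limit="${2:-10}"
    # count occurrences of field 1, most frequent first
    awk '{ print $1 }' "$log_file" | sort | uniq -c | sort -rn | head -n "$limit"
}
```

Usage, e.g. `top_ips /usr/local/nginx/logs/access.log 5`. This works for one file on one host; once logs are spread across many servers, the same question means logging in to each machine in turn, which is the problem a centralized platform removes.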

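1.2 The Mainstream Filebeat + ELK Log Collection Flow

Logs are data, and data can produce value. Because the company needed to collect logs, a Filebeat + ELK Stack platform was introduced to collect and analyze them.

1. Filebeat collects log data (log files) from the production environment;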

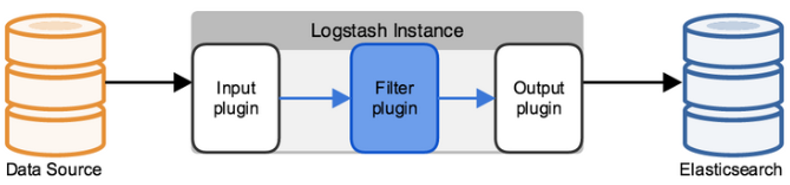

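2. Filebeat sends the data to Logstash, which filters and screens the logs. A Logstash pipeline has three parts, input, filter, and output, and its rich set of filter plugins can filter and refine the log data effectively;

3. Logstash writes the refined log data to Elasticsearch for storage;

4. Kibana reads the log data from Elasticsearch and displays it in a web interface for the relevant people to view and analyze.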

II. Deploying the ELK Stack Log Platform

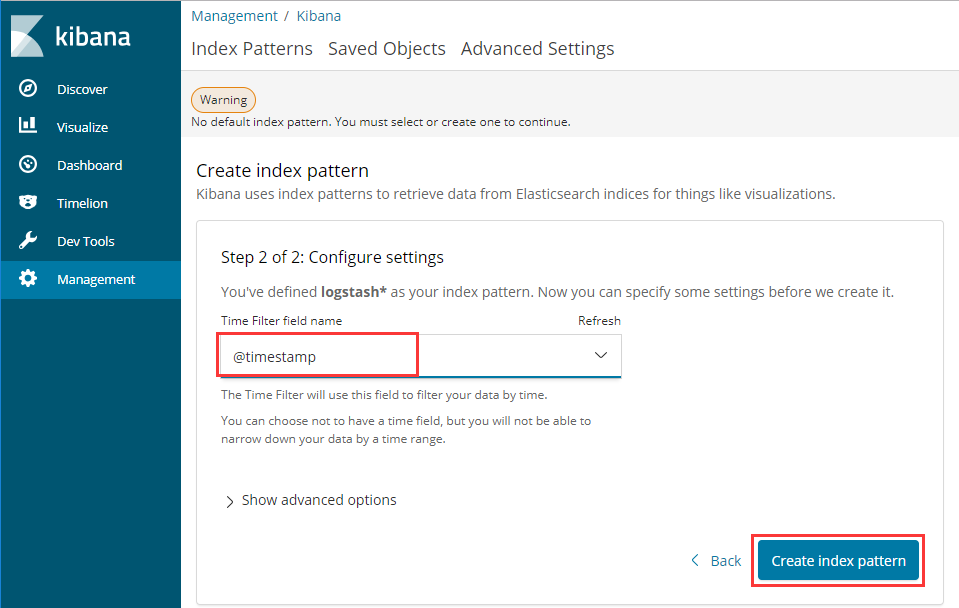

2.1 Deploying Kibana

Kibana presents the data. It has a web interface, but it stores no data itself; it only reads data from the database (Elasticsearch).

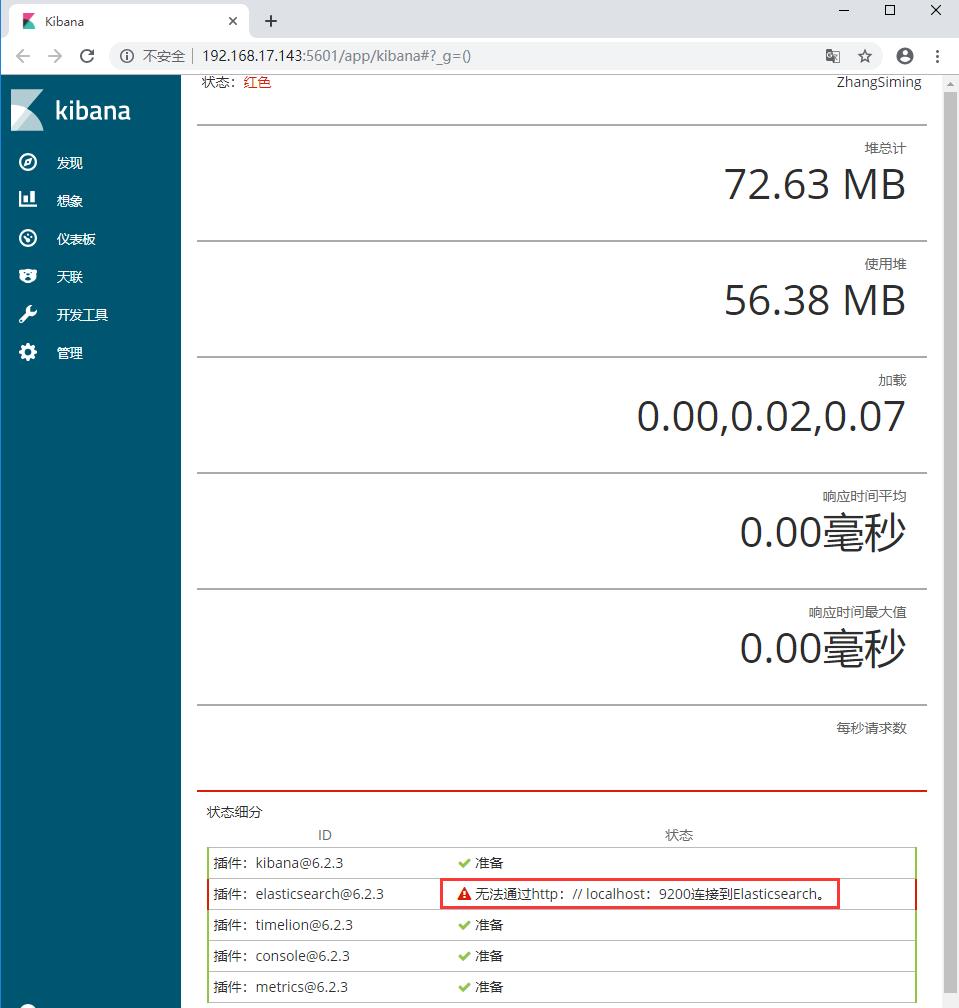

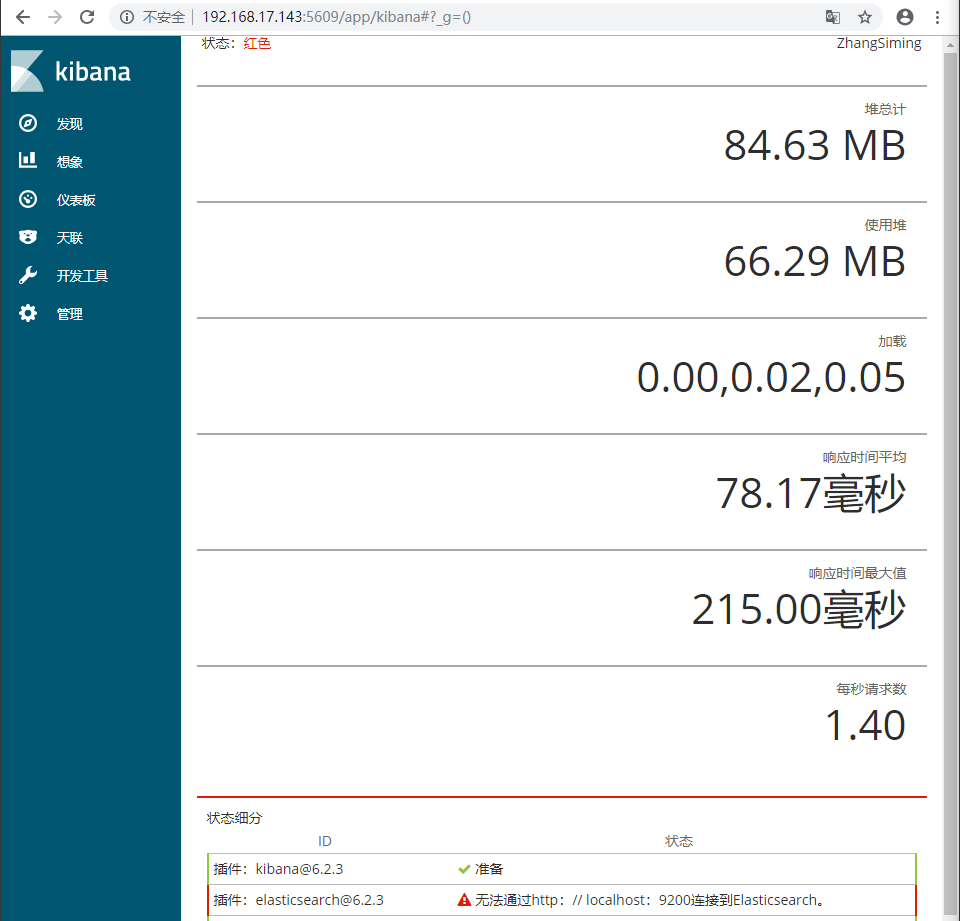

```shell
[root@ZhangSiming ~]# ls
anaconda-ks.cfg  common_install.sh  kibana-6.2.3-linux-x86_64.tar.gz
[root@ZhangSiming ~]# useradd -s /sbin/nologin -M elk    # create the elk program user
[root@ZhangSiming ~]# tar xf kibana-6.2.3-linux-x86_64.tar.gz -C /usr/local/
[root@ZhangSiming ~]# mv /usr/local/kibana-6.2.3-linux-x86_64/ /usr/local/kibana
[root@ZhangSiming ~]# cd /usr/local/kibana/
[root@ZhangSiming kibana]# vim config/kibana.yml
[root@ZhangSiming kibana]# sed -n '2p;7p' config/kibana.yml
server.port: 5601
server.host: "0.0.0.0"
[root@ZhangSiming kibana]# chown -R elk.elk /usr/local/kibana/
[root@ZhangSiming kibana]# vim /usr/local/kibana/bin/start.sh
[root@ZhangSiming kibana]# cat /usr/local/kibana/bin/start.sh
nohup /usr/local/kibana/bin/kibana >> /tmp/kibana.log 2>> /tmp/kibana.log &
# nohup ... & keeps the service from being stopped when the session ends or by Ctrl+C in the foreground
[root@ZhangSiming kibana]# chmod a+x /usr/local/kibana/bin/start.sh
[root@ZhangSiming kibana]# su -s /bin/bash elk '/usr/local/kibana/bin/start.sh'
[root@ZhangSiming kibana]# ps -elf | grep elk | grep -v grep
0 S elk 11918 1 27 80 0 - 316236 ep_pol 16:54 pts/0 00:00:03 /usr/local/kibana/bin/../node/bin/node --no-warnings /usr/local/kibana/bin/../src/cli
[root@ZhangSiming kibana]# netstat -antup | grep 5601
tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 11918/node
# Started successfully, listening on port 5601
[root@ZhangSiming kibana]# tail /tmp/kibana.log
{"type":"log","@timestamp":"2019-03-07T08:55:30Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"Unable to revive connection: http://localhost:9200/"}
{"type":"log","@timestamp":"2019-03-07T08:55:30Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"No living connections"}
{"type":"log","@timestamp":"2019-03-07T08:55:32Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"Unable to revive connection: http://localhost:9200/"}
{"type":"log","@timestamp":"2019-03-07T08:55:32Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"No living connections"}
{"type":"log","@timestamp":"2019-03-07T08:55:35Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"Unable to revive connection: http://localhost:9200/"}
{"type":"log","@timestamp":"2019-03-07T08:55:35Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"No living connections"}
{"type":"log","@timestamp":"2019-03-07T08:55:37Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"Unable to revive connection: http://localhost:9200/"}
{"type":"log","@timestamp":"2019-03-07T08:55:37Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"No living connections"}
{"type":"log","@timestamp":"2019-03-07T08:55:40Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"Unable to revive connection: http://localhost:9200/"}
{"type":"log","@timestamp":"2019-03-07T08:55:40Z","tags":["warning","elasticsearch","admin"],"pid":11918,"message":"No living connections"}
# Kibana cannot connect to Elasticsearch yet, because Elasticsearch has not been configured
```
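Instead of checking netstat by hand after every start, a small polling helper can confirm that a service is accepting TCP connections. This is a sketch that assumes bash, because it relies on bash's `/dev/tcp` pseudo-device (some bash builds disable it); `wait_for_port` is a hypothetical name and not part of Kibana.

```shell
#!/bin/bash
# wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Tries once per second to open a TCP connection to HOST:PORT through
# bash's /dev/tcp. Returns 0 as soon as a connection succeeds, 1 on timeout.
wait_for_port() {
    local host="$1" port="$2" timeout="${3:-30}" i
    for ((i = 0; i < timeout; i++)); do
        # The subshell opens fd 3 and closes it on exit; errors are discarded.
        if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
            return 0
        fi
        sleep 1
    done
    return 1
}
```

For example, `wait_for_port 127.0.0.1 5601 60 && echo "Kibana is up"`. A listening port only shows that the Kibana process is running; as the log above shows, Kibana can listen on 5601 while still failing to reach Elasticsearch.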

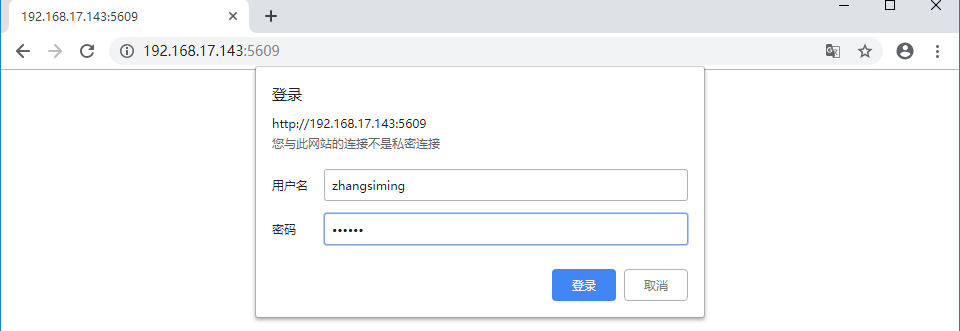

- Using Nginx to restrict access to Kibana

```shell
# Build Nginx from source
[root@ZhangSiming ~]# ls
anaconda-ks.cfg  kibana-6.2.3-linux-x86_64.tar.gz  nginx_install.sh
common_install.sh  nginx-1.10.2.tar.gz
[root@ZhangSiming ~]# cat nginx_install.sh
#!/bin/bash
yum -y install pcre-devel openssl-devel
tar xf nginx-1.10.2.tar.gz -C /usr/src/
cd /usr/src/nginx-1.10.2/
useradd -s /sbin/nologin -M nginx
./configure --user=nginx --group=nginx --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module
make && make install
ln -s /usr/local/nginx/sbin/* /usr/local/sbin/
[root@ZhangSiming ~]# sh nginx_install.sh
[root@ZhangSiming ~]# nginx -V
nginx version: nginx/1.10.2
built by gcc 4.8.5 20150623 (Red Hat 4.8.5-28) (GCC)
built with OpenSSL 1.0.2k-fips 26 Jan 2017
TLS SNI support enabled
configure arguments: --user=nginx --group=nginx --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module
[root@ZhangSiming ~]# cd /usr/local/nginx/
[root@ZhangSiming nginx]# vim conf/nginx.conf
[root@ZhangSiming nginx]# cat conf/nginx.conf
worker_processes  1;
events {
    worker_connections  1024;
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    keepalive_timeout  65;
    # Adjacent strings are joined without a separator, so each piece ends with a space
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';
    server {
        listen       5609;
        server_name  www.kibana.com;
        access_log /usr/local/nginx/logs/kibana_access.log main;
        error_log  /usr/local/nginx/logs/kibana_error.log error;
        location / {
            auth_basic "elk auth";
            auth_basic_user_file /usr/local/nginx/conf/htpasswd;
            proxy_pass http://127.0.0.1:5601;
            # Nginx can also restrict access by IP address with allow/deny
        }
    }
}
# Generate the password hash with openssl
[root@ZhangSiming nginx]# openssl passwd -crypt
Password:
Verifying - Password:
WFaQjx/45ljv.
[root@ZhangSiming nginx]# vim /usr/local/nginx/conf/htpasswd
[root@ZhangSiming nginx]# cat /usr/local/nginx/conf/htpasswd
zhangsiming:WFaQjx/45ljv.
# user name and hashed password
# Restrict Kibana to local access only, then restart Kibana
[root@ZhangSiming kibana]# vim config/kibana.yml
[root@ZhangSiming kibana]# sed -n '7p' config/kibana.yml
server.host: "127.0.0.1"
[root@ZhangSiming kibana]# ps -elf | grep kibana
0 S elk 11918 1 0 80 0 - 321323 ep_pol 16:54 pts/0 00:00:06 /usr/local/kibana/bin/../node/bin/node --no-warnings /usr/local/kibana/bin/../src/cli
0 R root 14502 1180 0 80 0 - 28176 - 17:16 pts/0 00:00:00 grep --color=auto kibana
[root@ZhangSiming kibana]# kill -9 11918
[root@ZhangSiming kibana]# su -s /bin/bash elk '/usr/local/kibana/bin/start.sh'
[root@ZhangSiming nginx]# nginx    # start Nginx
```
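The `openssl passwd -crypt` hash used above is traditional DES crypt, which only uses the first 8 characters of the password, and OpenSSL 3.0 and later no longer offer `-crypt`. Nginx's `auth_basic_user_file` also accepts Apache's MD5-based `$apr1$` format, so a sketch like the following can produce the htpasswd line instead. `make_htpasswd_line` is a hypothetical helper; it feeds the password to openssl on stdin (`-stdin`) so it never appears in openssl's command line.

```shell
#!/bin/bash
# make_htpasswd_line USER PASSWORD
# Prints "USER:<hash>" using an Apache MD5 ($apr1$) hash, a format that
# Nginx's auth_basic_user_file understands. openssl picks a random salt.
make_htpasswd_line() {
    local user="$1" password="$2" hash
    # printf is a shell builtin, so the password is only passed through the pipe
    hash="$(printf '%s\n' "$password" | openssl passwd -apr1 -stdin)" || return 1
    printf '%s:%s\n' "$user" "$hash"
}
```

Usage, e.g. `read -rs -p 'Password: ' pw; echo; make_htpasswd_line zhangsiming "$pw" >> /usr/local/nginx/conf/htpasswd`.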

2.2 Deploying Elasticsearch

Elasticsearch stores the log data and needs a JDK environment to run.

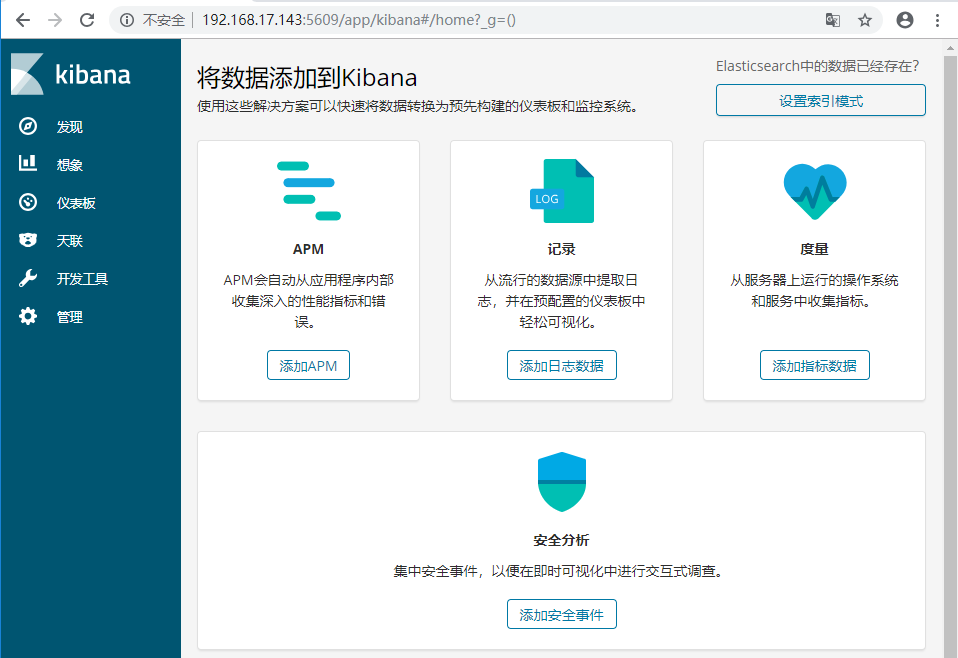

```shell
# Set up the JDK
[root@ZhangSiming ~]# ls
anaconda-ks.cfg  elasticsearch-6.2.3.tar.gz
common_install.sh  jdk-8u60-linux-x64.tar.gz
[root@ZhangSiming ~]# tar xf jdk-8u60-linux-x64.tar.gz -C /usr/local/
[root@ZhangSiming ~]# mv /usr/local/jdk1.8.0_60/ /usr/local/jdk
[root@ZhangSiming ~]# vim /etc/profile
[root@ZhangSiming ~]# tail -3 /etc/profile
export JAVA_HOME=/usr/local/jdk/
export PATH=$PATH:$JAVA_HOME/bin
export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$CLASSPATH
[root@ZhangSiming ~]# . /etc/profile
[root@ZhangSiming ~]# java -version
java version "1.8.0_60"
Java(TM) SE Runtime Environment (build 1.8.0_60-b27)
Java HotSpot(TM) 64-Bit Server VM (build 25.60-b23, mixed mode)
# Install Elasticsearch
[root@ZhangSiming ~]# tar xf elasticsearch-6.2.3.tar.gz -C /usr/local
[root@ZhangSiming ~]# mv /usr/local/elasticsearch-6.2.3/ /usr/local/elasticsearch
[root@ZhangSiming ~]# cd /usr/local/elasticsearch
[root@ZhangSiming elasticsearch]# ls
bin  lib  logs  NOTICE.txt  README.textile
config  LICENSE.txt  modules  plugins
[root@ZhangSiming elasticsearch]# vim config/elasticsearch.yml
[root@ZhangSiming elasticsearch]# sed -n '33p;37p;55p;59p' config/elasticsearch.yml
path.data: /usr/local/elasticsearch/data
path.logs: /usr/local/elasticsearch/logs
network.host: 0.0.0.0
http.port: 9200
# Kibana must connect to this address to read data from Elasticsearch
[root@ZhangSiming elasticsearch]# useradd -s /sbin/nologin elk
[root@ZhangSiming elasticsearch]# chown -R elk.elk /usr/local/elasticsearch/
[root@ZhangSiming elasticsearch]# vim config/jvm.options
[root@ZhangSiming elasticsearch]# sed -n '22,23p' config/jvm.options
-Xms100M
-Xmx100M
# Cap the JVM heap at 100 MB so the VM's memory is not exhausted; with -Xms equal to -Xmx the heap never grows or shrinks
[root@ZhangSiming elasticsearch]# vim bin/start.sh
[root@ZhangSiming elasticsearch]# cat bin/start.sh
/usr/local/elasticsearch/bin/elasticsearch -d >> /tmp/elasticsearch.log 2>> /tmp/elasticsearch.log
# Start script: -d runs Elasticsearch as a daemon
[root@ZhangSiming elasticsearch]# chmod a+x bin/start.sh
[root@ZhangSiming elasticsearch]# su -s /bin/bash elk '/usr/local/elasticsearch/bin/start.sh'
[root@ZhangSiming elasticsearch]# ps -elf | grep elk | grep -v grep
0 S elk 1408 1 35 80 0 - 537021 futex_ 17:43 pts/0 00:00:03 /usr/local/jdk//bin/java -Xms100M -Xmx100M -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch.T7KaOQi4 -XX:+HeapDumpOnOutOfMemoryError -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:logs/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/usr/local/elasticsearch -Des.path.conf=/usr/local/elasticsearch/config -cp /usr/local/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d
# Switch to the Kibana server and edit its configuration file
[root@ZhangSiming kibana]# vim config/kibana.yml
[root@ZhangSiming kibana]# sed -n '21p' config/kibana.yml
elasticsearch.url: "http://192.168.17.139:9200"
# Point Kibana at the Elasticsearch server
[root@ZhangSiming kibana]# ps -elf | grep kibana | grep -v grep
0 S elk 14524 1 0 80 0 - 321729 ep_pol 17:16 pts/0 00:00:07 /usr/local/kibana/bin/../node/bin/node --no-warnings /usr/local/kibana/bin/../src/cli
[root@ZhangSiming kibana]# kill -9 14524
[root@ZhangSiming kibana]# su -s /bin/bash elk '/usr/local/kibana/bin/start.sh'
```
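Elasticsearch (and Logstash later on) cannot start without a working JDK, so checking the Java version before installing saves a round trip. The sketch below uses hypothetical helper names and assumes bash; it parses the output of `java -version` (which Java prints on stderr) and handles both the old `1.8.0_60` style and the newer `11.0.2` style of version string. This article uses JDK 8u60.

```shell
#!/bin/bash
# java_major_version: reads `java -version` output on stdin and prints the
# major version ("1.8.0_60" -> 8, "11.0.2" -> 11). Prints nothing when no
# version string is found.
java_major_version() {
    sed -nE 's/.*version "([0-9]+)(\.([0-9]+))?.*/\1 \3/p' |
        head -n 1 |
        awk '{ print (($1 == 1) ? $2 : $1) }'   # "1 8" -> 8, "11 0" -> 11
}

# require_java_min N: succeeds if `java` is on PATH with major version >= N.
require_java_min() {
    local want="$1" have
    have="$(java -version 2>&1 | java_major_version)"
    [ -n "$have" ] && [ "$have" -ge "$want" ]
}
```

For example, after `. /etc/profile`, run `require_java_min 8 && echo "JDK OK"`.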

- Checking the Elasticsearch log file

```shell
[root@ZhangSiming elasticsearch]# tail -10 logs/elasticsearch.log
[2019-03-07T17:50:55,927][INFO ][o.e.b.BootstrapChecks ] [L_zSuq3] bound or publishing to a non-loopback address, enforcing bootstrap checks
[2019-03-07T17:50:55,945][ERROR][o.e.b.Bootstrap ] [L_zSuq3] node validation exception
[3] bootstrap checks failed
[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]
[5]: max number of threads [3802] for user [elk] is too low, increase to at least [4096]
[6]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
[2019-03-07T17:50:56,186][INFO ][o.e.n.Node ] [L_zSuq3] stopping ...
[2019-03-07T17:50:56,209][INFO ][o.e.n.Node ] [L_zSuq3] stopped
[2019-03-07T17:50:56,209][INFO ][o.e.n.Node ] [L_zSuq3] closing ...
[2019-03-07T17:50:56,237][INFO ][o.e.n.Node ] [L_zSuq3] closed
# Elasticsearch was forcibly shut down. When it binds to an address other than 127.0.0.1,
# it enforces bootstrap checks, so raise the limits and kernel parameter named in the log
[root@ZhangSiming elasticsearch]# vim /etc/security/limits.conf
[root@ZhangSiming elasticsearch]# sed -n '61,66p' /etc/security/limits.conf
* soft nofile 65536
* hard nofile 65536
elk soft nproc 4096
elk hard nproc 4096
elk soft memlock unlimited
elk hard memlock unlimited
[root@ZhangSiming elasticsearch]# vim /etc/sysctl.conf
[root@ZhangSiming elasticsearch]# sed -n '12p' /etc/sysctl.conf
vm.max_map_count=262144
[root@ZhangSiming elasticsearch]# sysctl -p
vm.max_map_count = 262144
[root@ZhangSiming elasticsearch]# vim config/elasticsearch.yml
[root@ZhangSiming elasticsearch]# sed -n '43,44p' config/elasticsearch.yml
bootstrap.memory_lock: true
bootstrap.system_call_filter: false
# bootstrap.memory_lock relies on the memlock limits set above
[root@ZhangSiming elasticsearch]# exit
# Restart Elasticsearch (limits.conf changes only apply to new login sessions)
[root@ZhangSiming ~]# su -s /bin/bash elk '/usr/local/elasticsearch/bin/start.sh'
[root@ZhangSiming ~]# ps -elf | grep elasticsearch | grep -v grep
0 S elk 2395 1 14 80 0 - 567583 futex_ 18:34 pts/0 00:00:15 /usr/local/jdk//bin/java -Xms100M -Xmx100M -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch.yF7qH5Hg -XX:+HeapDumpOnOutOfMemoryError -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:logs/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/usr/local/elasticsearch -Des.path.conf=/usr/local/elasticsearch/config -cp /usr/local/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d
[root@ZhangSiming ~]# netstat -antup | grep 9200
tcp6 0 0 :::9200 :::* LISTEN 2395/java
tcp6 0 0 192.168.17.139:9200 192.168.17.143:38452 ESTABLISHED 2395/java
tcp6 0 0 192.168.17.139:9200 192.168.17.143:38454 ESTABLISHED 2395/java
# Started successfully; the ESTABLISHED connections come from the Kibana host 192.168.17.143
```
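The three failures in the log above come down to three numbers that can be checked before Elasticsearch is started. The following sketch (bash; `check_min` and `es_preflight` are hypothetical names) compares the current values with the minimums quoted in that log. Run it in a shell of the user that starts Elasticsearch (elk here), because `ulimit` reports the limits of the calling shell.

```shell
#!/bin/bash
# check_min LABEL ACTUAL REQUIRED
# Prints OK or FAIL for one limit and returns 1 on FAIL.
# "unlimited" always passes.
check_min() {
    local label="$1" actual="$2" required="$3"
    if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ] 2>/dev/null; then
        echo "OK   $label = $actual (>= $required)"
        return 0
    fi
    echo "FAIL $label = $actual (needs >= $required)"
    return 1
}

# es_preflight: checks the three limits that failed in the log above.
# Thresholds are the ones reported by Elasticsearch 6.2.3 in this article.
es_preflight() {
    local rc=0
    check_min "max file descriptors (ulimit -n)" "$(ulimit -n)" 65536 || rc=1
    check_min "max user processes (ulimit -u)" "$(ulimit -u)" 4096 || rc=1
    check_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 262144 || rc=1
    return "$rc"
}
```

Usage, e.g. save it as `es_preflight.sh` and run `su -s /bin/bash elk -c '. ./es_preflight.sh; es_preflight'`.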

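2.3 Deploying Logstash

Logstash reads logs, parses them with regular expressions, and sends them to Elasticsearch. It has a large number of filter plugins and is very powerful.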

```shell
# Logstash also needs a JDK
[root@ZhangSiming ~]# tar xf jdk-8u60-linux-x64.tar.gz -C /usr/local/
[root@ZhangSiming ~]# mv /usr/local/jdk1.8.0_60/ /usr/local/jdk
[root@ZhangSiming ~]# vim /etc/profile
[root@ZhangSiming ~]# tail -3 /etc/profile
export JAVA_HOME=/usr/local/jdk/
export PATH=$PATH:$JAVA_HOME/bin
export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$CLASSPATH
[root@ZhangSiming ~]# . /etc/profile
[root@ZhangSiming ~]# java -version
java version "1.8.0_60"
Java(TM) SE Runtime Environment (build 1.8.0_60-b27)
Java HotSpot(TM) 64-Bit Server VM (build 25.60-b23, mixed mode)
# Install Logstash
[root@ZhangSiming ~]# tar xf logstash-6.2.3.tar.gz -C /usr/local/
[root@ZhangSiming ~]# mv /usr/local/logstash-6.2.3/ /usr/local/logstash
[root@ZhangSiming ~]# cd /usr/local/logstash
[root@ZhangSiming logstash]# vim config/jvm.options
[root@ZhangSiming logstash]# sed -n '6,7p' config/jvm.options
-Xms150M
-Xmx150M
# Fix the JVM heap at 150 MB (-Xms equal to -Xmx, so the heap does not resize)
# Logstash ships without logstash.conf; create it yourself
[root@ZhangSiming ~]# vim /usr/local/logstash/config/logstash.conf
[root@ZhangSiming ~]# cat /usr/local/logstash/config/logstash.conf
input {
    file {
        path => "/usr/local/nginx/logs/access.log"
    }
}
output {
    elasticsearch {
        hosts => ["http://192.168.17.139:9200"]
    }
}
[root@ZhangSiming ~]# useradd -s /sbin/nologin -M elk
[root@ZhangSiming ~]# vim /usr/local/logstash/bin/start.sh
[root@ZhangSiming ~]# cat /usr/local/logstash/bin/start.sh
#!/bin/bash
nohup /usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf >> /tmp/logstash.log 2>>/tmp/logstash.log &
# Logstash does not listen on any port here, so it is started as root rather than as elk
[root@ZhangSiming ~]# chmod a+x /usr/local/logstash/bin/start.sh
[root@ZhangSiming ~]# /usr/local/logstash/bin/start.sh
[root@ZhangSiming ~]# ps -ef | grep logstash
root 3935 1 5 19:08 pts/0 00:00:00 /usr/local/jdk//bin/java -Xms150M -Xmx150M -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.threshold=0 -XX:+HeapDumpOnOutOfMemoryError -Djava.security.egd=file:/dev/urandom -cp /usr/local/logstash/logstash-core/lib/jars/animal-sniffer-annotations-1.14.jar:/usr/local/logstash/logstash-core/lib/jars/commons-compiler-3.0.8.jar:/usr/local/logstash/logstash-core/lib/jars/error_prone_annotations-2.0.18.jar:/usr/local/logstash/logstash-core/lib/jars/google-java-format-1.5.jar:/usr/local/logstash/logstash-core/lib/jars/guava-22.0.jar:/usr/local/logstash/logstash-core/lib/jars/j2objc-annotations-1.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-annotations-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-core-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-databind-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-dataformat-cbor-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/janino-3.0.8.jar:/usr/local/logstash/logstash-core/lib/jars/javac-shaded-9-dev-r4023-3.jar:/usr/local/logstash/logstash-core/lib/jars/jruby-complete-9.1.13.0.jar:/usr/local/logstash/logstash-core/lib/jars/jsr305-1.3.9.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-api-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-core-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-slf4j-impl-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/logstash-core.jar:/usr/local/logstash/logstash-core/lib/jars/slf4j-api-1.7.25.jar org.logstash.Logstash -f /usr/local/logstash/config/logstash.conf
root 3960 1191 0 19:08 pts/0 00:00:00 grep --color=auto logstash
```

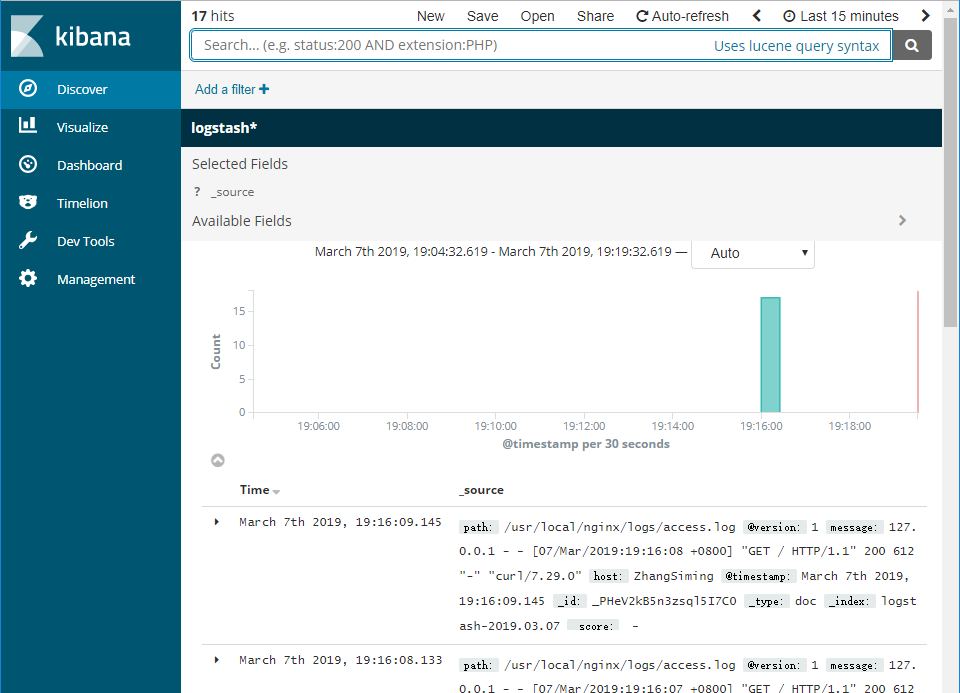

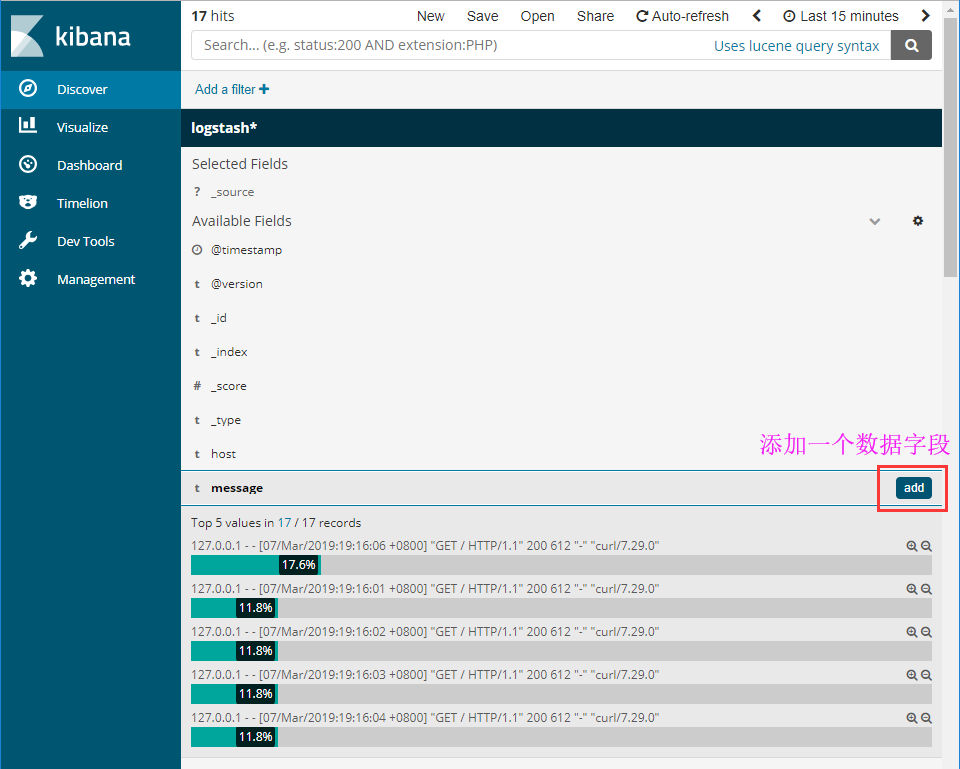

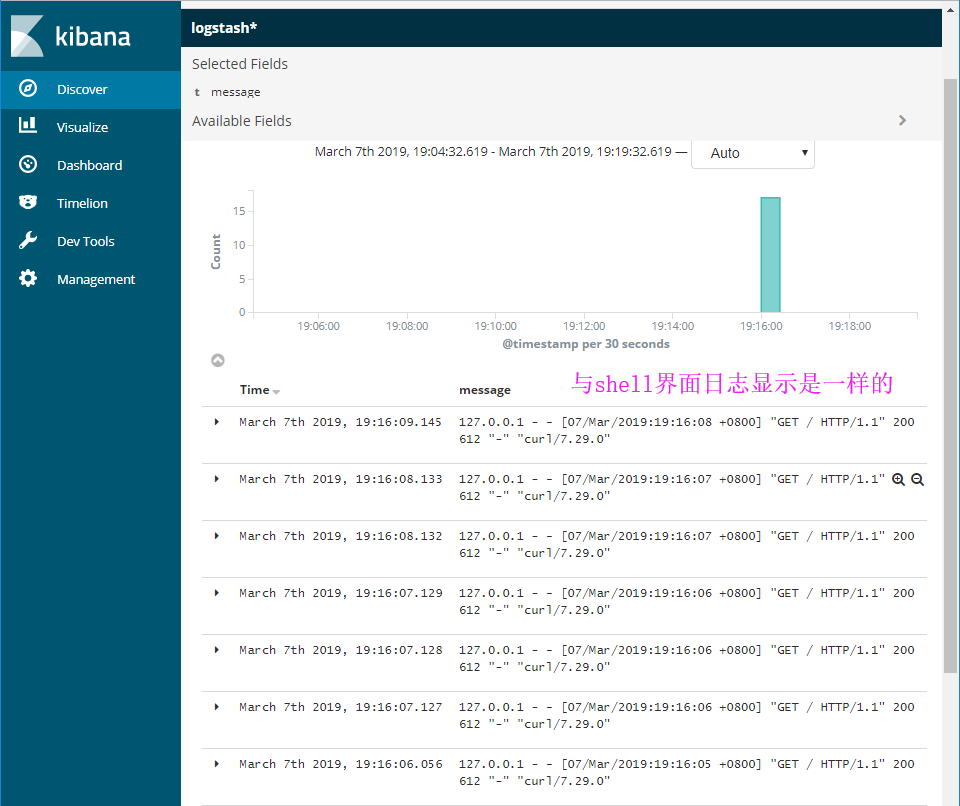

```shell
[root@ZhangSiming logstash]# tail /usr/local/nginx/logs/access.log
127.0.0.1 - - [07/Mar/2019:19:16:04 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:04 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:05 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:05 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:06 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:06 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:06 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:07 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:07 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
127.0.0.1 - - [07/Mar/2019:19:16:08 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"
```

Logstash is deployed successfully, which completes the ELK Stack deployment.
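To confirm that the events actually reached Elasticsearch, the `_cat/indices` API can be queried from any host that can reach port 9200. By default the Logstash elasticsearch output writes to daily `logstash-YYYY.MM.dd` indices, so the hypothetical helper below filters the `_cat/indices?v` table down to those indices and their document counts. It assumes the Elasticsearch 6.x column order shown in the comment.

```shell
#!/bin/bash
# list_logstash_indices: reads the output of `GET _cat/indices?v` on stdin and
# prints "<index> <docs.count>" for each index whose name starts with "logstash-".
# Column positions assume the Elasticsearch 6.x layout:
#   health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
list_logstash_indices() {
    awk 'NR > 1 && $3 ~ /^logstash-/ { print $3, $7 }'
}
```

Usage: `curl -s 'http://192.168.17.139:9200/_cat/indices?v' | list_logstash_indices`. Repeating the curl test requests against Nginx should make the document count grow.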

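III. Using Logstash in Detail (Part 1)

3.1 How Logstash Works

Logstash collects, processes, and outputs logs as a pipeline, much like the shell pipeline xxx|ccc|ddd: the output of xxx is passed to ccc, and the output of ccc is passed to ddd.

- Input ---> filter (optional) ---> output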
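The pipeline analogy can be made concrete with three shell functions. This only illustrates the data flow and is not how Logstash is implemented: each stage reads the previous stage's output as a stream, just as events move from input to filter to output, and leaving out the filter stage still gives a working pipeline. All function names are hypothetical.

```shell
#!/bin/bash
# Illustration only: a three-stage shell pipeline mirroring
# Logstash's input -> filter -> output flow.

# input stage: read raw lines from a file (like the file input plugin)
stage_input() { cat "$1"; }

# filter stage: rewrite lines that start with an IPv4-looking address into
# "ip=<address>" (a tiny stand-in for grok); other lines pass through unchanged
stage_filter() { sed -E 's/^([0-9]{1,3}(\.[0-9]{1,3}){3}) .*/ip=\1/'; }

# output stage: write events to stdout (like the stdout output plugin)
stage_output() { cat; }

# run_pipeline FILE [nofilter]: the filter stage is optional, as in Logstash
run_pipeline() {
    if [ "$2" = "nofilter" ]; then
        stage_input "$1" | stage_output
    else
        stage_input "$1" | stage_filter | stage_output
    fi
}
```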

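3.2 Common Options

- -f: specifies the Logstash configuration file; Logstash is configured according to that file;

- -e: followed by a string that is used as the Logstash configuration (with an empty string "", stdin is used as the input and stdout as the output by default);

- -l: where Logstash writes its own logs (in Logstash 6.x, -l is short for --path.logs and takes a directory);

- -t: tests whether the configuration file is valid, then exits.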
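The -f and -t options combine naturally: validate a configuration with -t before (re)starting Logstash, so a typo does not leave the pipeline down. A minimal sketch, assuming the paths used in this article (/usr/local/logstash and config/logstash.conf, overridable through the hypothetical LOGSTASH_HOME and CONF variables) and the same log file as the article's start.sh; it relies on Logstash exiting with a non-zero status when the test fails.

```shell
#!/bin/bash
# Hypothetical wrapper: check the configuration with -t, then start Logstash with -f.
LOGSTASH_HOME="${LOGSTASH_HOME:-/usr/local/logstash}"
CONF="${CONF:-$LOGSTASH_HOME/config/logstash.conf}"

safe_start() {
    # -t tests the configuration file and exits; non-zero status = invalid config
    if ! "$LOGSTASH_HOME/bin/logstash" -f "$CONF" -t; then
        echo "config test failed for $CONF; not starting Logstash" >&2
        return 1
    fi
    # same start command as bin/start.sh, running in the background
    nohup "$LOGSTASH_HOME/bin/logstash" -f "$CONF" >> /tmp/logstash.log 2>&1 &
}
```

For a quick experiment without any file, -e works on its own, e.g. `bin/logstash -e 'input { stdin {} } output { stdout {} }'`.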

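3.3 The Logstash Configuration File

Logstash processing goes through three stages, and the configuration file likewise consists of three sections: input, filter, and output.

```
# Configuration with several input sources
input {
    file { path => "/var/log/messages" type => "syslog" }
    file { path => "/var/log/apache/access.log" type => "apache" }
}
```

3.4 Testing Log Field Extraction with Logstash Regular Expressions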

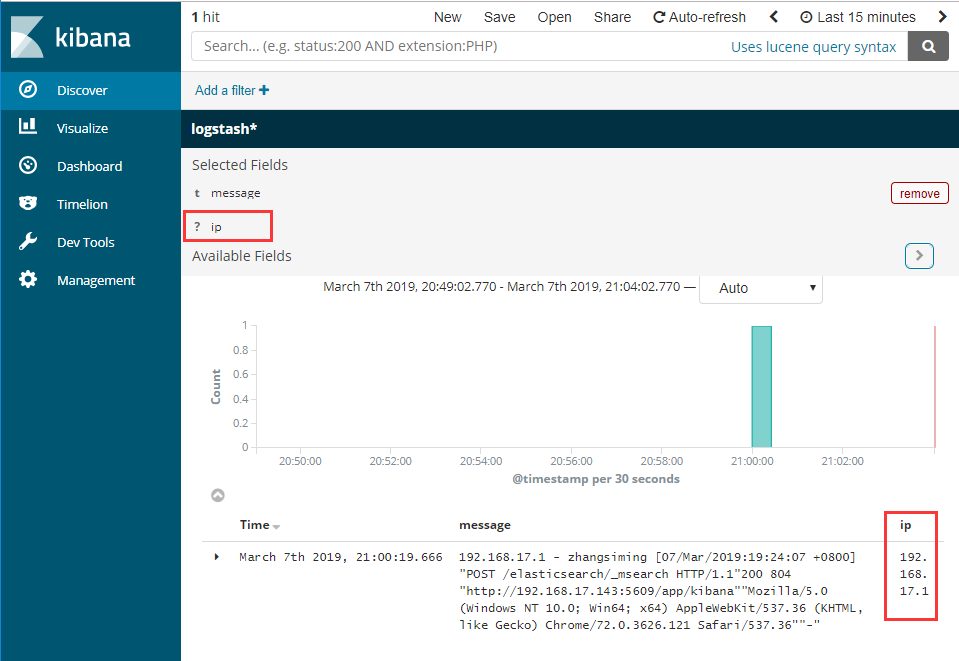

```shell
# Edit the Logstash configuration file
[root@ZhangSiming logstash]# vim config/logstash.conf
[root@ZhangSiming logstash]# cat config/logstash.conf
input {
    stdin {}    # read data from standard input
}
filter {
    grok {
        match => {
            "message" => '(?<ip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}).*'
        }
    }
}
output {
    elasticsearch {    # events go to every output; stdout{} below is only for testing
        hosts => ["http://192.168.17.139:9200"]
    }
    stdout {    # also print events to the screen
        codec => rubydebug    # for testing the regex: prints the grok captures to the screen
    }
}
# Restart Logstash
[root@ZhangSiming logstash]# kill -9 3935
[root@ZhangSiming logstash]# /usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf
192.168.17.1 - zhangsiming [07/Mar/2019:19:24:07 +0800] "POST /elasticsearch/_msearch HTTP/1.1"200 804 "http://192.168.17.143:5609/app/kibana""Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36""-"
{
    "message" => "192.168.17.1 - zhangsiming [07/Mar/2019:19:24:07 +0800] \"POST /elasticsearch/_msearch HTTP/1.1\"200 804 \"http://192.168.17.143:5609/app/kibana\"\"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36\"\"-\"",
    "host" => "ZhangSiming",
    "ip" => "192.168.17.1",    # the IP was extracted successfully
    "@version" => "1",
    "@timestamp" => 2019-03-07T13:00:19.666Z
}
# Comment out stdout{} so events only go to Elasticsearch
[root@ZhangSiming logstash]# vim config/logstash.conf
[root@ZhangSiming logstash]# tail -4 config/logstash.conf
# stdout {
# codec => rubydebug
# }
}
[root@ZhangSiming logstash]# bin/start.sh
```
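Before restarting Logstash, a grok expression can be tried out quickly in the shell. The sketch below runs the same IPv4 expression through `grep -oE` and keeps only the first (leftmost) hit, mirroring the unanchored grok match; this matters here because the sample line also contains the Kibana host's address in the referer. grep's ERE syntax differs from grok's Oniguruma regexes in places (ERE has no named groups, for instance), so this is a rough check rather than an exact emulation. `extract_ip` is a hypothetical name.

```shell
#!/bin/bash
# extract_ip LINE: print the first IPv4-looking token in a log line, like the grok
# pattern (?<ip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}).*
# Prints nothing if the line contains no such token.
extract_ip() {
    printf '%s\n' "$1" |
        grep -oE '[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}' |
        head -n 1
}
```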

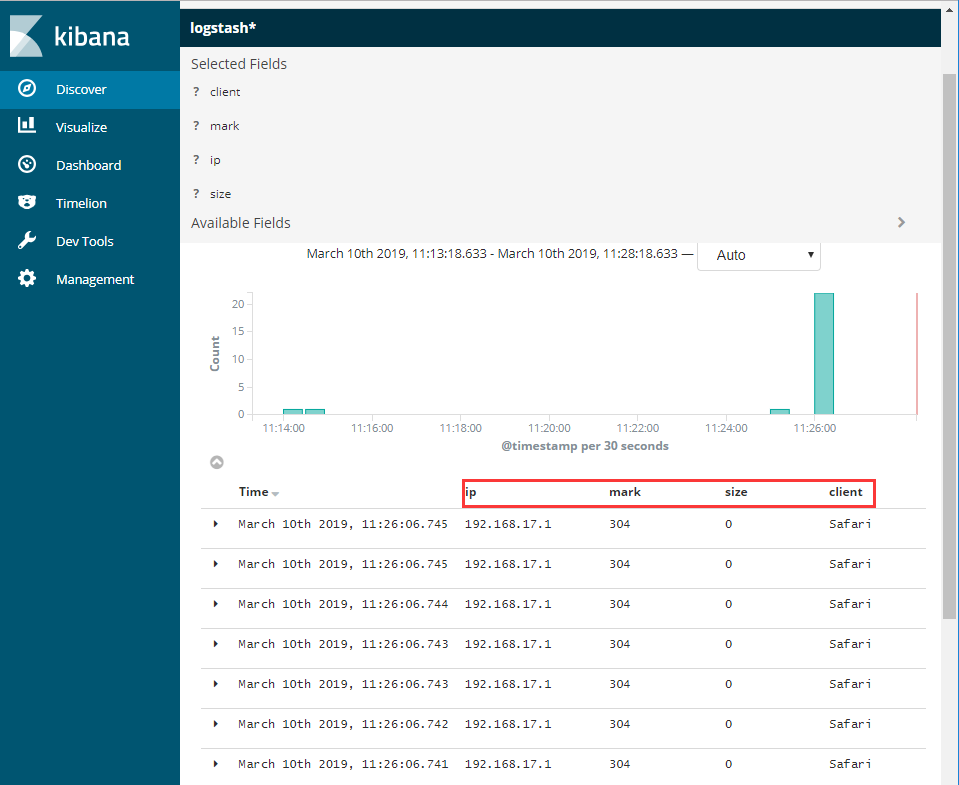

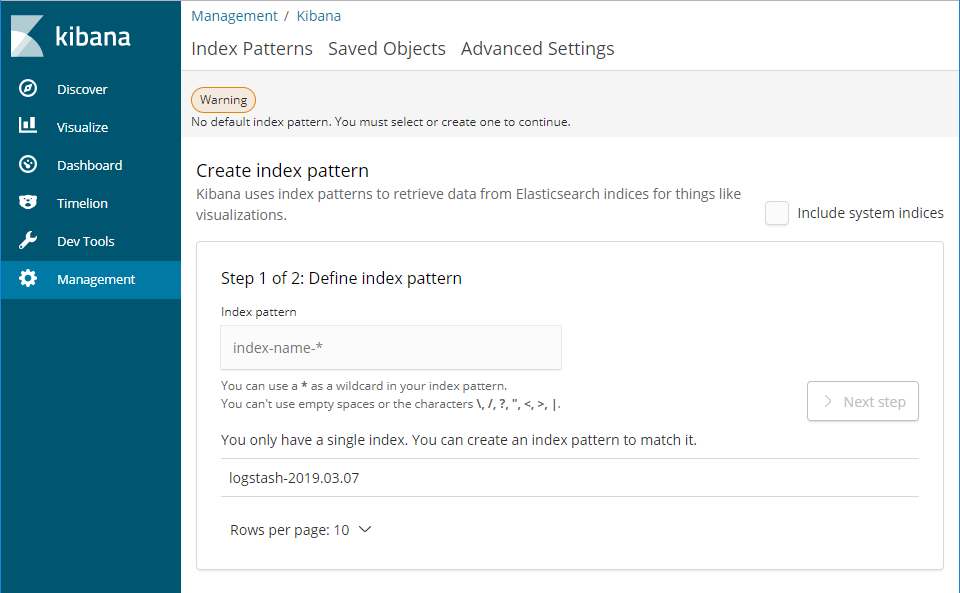

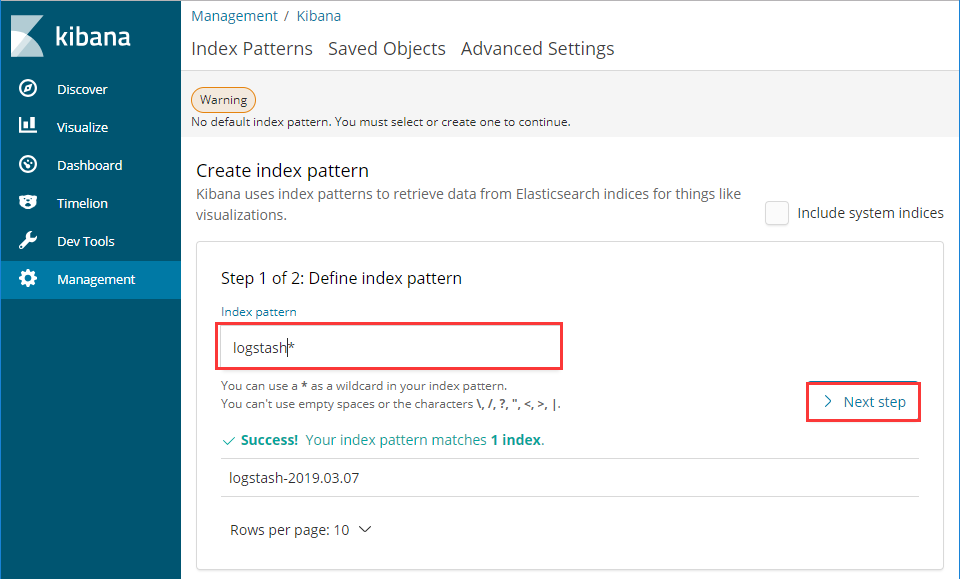

```shell
# Edit the Logstash configuration again to define several fields
[root@ZhangSiming logstash]# cat config/logstash.conf
input {
    file {
        path => "/usr/local/nginx/logs/access.log"
    }
}
filter {
    grok {
        # ip = client address, mark = HTTP status code, size = response body bytes,
        # client = the word after "...121 " in the user agent
        match => {
            "message" => '(?<ip>[0-9.]+) .*HTTP/1.1" (?<mark>[0-9]+) (?<size>[0-9]+) ".*121 (?<client>[a-zA-Z]+).*'
        }
    }
}
output {
    elasticsearch {
        hosts => ["http://192.168.17.139:9200"]
    }
}
# Restart Logstash
[root@ZhangSiming logstash]# ps -elf | grep logstash
0 S root 18003 1 25 80 0 - 583828 futex_ 21:11 pts/0 00:01:43 /usr/local/jdk//bin/java -Xms150M -Xmx150M -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.threshold=0 -XX:+HeapDumpOnOutOfMemoryError -Djava.security.egd=file:/dev/urandom -cp /usr/local/logstash/logstash-core/lib/jars/animal-sniffer-annotations-1.14.jar:/usr/local/logstash/logstash-core/lib/jars/commons-compiler-3.0.8.jar:/usr/local/logstash/logstash-core/lib/jars/error_prone_annotations-2.0.18.jar:/usr/local/logstash/logstash-core/lib/jars/google-java-format-1.5.jar:/usr/local/logstash/logstash-core/lib/jars/guava-22.0.jar:/usr/local/logstash/logstash-core/lib/jars/j2objc-annotations-1.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-annotations-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-core-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-databind-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/jackson-dataformat-cbor-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/janino-3.0.8.jar:/usr/local/logstash/logstash-core/lib/jars/javac-shaded-9-dev-r4023-3.jar:/usr/local/logstash/logstash-core/lib/jars/jruby-complete-9.1.13.0.jar:/usr/local/logstash/logstash-core/lib/jars/jsr305-1.3.9.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-api-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-core-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/log4j-slf4j-impl-2.9.1.jar:/usr/local/logstash/logstash-core/lib/jars/logstash-core.jar:/usr/local/logstash/logstash-core/lib/jars/slf4j-api-1.7.25.jar org.logstash.Logstash -f /usr/local/logstash/config/logstash.conf
0 R root 18078 1191 0 80 0 - 28176 - 21:18 pts/0 00:00:00 grep --color=auto logstash
[root@ZhangSiming logstash]# kill -9 18003
[root@ZhangSiming logstash]# bin/start.sh
```
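The multi-field pattern can also be checked in the shell before Logstash is restarted. The sketch below is a bash approximation of that grok expression using `[[ =~ ]]` and `BASH_REMATCH`, anchored with `^` and with the dot in `HTTP/1.1` escaped. `parse_access_line` is a hypothetical name. It also exposes a limitation of the pattern: the `121` anchor comes from one specific Chrome version string (`Chrome/72.0.3626.121 Safari/...`), so lines from other clients, such as the earlier curl requests in access.log, do not match. Logstash does not drop such events; grok tags them with `_grokparsefailure`.

```shell
#!/bin/bash
# parse_access_line LINE
# Splits one access-log line into the same four fields as the grok pattern
# (ip, mark, size, client) using bash's built-in ERE matching.
# Prints key=value pairs; returns 1 if the line does not match.
parse_access_line() {
    local re='^([0-9.]+) .*HTTP/1\.1" ([0-9]+) ([0-9]+) ".*121 ([a-zA-Z]+)'
    if [[ $1 =~ $re ]]; then
        printf 'ip=%s mark=%s size=%s client=%s\n' \
            "${BASH_REMATCH[1]}" "${BASH_REMATCH[2]}" "${BASH_REMATCH[3]}" "${BASH_REMATCH[4]}"
    else
        return 1
    fi
}
```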