@zhangsiming65965

2019-03-24T05:46:48.000000Z

Kubernetes In Depth (Part 1)

K8S

---Author:张思明 ZhangSiming

---Mail:1151004164@cnu.edu.cn

---QQ:1030728296

If you have a dream, go after it without holding back;

only hard work can change your fate.

I. The kubectl command-line management tool

1.1 Overview of kubectl commands

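- Basic commands

| Command | Description |
|---|---|
| create | Create a resource from a file or from stdin, for example with -f and a .yaml file; also used to create cluster roles and similar objects |
| expose | Expose a resource as a new Service |
| run | Run a particular image in the cluster |
| set | Set specific features on objects |
| get | Display one or more resources, commonly get pods, get svc, or get node; supports -o wide for more detail and -n kube-system to select a namespace |
| explain | Show documentation for a resource |
| edit | Edit a resource with the default editor |
| delete | Delete resources by file name, stdin, resource name, or label selector |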

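- Deployment commands

| Command | Description |
|---|---|
| rollout | Manage the rollout of a resource |
| rolling-update | Perform a rolling update of the given replication controller |
| scale | Scale the number of Pods up or down for a Deployment, ReplicaSet, RC, or Job |
| autoscale | Create an autoscaler that automatically scales the number of Pods up or down |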

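- Cluster management commands

| Command | Description |
|---|---|
| certificate | Modify certificate resources |
| cluster-info | Display cluster information |
| top | Display resource (CPU/Memory/Storage) usage. Heapster must be deployed first |
| cordon | Mark a node as unschedulable; no new Pods are scheduled onto it afterwards |
| uncordon | Mark a node as schedulable |
| drain | Evict the applications on a node in preparation for maintenance |
| taint | Update the taints on a node |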

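- Troubleshooting and debugging commands

| Command | Description |
|---|---|
| describe | Show detailed information about a specific resource or group of resources |
| logs | Print the logs of a container in a Pod. The container name is optional if the Pod has only one container |
| attach | Attach to a running container |
| exec | Execute a command in a container |
| port-forward | Forward one or more local ports to a Pod |
| proxy | Run a proxy to the Kubernetes API server |
| cp | Copy files or directories to and from containers |
| auth | Inspect authorization |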

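- Advanced commands

| Command | Description |
|---|---|
| apply | Apply a configuration to a resource from a file or from stdin |
| patch | Update fields of a resource with a patch |
| replace | Replace a resource from a file or from stdin |
| convert | Convert configuration files between API versions |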

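- Settings commands

| Command | Description |
|---|---|
| label | Update the labels on a resource |
| annotate | Update the annotations on a resource |
| completion | Generate shell auto-completion for kubectl |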

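- Other commands

| Command | Description |
|---|---|
| api-versions | Print the supported API versions |
| config | Generate or modify kubeconfig files (used to access the API, for example to configure authentication) |
| help | Help for any command |
| plugin | Run a command-line plugin |
| version | Print client and server version information |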

1.2 Managing the application lifecycle with kubectl

Workflow:

create --> expose --> update --> roll back --> delete

- Create the project

    # Create a Deployment named nginx with 3 replicas (for high availability), using the nginx:1.10 image and container port 80
    [root@ZhangSiming ~]# kubectl run nginx --replicas=3 --image=nginx:1.10 --port=80
    kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.
    deployment.apps/nginx created
    # A Kubernetes release is shipped as an image, not as a jar or war package; once created, the scheduler places the Pods on suitable Nodes
    [root@ZhangSiming ~]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    nginx-7b67cfbf9f-6t7z9   1/1     Running   0          18s
    nginx-7b67cfbf9f-96v9d   1/1     Running   0          18s
    nginx-7b67cfbf9f-q5hgl   1/1     Running   0          18s
    # Besides the Pods, the run command also created a Deployment, which manages updates of the project
    [root@ZhangSiming ~]# kubectl get deployments,pods
    NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deployment.extensions/nginx   3         3         3            3           3m29s
    NAME                         READY   STATUS    RESTARTS   AGE
    pod/nginx-7b67cfbf9f-6t7z9   1/1     Running   0          3m28s
    pod/nginx-7b67cfbf9f-96v9d   1/1     Running   0          3m28s
    pod/nginx-7b67cfbf9f-q5hgl   1/1     Running   0          3m28s

- Expose the service

    [root@ZhangSiming ~]# kubectl expose deployment nginx --port=100 --type=NodePort --target-port=80 --name=nginx-service
    # Expose the nginx Deployment as a Service so that clients can connect to it
    # --port is the port the Service listens on at its cluster IP
    # --type=NodePort additionally opens a port on every Node for access from outside the cluster
    # --target-port is the port the application listens on inside the container
    service/nginx-service exposed
    [root@ZhangSiming ~]# kubectl get service
    NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
    kubernetes      ClusterIP   10.0.0.1     <none>        443/TCP         27h
    nginx           NodePort    10.0.0.211   <none>        80:30792/TCP    28h
    nginx-service   NodePort    10.0.0.55    <none>        100:35373/TCP   3s
    # Test access:
    [root@ZhangSiming ~]# curl -I 10.0.0.55:100
    HTTP/1.1 200 OK
    Server: nginx/1.14.2
    Date: Mon, 25 Feb 2019 07:08:54 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 04 Dec 2018 14:44:49 GMT
    Connection: keep-alive
    ETag: "5c0692e1-264"
    Accept-Ranges: bytes

- Update the project

    [root@ZhangSiming ~]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    nginx-7b67cfbf9f-6t7z9   1/1     Running   0          20m
    nginx-7b67cfbf9f-96v9d   1/1     Running   0          20m
    nginx-7b67cfbf9f-q5hgl   1/1     Running   0          20m
    [root@ZhangSiming ~]# kubectl describe pods nginx-7b67cfbf9f-q5hgl | grep 1.10
    Image: nginx:1.10
    Normal Pulling 20m kubelet, 192.168.17.132 pulling image "nginx:1.10"
    Normal Pulled 20m kubelet, 192.168.17.132 Successfully pulled image "nginx:1.10"
    # The Pods currently run nginx:1.10. Assume CI has built a new Docker image and we want to update the service to nginx:1.14
    [root@ZhangSiming ~]# kubectl set image deployment/nginx nginx=nginx1.14
    deployment.extensions/nginx image updated
    # The image reference above is missing the colon before the tag, so the command is repeated with nginx:1.14
    [root@ZhangSiming ~]# kubectl set image deployment/nginx nginx=nginx:1.14
    deployment.extensions/nginx image updated
    [root@ZhangSiming ~]# kubectl get pods
    NAME                     READY   STATUS              RESTARTS   AGE
    nginx-787b58fd95-psw9g   1/1     Running             0          2m7s
    nginx-787b58fd95-vxtr4   1/1     Running             0          2m7s
    nginx-7b67cfbf9f-m6mp2   0/1     ContainerCreating   0          2s
    nginx-d87b556b7-tmbj6    0/1     Terminating         0          64s
    # The update is a rolling update: Pods are replaced a few at a time, so the service keeps running
    [root@ZhangSiming ~]# kubectl describe pod nginx-7b67cfbf9f-cksvf | grep 1.14
    Image: nginx:1.14
    Normal Pulled 2m17s kubelet, 192.168.17.132 Container image "nginx:1.14" already present on machine
    # nginx has been updated to 1.14
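How many Pods may be replaced at once during a rolling update is governed by the Deployment's maxSurge and maxUnavailable settings. The sketch below is only an illustration, not kubectl's own code: it assumes the 25% defaults used by apps-group Deployments (a surge percentage rounds up, an unavailable percentage rounds down), and the function name `rolling_update_bounds` is hypothetical.

```python
def rolling_update_bounds(replicas, max_surge_percent=25, max_unavailable_percent=25):
    """Return (max_total_pods, min_available_pods) during a rolling update.

    Assumption: both settings are percentages; surge rounds up and
    unavailable rounds down, as described for Deployment rolling updates.
    """
    max_surge = -(-replicas * max_surge_percent // 100)            # ceiling division
    max_unavailable = replicas * max_unavailable_percent // 100    # floor division
    return replicas + max_surge, replicas - max_unavailable


# With the 3 replicas used above: at most 4 Pods exist, at least 3 stay available.
print(rolling_update_bounds(3))
```

Under these assumptions a 3-replica Deployment starts one new Pod before it removes an old one, which is why the service stays reachable throughout the update.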

- Roll back an update

    # If an update causes problems, roll it back promptly
    [root@ZhangSiming ~]# kubectl rollout history deployment.apps/nginx
    deployment.apps/nginx
    REVISION  CHANGE-CAUSE
    1         <none>
    4         <none>
    5         <none>
    [root@ZhangSiming ~]# kubectl rollout undo deployment.apps/nginx --to-revision=1
    deployment.apps/nginx
    [root@ZhangSiming ~]# kubectl get pods
    NAME                     READY   STATUS              RESTARTS   AGE
    nginx-787b58fd95-7pmnd   1/1     Running             0          2s
    nginx-787b58fd95-rcg9d   0/1     ContainerCreating   0          0s
    nginx-7b67cfbf9f-m6mp2   1/1     Running             0          8m31s
    nginx-7b67cfbf9f-scs5h   1/1     Terminating         0          8m28s
    [root@ZhangSiming ~]# kubectl describe pod nginx-787b58fd95-7pmnd | grep 1.10
    Image: nginx:1.10
    Normal Pulled 60s kubelet, 192.168.17.132 Container image "nginx:1.10" already present on machine
    # Rolled back to nginx 1.10

- Delete the project

    # We deployed two things, the Deployment and the Service; once both are deleted, the Pods disappear as well
    [root@ZhangSiming ~]# kubectl delete deployment/nginx
    deployment.extensions "nginx" deleted
    [root@ZhangSiming ~]# kubectl delete svc/nginx-service
    service "nginx-service" deleted
    [root@ZhangSiming ~]# kubectl get pods
    No resources found.
    # Deleting only the Pods without deleting the Deployment has no lasting effect, because the Deployment recreates them

1.3 Managing a Kubernetes cluster remotely with kubectl

So far we have managed the cluster with the local kubectl on the Master node. That works because kubectl connects to the local apiserver by default. What if we need to manage the cluster remotely?

A kubeconfig file has to be generated; with it, kubectl on a remote machine can manage the cluster.

    # On a Node
    [root@ZhangSiming ~]# cd /opt/kubernetes/ssl/
    # Use kubectl to generate the kubeconfig file for connecting to the cluster
    [root@ZhangSiming ssl]# cat kubeconfig.sh
    #!/bin/bash
    # The server address is the Master VIP
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=https://192.168.17.135:6443 \
      --kubeconfig=config
    kubectl config set-credentials cluster-admin \
      --certificate-authority=ca.pem \
      --embed-certs=true \
      --client-key=admin-key.pem \
      --client-certificate=admin.pem \
      --kubeconfig=config
    # The context ties the cluster and the user together
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=cluster-admin \
      --kubeconfig=config
    kubectl config use-context default --kubeconfig=config
    [root@ZhangSiming ssl]# sh kubeconfig.sh
    Cluster "kubernetes" set.
    User "cluster-admin" set.
    Context "default" created.
    Switched to context "default".
    [root@ZhangSiming ssl]# mv config /opt/kubernetes/cfg
    [root@ZhangSiming ssl]# kubectl --kubeconfig=/opt/kubernetes/cfg/config get all
    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    service/kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   28h
    # Access succeeded

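II. YAML files (resource orchestration)

Configuring a cluster by typing kubectl commands one at a time is slow, and the result is hard to modify or debug. Instead, the whole deployment can be written into a YAML file and applied with kubectl create -f. This gives one-step deployment and is much easier to change and debug later.

2.1 YAML file format

YAML is a concise data-serialization language, not a markup language.

Syntax

- Indentation expresses hierarchy;
- Tab characters are not allowed; indent with spaces;
- Each level is usually indented by 2 spaces;
- Separator characters such as colons and commas are followed by 1 space;
- "---" marks the beginning of a YAML document within a file;
- "#" starts a comment.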
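The rule about tabs is the one that most often breaks a manifest, and it can be checked mechanically before running kubectl. The following is a minimal sketch using only the Python standard library; the function name `find_tab_indented_lines` is made up for this illustration.

```python
def find_tab_indented_lines(text: str) -> list[int]:
    """Return the 1-based numbers of lines whose indentation contains a tab."""
    bad_lines = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Leading whitespace is whatever lstrip() would remove.
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            bad_lines.append(number)
    return bad_lines


sample = "spec:\n  replicas: 3\n\tselector: {}\n"
print(find_tab_indented_lines(sample))  # line 3 is indented with a tab
```

Running a check like this over a manifest before kubectl create -f makes an indentation mistake show up as a line number rather than as a parser error.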

2.2 Creating resource objects from YAML files

    # Write the YAML files
    [root@ZhangSiming ssl]# cat nginx-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      # Everything above defines the controller
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.15.4
            ports:
            - containerPort: 80
      # The template defines the objects being managed
    [root@ZhangSiming ssl]# cat nginx-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels:
        app: nginx
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: nginx
    # Create the project from the YAML files
    [root@ZhangSiming ssl]# kubectl create -f nginx-deployment.yaml
    deployment.apps/nginx-deployment created
    [root@ZhangSiming ssl]# kubectl get pods
    NAME                              READY   STATUS    RESTARTS   AGE
    nginx-deployment-d55b94fd-qd8ds   1/1     Running   0          30s
    nginx-deployment-d55b94fd-swmm5   1/1     Running   0          30s
    nginx-deployment-d55b94fd-z4npc   1/1     Running   0          30s
    [root@ZhangSiming ssl]# kubectl create -f nginx-service.yaml
    service/nginx-service created
    [root@ZhangSiming ssl]# kubectl get svc
    NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes      ClusterIP   10.0.0.1     <none>        443/TCP        28h
    nginx-service   NodePort    10.0.0.189   <none>        80:30177/TCP   5s

The resource to create and every object in it must be fully described in the file. Compared with individual kubectl commands, YAML files are easier to keep, modify, and debug, and they avoid typing commands one by one.

The advantage becomes especially clear when deploying microservices or other complex services.

2.3 Exporting YAML files for safekeeping

In practice there are too many YAML fields to remember. We can download a YAML template and modify it, or export YAML from kubectl.

    # Dry-run a resource that does not exist yet and export it as YAML
    # -o selects the output format, for example yaml or json
    # --dry-run performs a trial run without creating anything
    [root@ZhangSiming ssl]# kubectl run nginx --replicas=3 --image=nginx:1.14 --port=80 --dry-run -o yaml > ~/nginx-deployment.yaml
    kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.
    [root@ZhangSiming ssl]# cat nginx-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.15.4
            ports:
            - containerPort: 80
    # Export the YAML of a resource that already exists
    [root@ZhangSiming ssl]# kubectl get pods
    NAME                              READY   STATUS    RESTARTS   AGE
    nginx-deployment-d55b94fd-qd8ds   1/1     Running   0          23m
    nginx-deployment-d55b94fd-swmm5   1/1     Running   0          23m
    nginx-deployment-d55b94fd-z4npc   1/1     Running   0          23m
    [root@ZhangSiming ssl]# kubectl get deployment.apps/nginx-deployment --export -o yaml > ~/nginx-deployment.yaml
    [root@ZhangSiming ssl]# cat ~/nginx-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx
      name: nginx-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      strategy:
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx:1.15.4
            imagePullPolicy: IfNotPresent
            name: nginx
            ports:
            - containerPort: 80
              protocol: TCP
    # The exported file may contain many more fields; unfamiliar ones and empty {} sections can be deleted
    # If you forget how to write a field, look it up with explain
    [root@ZhangSiming ssl]# kubectl explain deployment

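III. Understanding Pods in depth

3.1 Introduction to Pods and categories of containers

3.1.1 Introduction to Pods

- The smallest deployable unit;
- A group of containers: tightly cooperating containers are placed in the same Pod;
- The containers in a Pod share one network namespace;
- Pods are ephemeral. A Pod is normally designed to be stateless at first, so it does not matter which Node it is scheduled to.

3.1.2 Categories of containers in a Pod

Infrastructure Container: the base container. When a Pod is created it is started automatically from the image specified in the kubelet configuration, and its job is to hold the network namespace of the whole Pod;

InitContainers: init containers. They run before the application containers and perform initialization work, for example preparing prerequisites or enforcing a start-up order;

Containers: application containers. All application containers start in parallel and run the actual workload.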

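3.2 Pod image pull policy

When a resource object is created, whether its image is pulled is decided by an image pull policy, set through the imagePullPolicy field in the YAML file of the deployment.

imagePullPolicy takes one of three values:

1. IfNotPresent: the default (unless the image tag is latest or omitted, in which case the default is Always); the image is pulled only if it is not already on the Node;

2. Always: the image is pulled again every time a Pod is created;

3. Never: the Pod never pulls the image by itself.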
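The three policies boil down to a small decision, sketched here in Python purely as an illustration. The function name `should_pull` is hypothetical; the real decision is made inside the kubelet.

```python
def should_pull(policy: str, image_on_node: bool) -> bool:
    """Decide whether an image pull is attempted under a given imagePullPolicy."""
    if policy == "Always":
        return True                 # pull on every Pod creation
    if policy == "IfNotPresent":
        return not image_on_node    # pull only when the Node lacks the image
    if policy == "Never":
        return False                # rely on a locally present image
    raise ValueError(f"unknown imagePullPolicy: {policy}")


for policy in ("IfNotPresent", "Always", "Never"):
    print(policy, should_pull(policy, image_on_node=True))
```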

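    # Check the image pull policy of a running resource
    [root@ZhangSiming ~]# kubectl get deploy/nginx-deployment -o yaml | grep imagePullPolicy
    imagePullPolicy: IfNotPresent

With a public image registry, the image can be pulled directly according to the pull policy. A private registry such as Harbor requires authentication, so a credential has to be referenced in the YAML file.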

    # Get the credentials on a server that has already logged in to Harbor
    [root@ZhangSiming ~]# docker login -uadmin -paptx65965697 www.yunjisuan2.com
    WARNING! Using --password via the CLI is insecure. Use --password-stdin.
    WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store
    Login Succeeded
    [root@ZhangSiming kubernetes]# cat /root/.docker/config.json | base64 -w 0
    ewoJImF1dGhzIjogewoJCSJ3d3cueXVuamlzdWFuMi5jb20iOiB7CgkJCSJhdXRoIjogIllXUnRhVzQ2WVhCMGVEWTFPVFkxTmprMyIKCQl9Cgl9LAoJIkh0dHBIZWFkZXJzIjogewoJCSJVc2VyLUFnZW50IjogIkRvY2tlci1DbGllbnQvMTguMDkuMSAobGludXgpIgoJfQp9
    # Copy the string as a single line and keep it for later
    # Switch to the Master node
    [root@ZhangSiming ~]# cat centos.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deploy-centos
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: centos
      template:
        metadata:
          labels:
            app: centos
        spec:
          containers:
          - name: app-centos
            # Pull the image from our own Harbor
            image: www.yunjisuan2.com/library/mongo
            # Pull the image again on every Pod creation
            imagePullPolicy: Always
          # The credential, created below
          imagePullSecrets:
          - name: registry-pull-secret
    [root@ZhangSiming ~]# cat centos-secret.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: registry-pull-secret
    data:
      .dockerconfigjson: ewoJImF1dGhzIjogewoJCSJ3d3cueXVuamlzdWFuMi5jb20iOiB7CgkJCSJhdXRoIjogIllXUnRhVzQ2WVhCMGVEWTFPVFkxTmprMyIKCQl9Cgl9LAoJIkh0dHBIZWFkZXJzIjogewoJCSJVc2VyLUFnZW50IjogIkRvY2tlci1DbGllbnQvMTguMDkuMSAobGludXgpIgoJfQp9
    # The correct type is kubernetes.io/dockerconfigjson; the captured run below used the misspelling kubetnetes.io/dockerconfigjson, which is why it appears in the output
    type: kubernetes.io/dockerconfigjson
    # Create the credential used to authenticate against Harbor
    [root@ZhangSiming ~]# kubectl create -f centos-secret.yaml
    secret/registry-pull-secret created
    [root@ZhangSiming ~]# kubectl get secrets
    NAME                   TYPE                                  DATA   AGE
    default-token-f2kfs    kubernetes.io/service-account-token   3      7d16h
    registry-pull-secret   kubetnetes.io/dockerconfigjson        1      7s
    [root@ZhangSiming ~]# kubectl create -f centos.yaml
    deployment.apps/deploy-centos created
    # Check the result of the image pull
    [root@ZhangSiming ~]# kubectl get pods
    NAME                             READY   STATUS    RESTARTS   AGE
    deploy-centos-6fc88f94c9-xbqtr   1/1     Running   0          55s
    # The pull succeeded; make sure the image actually exists in the registry
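The value stored under .dockerconfigjson is simply a base64-encoded Docker config file whose auth entry is itself base64 of "user:password". The sketch below builds such a value with the standard library instead of copying it from a logged-in host. The registry name and credentials are placeholders, and the helper name `build_dockerconfigjson` is an assumption made for this example.

```python
import base64
import json


def build_dockerconfigjson(registry: str, username: str, password: str) -> str:
    """Return the base64 string for a Secret's .dockerconfigjson field."""
    # Inner layer: "user:password" encoded as base64, as docker login stores it.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {"auths": {registry: {"auth": auth}}}
    # Outer layer: the whole JSON document encoded as base64 for the Secret.
    return base64.b64encode(json.dumps(config).encode()).decode()


# Placeholder values, not a real registry or account.
encoded = build_dockerconfigjson("registry.example.com", "user", "pass")
print(encoded)
```

Decoding the printed string with base64 -d shows the same JSON structure as /root/.docker/config.json, minus the HttpHeaders section.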

3.3 Limiting Pod resources

    [root@ZhangSiming ~]# cat limit.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: frontend
    spec:
      containers:
      - name: db
        image: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "password"
        resources:
          requests:
            memory: "64Mi"
            cpu: "250m"
          limits:
            memory: "128Mi"
            cpu: "500m"
      - name: wp
        image: wordpress
        resources:
          requests:
            memory: "64Mi"
            cpu: "250m"
          limits:
            memory: "128Mi"
            cpu: "500m"
    # The field is resources. requests is what a Node must have available for the Pod to be scheduled there; limits is the maximum the container may use while running
    [root@ZhangSiming ~]# kubectl create -f limit.yaml
    pod/frontend created
    [root@ZhangSiming ~]# kubectl get pods -o wide
    NAME       READY   STATUS    RESTARTS   AGE     IP           NODE             NOMINATED NODE
    frontend   2/2     Running   0          5m29s   172.17.3.3   192.168.17.132   <none>
    # The resource requests and limits show up in the node description
    [root@ZhangSiming ~]# kubectl describe nodes 192.168.17.132
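The quantities in the manifest use Kubernetes units: "250m" means 250 millicores (a quarter of one CPU) and "64Mi" means 64 mebibytes. The following sketch converts the values used above. It handles only the suffixes shown here plus Ki and Gi, and the function names are hypothetical.

```python
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as '250m' or '2' into cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000   # millicores to cores
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '64Mi' into bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)                   # plain number of bytes


print(parse_cpu("250m"), parse_memory("64Mi"))
```

With two containers each requesting 250m and 64Mi, the Pod as a whole needs a Node with at least half a core and 128Mi unreserved before it can be scheduled there.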

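3.4 Pod restart policy

- Always: always restart the container after it terminates; this is the default;
- OnFailure: restart the container only when it exits abnormally (non-zero exit code);
- Never: never restart the container after it terminates.

For containers that are meant to run continuously, Always is the right setting; change it only for special requirements.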
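The three policies can be written as one small decision function. This is an illustrative sketch only; `should_restart` is a hypothetical name and the real logic lives in the kubelet.

```python
def should_restart(policy: str, exit_code: int) -> bool:
    """Decide whether a terminated container is restarted under restartPolicy."""
    if policy == "Always":
        return True
    if policy == "OnFailure":
        return exit_code != 0   # only abnormal exits trigger a restart
    if policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {policy}")


print(should_restart("OnFailure", 0), should_restart("OnFailure", 1))
```

OnFailure is the usual choice for batch-style work, because a task that finished successfully (exit code 0) is not started again.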

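3.5 Pod health checks (probes)

There are two types of probe:

- livenessProbe

If the check fails, the container is killed and then handled according to the Pod's restartPolicy (the three restart policies above);

- readinessProbe

If the check fails, Kubernetes removes the Pod from the Service endpoints, so users can no longer reach it.

Probes support three check methods:

- httpGet

Sends an HTTP request; a status code of at least 200 and below 400 counts as success;

- exec

Runs a shell command; exit status 0 counts as success;

- tcpSocket

Opens a TCP connection to the given port; the check succeeds if the connection can be established.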
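The success rule for httpGet, and the way repeated failures add up to a restart, can be sketched as follows. The threshold of 3 consecutive failures matches the documented default for failureThreshold; the function names are hypothetical and the code is only a model of the behaviour, not kubelet code.

```python
def http_probe_succeeded(status_code: int) -> bool:
    """An httpGet probe passes for any status code in [200, 400)."""
    return 200 <= status_code < 400


def liveness_triggers_restart(probe_results, failure_threshold=3):
    """Return True once `failure_threshold` consecutive probes have failed."""
    consecutive_failures = 0
    for passed in probe_results:
        consecutive_failures = 0 if passed else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            return True
    return False


codes = [200, 302, 500, 500, 500]
print(liveness_triggers_restart(http_probe_succeeded(c) for c in codes))
```

A single failed probe is therefore not enough to kill a container; the failures have to be consecutive.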

    [root@ZhangSiming ~]# cat healthy.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-exec
    spec:
      containers:
      - name: liveness
        image: busybox
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 5
    # After 30 seconds /tmp/healthy is removed, so the health check starts failing and the container is restarted
    # Note: the shell command also exits right after the rm, which by itself leads to a restart under the default restartPolicy Always
    [root@ZhangSiming ~]# kubectl create -f healthy.yaml
    pod/liveness-exec created
    [root@ZhangSiming ~]# kubectl get pods
    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   1/1     Running   0          7s
    [root@ZhangSiming ~]# kubectl get pods
    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   1/1     Running   0          19s
    [root@ZhangSiming ~]# kubectl get pods
    NAME            READY   STATUS    RESTARTS   AGE
    liveness-exec   1/1     Running   1          33s

5.6Pod调度约束

默认的调度是根据每个节点的资源利用率进行调度,已经很合理了。实际工作中我们还可以根据需求进行控制调度。

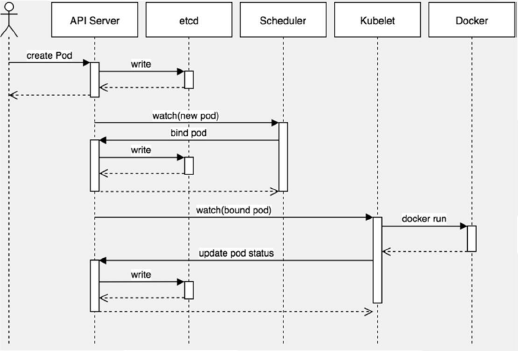

Pod发布流程

1.用户在通过kubectl创建Pod;

2.apiserver写入Pod信息到Etcd-cluster中;

3.Scheduler通过watch获取到Pod信息,根据设置的调度算法返回合理的调度节点信息到Etcd集群,之后返回apiserver;

4.调度节点的kubelet通过watch检测到自己是被选举出来的调度节点,之后在自身部署Pod;

5.启动docker容器引擎运行服务;

6.kubelet更新信息回到Etcd-cluster集群之后,返回给apiserver,用户就可以看到Pod部署状况了。

Scheduling constraints

- nodeName schedules the Pod onto the Node with the given name
- nodeSelector schedules the Pod onto a Node whose labels match

- Using nodeName

    [root@ZhangSiming ~]# cat sche.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-example
      labels:
        app: nginx
    spec:
      # nodeName bypasses the scheduler and forces placement on this Node
      nodeName: 192.168.17.132
      containers:
      - name: nginx
        image: nginx:1.15
    [root@ZhangSiming ~]# kubectl create -f sche.yaml
    pod/pod-example created
    [root@ZhangSiming ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE   IP           NODE             NOMINATED NODE
    pod-example   1/1     Running   0          16s   172.17.3.3   192.168.17.132   <none>

- Using nodeSelector

    # Give the Node a label
    [root@ZhangSiming ~]# kubectl label nodes 192.168.17.132 team=a
    node/192.168.17.132 labeled
    [root@ZhangSiming ~]# kubectl get nodes --show-labels
    NAME             STATUS     ROLES    AGE     VERSION   LABELS
    192.168.17.132   Ready      <none>   7d16h   v1.12.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=192.168.17.132,team=a
    192.168.17.133   NotReady   <none>   7d15h   v1.12.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=192.168.17.133
    [root@ZhangSiming ~]# vim sche.yaml
    [root@ZhangSiming ~]# cat sche.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-example
      labels:
        app: nginx
    spec:
      # The scheduler places the Pod on a Node labeled team=a
      nodeSelector:
        team: a
      containers:
      - name: nginx
        image: nginx:1.15
    [root@ZhangSiming ~]# kubectl create -f sche.yaml
    pod/pod-example created
    [root@ZhangSiming ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE   IP           NODE             NOMINATED NODE
    pod-example   1/1     Running   0          25s   172.17.3.3   192.168.17.132   <none>

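3.7 Troubleshooting Pods

3.7.1 The five Pod phases

| Phase | Description |
|---|---|
| Pending | The Pod has been accepted by Kubernetes but cannot yet be created for some reason, for example a slow image download or unsuccessful scheduling |
| Running | The Pod has been bound to a node and all of its containers have been created. At least one container is running, starting, or restarting |
| Succeeded | All containers in the Pod have terminated successfully and will not be restarted |
| Failed | All containers in the Pod have terminated, and at least one of them terminated in failure, that is, it exited with a non-zero status or was terminated by the system |
| Unknown | The apiserver cannot obtain the state of the Pod for some reason, usually a communication error between the Master and the kubelet on the Pod's host |

    # Show events, which reveal where things are stuck: pulling the image or scheduling
    [root@ZhangSiming ~]# kubectl describe TYPE/NAME
    # Show the logs of a container
    [root@ZhangSiming ~]# kubectl logs TYPE/NAME [-c CONTAINER]
    # Run a command inside the container to inspect the state of the application
    [root@ZhangSiming ~]# kubectl exec POD [-c CONTAINER] -- COMMAND [args...]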

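IV. Understanding Services in depth (connecting to the outside world)

1. A Service keeps clients from losing track of Pods: it dynamically follows changes in Pod IP addresses;

2. It defines an access policy for a group of Pods, ties those Pods together, and load-balances requests across the Pod replicas;

3. There are three Service types: ClusterIP, NodePort, and LoadBalancer;

4. Underneath, Services are implemented mainly by two network modes, iptables and IPVS. The mode determines how request traffic is forwarded and how load balancing is done.

4.1 The relationship between Pods and Services

Pods carry labels, and a Service uses a selector that matches those labels to associate itself with the Pods.

A Service provides layer-4 (TCP/UDP) load balancing for Pods. Traffic is spread across the Pod IP addresses that the endpoints controller has recorded for the Service; the exact algorithm depends on the proxy mode described in 4.4.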
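The selector matching described above can be modelled in a few lines. The sketch below is an illustration and not the endpoints controller's code: a Pod backs a Service when its labels contain every key/value pair of the selector. The Pod list and the helper name `endpoints_for` are made up for the example, and only ready Pods are included.

```python
def endpoints_for(selector: dict, pods: list, port: int) -> list:
    """Return 'ip:port' for every ready Pod whose labels match the selector."""
    return [
        f"{pod['ip']}:{port}"
        for pod in pods
        if pod["ready"] and selector.items() <= pod["labels"].items()
    ]


# Hypothetical Pods for illustration only.
pods = [
    {"ip": "172.17.83.4", "labels": {"app": "nginx"}, "ready": True},
    {"ip": "172.17.83.5", "labels": {"app": "nginx", "tier": "web"}, "ready": True},
    {"ip": "172.17.83.9", "labels": {"app": "mysql"}, "ready": True},
    {"ip": "172.17.83.6", "labels": {"app": "nginx"}, "ready": False},
]
print(endpoints_for({"app": "nginx"}, pods, 80))
```

When no Pod matches, the list is empty, which corresponds to a Service whose ENDPOINTS column shows <none>.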

4.2 Defining a Service

    [root@ZhangSiming ~]# vim service.yaml
    [root@ZhangSiming ~]# cat service.yaml
    kind: Service
    apiVersion: v1
    metadata:
      name: my-service
      namespace: default
    spec:
      # A Service is of type ClusterIP by default; if clusterIP is not set, a random cluster IP is assigned
      clusterIP: 10.0.0.123
      # The selector tells Kubernetes which Pods this Service belongs to
      selector:
        app: nginx
      ports:
      - name: http
        protocol: TCP
        # Port 80 of the Service
        port: 80
        targetPort: 80
    # Create the Service
    [root@ZhangSiming ~]# kubectl create -f service.yaml
    service/my-service created
    [root@ZhangSiming ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   21d
    my-service   ClusterIP   10.0.0.123   <none>        80/TCP    6s
    [root@ZhangSiming ~]# kubectl get endpoints
    NAME         ENDPOINTS             AGE
    kubernetes   192.168.17.130:6443   21d
    my-service   <none>                66s
    # endpoints shows which Pods a Service is associated with; here nothing is associated yet

Explanation: a Service keeps clients from losing track of Pods by dynamically following changes in Pod IP addresses.

The tracking is actually done through endpoints. When a Pod restarts and gets a new IP, the endpoints controller notices the new IP and associates it with the Service; only then can the Pod be reached through the Service.

    # describe shows the details of a Service
    [root@ZhangSiming ~]# kubectl describe svc my-service
    Name:              my-service
    Namespace:         default
    Labels:            <none>
    Annotations:       <none>
    Selector:          app=nginx
    Type:              ClusterIP
    IP:                10.0.0.123
    Port:              http  80/TCP
    TargetPort:        80/TCP
    Endpoints:         <none>
    Session Affinity:  None
    Events:            <none>

4.3Service类型

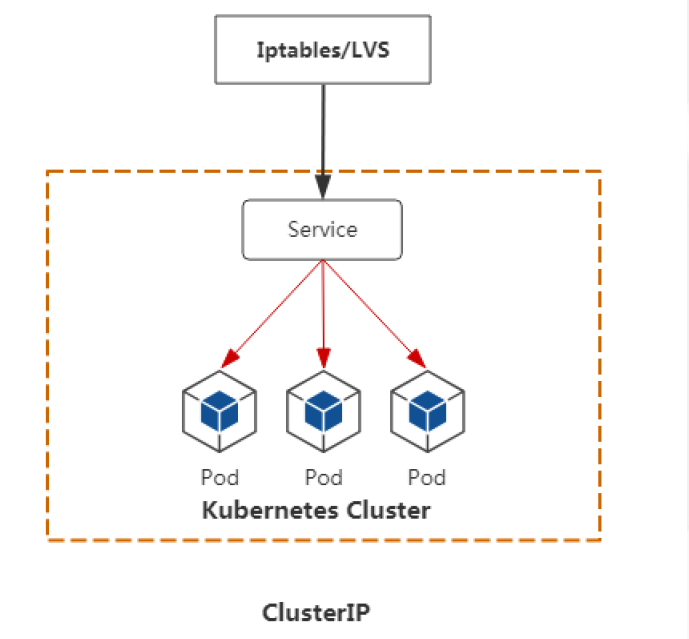

- ClusterIP:默认,分配一个集群内部可以访问的虚拟IP,主要用于内部应用的连接。

- NodePort:在每个Node上分配一个端口作为外部访问入口,主要用于外部应用的访问。

LoadBalancer:工作在特定的Cloud Provider上,例如Google Cloud,AWS,OpenStack

ClusterIP类型

这种方式是用于集群内部组件之间访问的,是默认的Service类型。Service只是逻辑上将多个Pod关联起来,底层是Iptables或者IPVS实现的负载均衡,只需要知道Service的clusterip即可。

YAML格式:

[root@ZhangSiming ~]# cat service.yamlapiVersion: v1kind: Servicemetadata:name: my-servicespec:selector:app: Aports:- protocol: TCPport: 80targetPort: 8080

- NodePort type

A NodePort Service not only gets a cluster IP and port for access inside the cluster; kube-proxy also opens a listening port on every Node, and through that port the Pods can be reached from outside the cluster.

Node IPs are generally not fixed, whereas the opened port usually needs to be fixed.

YAML format:

    [root@ZhangSiming ~]# cat service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app: A
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
        # Pin the nodePort
        nodePort: 30001
      type: NodePort
    # Update the Service
    [root@ZhangSiming ~]# kubectl apply -f service.yaml
    Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
    service/my-service configured
    [root@ZhangSiming ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        21d
    my-service   NodePort    10.0.0.123   <none>        80:30001/TCP   29m
    # 80 is the port on the cluster IP; 30001 is the NodePort
    # On a Node
    [root@ZhangSiming ~]# netstat -antup | grep 30001
    tcp6       0      0 :::30001      :::*      LISTEN      884/kube-proxy

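- LoadBalancer type

The LoadBalancer type normally works on public clouds and is not suited to self-built Kubernetes clusters. A user request first reaches the cloud provider's load balancer (LB), which forwards it to the Service behind it.

LoadBalancer relies on the LB interface of a specific cloud provider, for example AWS, Google, or OpenStack, and automatically wires the cloud load balancer to the underlying IPs.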

4.4 Service proxy modes

The traffic forwarding and load balancing behind a Service are actually carried out by kube-proxy on each Kubernetes Node.

    # We currently use IPVS as the underlying Service proxy (--proxy-mode=ipvs)
    [root@ZhangSiming kubernetes]# cat cfg/kube-proxy
    KUBE_PROXY_OPTS="--logtostderr=true \
    --v=4 \
    --hostname-override=192.168.17.132 \
    --cluster-cidr=10.0.0.0/24 \
    --proxy-mode=ipvs \
    --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

- iptables

    # Switch from IPVS proxy mode to iptables proxy mode
    [root@ZhangSiming kubernetes]# vim cfg/kube-proxy
    [root@ZhangSiming kubernetes]# cat cfg/kube-proxy
    KUBE_PROXY_OPTS="--logtostderr=true \
    --v=4 \
    --hostname-override=192.168.17.132 \
    --cluster-cidr=10.0.0.0/24 \
    --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
    [root@ZhangSiming kubernetes]# systemctl restart kube-proxy.service
    [root@ZhangSiming kubernetes]# ps -elf | grep kube-proxy
    4 S root 21842 1 1 80 0 - 10661 futex_ 14:40 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=192.168.17.132 --cluster-cidr=10.0.0.0/24 --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
    0 R root 21969 1315 0 80 0 - 28176 - 14:40 pts/0 00:00:00 grep --color=auto kube-proxy

- Master node

    [root@ZhangSiming ~]# kubectl create -f nginx.yaml
    deployment.apps/nginx created
    [root@ZhangSiming ~]# kubectl create -f service.yaml
    service/my-service created
    [root@ZhangSiming ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        21d
    my-service   NodePort    10.0.0.133   <none>        80:30001/TCP   27s
    # Note the cluster IP of the Service so that its rules can be found on the Node

- Node

    [root@ZhangSiming kubernetes]# iptables-save | grep 10.0.0.133
    -A KUBE-SERVICES ! -s 10.0.0.0/24 -d 10.0.0.133/32 -p tcp -m comment --comment "default/my-service: cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
    -A KUBE-SERVICES -d 10.0.0.133/32 -p tcp -m comment --comment "default/my-service: cluster IP" -m tcp --dport 80 -j KUBE-SVC-KEAUNL7HVWWSEZA6
    [root@ZhangSiming kubernetes]# iptables-save | grep KUBE-SVC-KEAUNL7HVWWSEZA6
    :KUBE-SVC-KEAUNL7HVWWSEZA6 - [0:0]
    -A KUBE-NODEPORTS -p tcp -m comment --comment "default/my-service:" -m tcp --dport 30001 -j KUBE-SVC-KEAUNL7HVWWSEZA6
    -A KUBE-SERVICES -d 10.0.0.133/32 -p tcp -m comment --comment "default/my-service: cluster IP" -m tcp --dport 80 -j KUBE-SVC-KEAUNL7HVWWSEZA6
    -A KUBE-SVC-KEAUNL7HVWWSEZA6 -m statistic --mode random --probability 0.33332999982 -j KUBE-SEP-JQ4W2NCACGVYJ6IM
    -A KUBE-SVC-KEAUNL7HVWWSEZA6 -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-YVQHBEOICAVG6NCJ
    -A KUBE-SVC-KEAUNL7HVWWSEZA6 -j KUBE-SEP-H32GYTWB4BTUZQJM
    # Rules are matched from top to bottom: the first is taken with probability 1/3, the second with probability 1/2 of what remains, and the last takes the rest
    # Each of the three endpoints therefore receives about one third of the connections; this is equal-weight random selection rather than strict round-robin
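Why probabilities of 1/3, 1/2 and "everything else" give each endpoint an equal share is easiest to see by multiplying them out. The sketch below does that with exact fractions; it is a worked calculation rather than anything kube-proxy runs, and the function name `effective_shares` is hypothetical.

```python
from fractions import Fraction


def effective_shares(rule_probabilities):
    """Share of traffic each rule receives when rules are tried in order."""
    remaining = Fraction(1)       # traffic that has not matched an earlier rule
    shares = []
    for probability in rule_probabilities:
        shares.append(remaining * probability)
        remaining *= 1 - probability
    return shares


# The three KUBE-SEP rules above: 1/3, then 1/2, then an unconditional jump.
print(effective_shares([Fraction(1, 3), Fraction(1, 2), Fraction(1)]))
```

The second rule only sees the two thirds of traffic that skipped the first rule, and half of two thirds is again one third.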

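- IPVS

Drawbacks of the iptables mode:

1. It creates a large number of rules (updates are not incremental, which costs resources);

2. iptables rules are matched one by one from top to bottom, so large rule sets can add latency.

Introducing IPVS:

For these reasons the IPVS proxy mode was introduced.

Its advantage: IPVS is a load balancer built into the kernel that looks up services through hash tables, so it is efficient and avoids the problems iptables runs into.

- Master node

    [root@ZhangSiming ~]# kubectl get endpoints
    NAME         ENDPOINTS                                       AGE
    kubernetes   192.168.17.130:6443                             21d
    my-service   172.17.83.4:80,172.17.83.5:80,172.17.83.6:80    38m
    [root@ZhangSiming ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        21d
    my-service   NodePort    10.0.0.133   <none>        80:30001/TCP   39m

- Node

    [root@ZhangSiming kubernetes]# ipvsadm -ln | grep -A 3 10.0.0.133
    TCP  10.0.0.133:80 rr
      -> 172.17.83.4:80               Masq    1      0          0
      -> 172.17.83.5:80               Masq    1      0          0
      -> 172.17.83.6:80               Masq    1      0          0
    # Both modes do their work in the kernel, but IPVS is purpose-built for load balancing and scales much better than long sequential iptables rule chains, so IPVS is the usual choice of kube-proxy mode.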

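To sum up, a comparison of the iptables and IPVS Service proxy modes:

| iptables | IPVS |
|---|---|
| Flexible and powerful (can act on packets at different stages of processing) | A dedicated in-kernel load balancer with better performance |
| Rules are traversed and updated sequentially, so latency grows linearly | Rich set of scheduling algorithms: rr, wrr, lc, wlc, ip hash... |

Settings such as the scheduling algorithm are configured in the kube-proxy configuration file (for example through the --ipvs-scheduler flag).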
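The rr scheduler shown in the ipvsadm output hands connections to the backends strictly in turn. A minimal Python model of that behaviour is shown below, using the endpoint addresses from the output above; it illustrates the algorithm only and has nothing to do with the kernel implementation. The function name `round_robin` is hypothetical.

```python
from itertools import cycle


def round_robin(backends, request_count):
    """Assign `request_count` requests to backends in strict rotation."""
    picker = cycle(backends)
    return [next(picker) for _ in range(request_count)]


backends = ["172.17.83.4:80", "172.17.83.5:80", "172.17.83.6:80"]
for target in round_robin(backends, 4):
    print(target)
```

The fourth request wraps around to the first backend, unlike the iptables mode, where every connection is an independent random draw.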

4.5 DNS (CoreDNS)

The DNS service watches the Kubernetes API and creates a DNS record for every Service, which is used for name resolution.

    [root@ZhangSiming ~]# cat /opt/kubernetes/cfg/kubelet.config
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: 192.168.17.132
    port: 10250
    cgroupDriver: cgroupfs
    # clusterDNS and clusterDomain must match the values used in the CoreDNS manifest below
    clusterDNS:
    - 10.0.0.2
    clusterDomain: cluster.local.
    failSwapOn: false
    authentication:
      anonymous:
        enabled: true
    [root@ZhangSiming ~]# vim coredns.yaml.sed
    [root@ZhangSiming ~]# cat coredns.yaml.sed
    # Warning: This is a file generated from the base underscore template file: coredns.yaml.base
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: Reconcile
      name: system:coredns
    rules:
    - apiGroups:
      - ""
      resources:
      - endpoints
      - services
      - pods
      - namespaces
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: EnsureExists
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      Corefile: |
        .:53 {
            errors
            health
            # Set the CoreDNS domain here
            kubernetes cluster.local in-addr.arpa ip6.arpa {
                pods insecure
                upstream
                fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            forward . /etc/resolv.conf
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
          annotations:
            seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          nodeSelector:
            beta.kubernetes.io/os: linux
          containers:
          - name: coredns
            # Change the image source here
            image: coredns/coredns:1.3.1
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
          dnsPolicy: Default
          volumes:
          - name: config-volume
            configMap:
              name: coredns
              items:
              - key: Corefile
                path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      # Set the clusterIP to match clusterDNS in the kubelet configuration
      clusterIP: 10.0.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP
    # Start CoreDNS
    [root@ZhangSiming ~]# kubectl create -f coredns.yaml.sed
    serviceaccount/coredns created
    clusterrole.rbac.authorization.k8s.io/system:coredns created
    clusterrolebinding.rbac.authorization.k8s.io/system:coredns created
    configmap/coredns created
    deployment.apps/coredns created
    service/kube-dns created
    [root@ZhangSiming ~]# kubectl get pods -n kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE
    coredns-64479cf49b-6mgpv                0/1     Running   0          17s
    kubernetes-dashboard-5f5bfdc89f-zkc9n   1/1     Running   6          24d
    # Test DNS resolution
    [root@ZhangSiming ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        21d
    my-service   NodePort    10.0.0.133   <none>        80:30001/TCP   126m
    # CoreDNS resolves the NAME of a Service to its CLUSTER-IP
    [root@ZhangSiming ~]# kubectl run -it --image=busybox:1.28.4 --rm --restart=Never sh
    If you don't see a command prompt, try pressing enter.
    / # nslookup kubernetes
    Server:    10.0.0.2
    Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local
    Name:      kubernetes
    Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local
    / # nslookup my-service
    Server:    10.0.0.2
    Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local
    Name:      my-service
    Address 1: 10.0.0.133 my-service.default.svc.cluster.local
    # Resolution succeeded

- Resolving a Service in a different namespace

    [root@ZhangSiming ~]# kubectl get svc -n default
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        21d
    my-service   NodePort    10.0.0.133   <none>        80:30001/TCP   131m
    [root@ZhangSiming ~]# kubectl run -it --image=busybox:1.28.4 --rm --restart=Never sh -n kube-system
    If you don't see a command prompt, try pressing enter.
    / # nslookup my-service
    Server:    10.0.0.2
    Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local
    nslookup: can't resolve 'my-service'
    / # nslookup my-service.default
    Server:    10.0.0.2
    Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local
    Name:      my-service.default
    Address 1: 10.0.0.133 my-service.default.svc.cluster.local
    # Appending .namespace to the Service NAME resolves a Service across namespaces
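The cross-namespace behaviour follows from the DNS search list that a Pod receives. The sketch below assumes the usual search order (the Pod's own namespace first, then svc.<cluster domain>, then the cluster domain) and the cluster.local domain configured earlier; the helper names and the set of known Services are illustrative only.

```python
def search_candidates(name, pod_namespace, cluster_domain="cluster.local"):
    """Expand a short name using the search list a Pod is assumed to get."""
    search_list = [
        f"{pod_namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]
    return [f"{name}.{suffix}" for suffix in search_list]


def resolves(name, pod_namespace, known_services, cluster_domain="cluster.local"):
    """True if any expanded candidate is the FQDN of a known Service."""
    fqdns = {f"{svc}.{ns}.svc.{cluster_domain}" for svc, ns in known_services}
    candidates = search_candidates(name, pod_namespace, cluster_domain)
    return any(candidate in fqdns for candidate in candidates)


services = {("my-service", "default"), ("kubernetes", "default")}
print(resolves("my-service", "kube-system", services))          # short name, wrong namespace
print(resolves("my-service.default", "kube-system", services))  # name qualified with namespace
```

From a Pod in kube-system, the short name expands to my-service.kube-system.svc.cluster.local, which does not exist, while my-service.default matches through the svc.cluster.local suffix.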

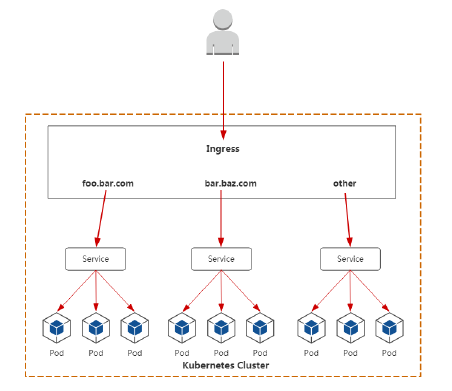

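V. Ingress

5.1 Kubernetes service categories

Cluster-internal services:

- ClusterIP: used exclusively for connections between components inside the cluster.

External services:

- NodePort: opens a listening port on every Node for external connections;

- Ingress: an Ingress is associated with Services and load-balances traffic to the Pods through an Ingress Controller. It supports layer-4 TCP/UDP and layer-7 HTTP, and is the best way to expose applications externally;

- LoadBalancer: connects to the cloud provider's LB for external access.

5.2 Deploying the Ingress Controller

The Ingress Controller associates domain names with Services and exposes those Services for external access.

Download address of the Ingress Controller YAML:

https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml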

    [root@ZhangSiming ~]# mkdir ingress
    [root@ZhangSiming ~]# cd ingress/
    [root@ZhangSiming ingress]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml
    --2019-03-21 18:44:09--  https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml
    Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.228.133
    Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.228.133|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 5976 (5.8K) [text/plain]
    Saving to: ‘mandatory.yaml’

    100%[======================================>] 5,976       --.-K/s   in 0s

    2019-03-21 18:44:10 (38.5 MB/s) - ‘mandatory.yaml’ saved [5976/5976]

    [root@ZhangSiming ingress]# ls
    mandatory.yaml

    # View the Ingress Controller YAML file
    [root@ZhangSiming ingress]# cat mandatory.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-configuration
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: tcp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: udp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - endpoints
          - nodes
          - pods
          - secrets
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - "extensions"
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
      - apiGroups:
          - "extensions"
        resources:
          - ingresses/status
        verbs:
          - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - pods
          - secrets
          - namespaces
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - configmaps
        resourceNames:
          # Defaults to "<election-id>-<ingress-class>"
          # Here: "<ingress-controller-leader>-<nginx>"
          # This has to be adapted if you change either parameter
          # when launching the nginx-ingress-controller.
          - "ingress-controller-leader-nginx"
        verbs:
          - get
          - update
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - create
      - apiGroups:
          - ""
        resources:
          - endpoints
        verbs:
          - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          # Use the host network: the Pod shares the network of the Node it is
          # scheduled to, so ports 80 and 443 are opened directly on that Node.
          # Those ports must be free on the Node, otherwise the controller
          # will not start.
          hostNetwork: true
          serviceAccountName: nginx-ingress-serviceaccount
          containers:
            - name: nginx-ingress-controller
              image: lizhenliang/nginx-ingress-controller:0.20.0
              args:
                - /nginx-ingress-controller
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  drop:
                    - ALL
                  add:
                    - NET_BIND_SERVICE
                # www-data -> 33
                runAsUser: 33
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: KUBERNETES_MASTER
                  value: http://192.168.17.130:8080  # this line must be added, otherwise an error is reported
              ports:
                - name: http
                  containerPort: 80
                - name: https
                  containerPort: 443
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
    ---

    # Deploy the Ingress Controller
    [root@ZhangSiming ingress]# kubectl create -f mandatory.yaml
    namespace/ingress-nginx created
    configmap/nginx-configuration created
    configmap/tcp-services created
    configmap/udp-services created
    serviceaccount/nginx-ingress-serviceaccount created
    clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
    role.rbac.authorization.k8s.io/nginx-ingress-role created
    rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
    clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
    deployment.apps/nginx-ingress-controller created
    [root@ZhangSiming ingress]# kubectl get pods -n ingress-nginx
    NAME                                        READY   STATUS    RESTARTS   AGE
    nginx-ingress-controller-7d8dc989d6-qzcll   1/1     Running   0          4m52s
    # Started successfully
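Because the controller Deployment sets `hostNetwork: true`, ports 80 and 443 must be free on whichever Node the Pod is scheduled to. The following is a minimal sketch of a pre-check to run on a Node before deploying. It assumes the `ss` tool (from iproute2) is installed there, and the helper name `port_in_use` is hypothetical, introduced only for this illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if a listening TCP socket is bound to the given port.
# It reads `ss -lnt` style output from stdin, so it can also be checked against sample text.
port_in_use() {
  local port="$1"
  # Column 4 of `ss -lnt` is "Local Address:Port"; match a trailing ":<port>".
  awk -v p=":${port}" 'NR > 1 && $4 ~ (p "$") { found = 1 } END { exit !found }'
}

# Only query the system if `ss` is actually available (assumption: iproute2 is installed).
if command -v ss >/dev/null 2>&1; then
  for port in 80 443; do
    if ss -lnt | port_in_use "$port"; then
      echo "port $port is already in use on this Node"
    else
      echo "port $port is free"
    fi
  done
fi
```

If either port is reported as in use, free it or schedule the controller onto a different Node before running `kubectl create -f mandatory.yaml`.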

5.2 Writing Ingress rules

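With the Ingress Controller deployed, the cluster is now able to expose services through Ingress. How each service is actually exposed, however, is something we have to define ourselves by writing Ingress rules.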

    [root@ZhangSiming ingress]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        21d
    my-service   NodePort    10.0.0.21    <none>        80:30001/TCP   9s
    [root@ZhangSiming ingress]# vim ingress.yaml
    [root@ZhangSiming ingress]# cat ingress.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: example-ingress
    spec:
      rules:
      - host: example.foo.com          # domain name
        http:
          paths:
          - backend:
              serviceName: my-service  # bind to the NodePort Service
              servicePort: 80          # use the Service's cluster-internal port
    [root@ZhangSiming ingress]# kubectl create -f ingress.yaml
    ingress.extensions/example-ingress created
    [root@ZhangSiming ingress]# kubectl get ingress
    NAME              HOSTS             ADDRESS   PORTS   AGE
    example-ingress   example.foo.com             80      12s
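The rule above routes on the HTTP `Host` header, so it can be exercised even before DNS or a hosts entry for `example.foo.com` exists. The following is a minimal sketch, assuming the controller Pod runs with `hostNetwork: true` and therefore listens on port 80 of its Node. The helper name `check_ingress` is hypothetical, and the Node IP is a placeholder you must supply yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: request "/" on the given Node with an explicit Host header
# and print only the HTTP status code returned through the Ingress Controller.
check_ingress() {
  local node_ip="$1" host="$2"
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 5 \
    -H "Host: ${host}" "http://${node_ip}/"
}

# Run only when both arguments are given, e.g.:
#   ./check_ingress.sh <node-ip> example.foo.com
if [ "$#" -eq 2 ]; then
  check_ingress "$1" "$2"
fi
```

A status code coming from the backend Pods suggests the rule matched. A 404 served by the controller's default backend usually means the `Host` header did not match any rule.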