@Arslan6and6

2016-08-29T02:24:01.000000Z

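[Assignment 22] Yin Jie

Chapter 12: Oozie, a Big Data Collaboration Framework

---Action Exercises in Oozie Workflow and Using the Coordinator

Assignment description:

Following the lectures and the official reference, practice and test the common actions in Oozie Workflow, and read the official Oozie documentation carefully to improve English reading skills. The specific requirements are:

1) Key points of configuring the MapReduce action, and how to configure it neatly

2) Points to watch when configuring the Shell action, which is common in enterprise use

3) How to use the Oozie Coordinator to schedule a workflow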

1) Key points of configuring the MapReduce action, and how to configure it neatly

Key configuration points

job.properties

---------defines the location of the workflow

workflow.xml

---------start

---------action

---------mapreduce / shell

---------error

---------ok

---------kill

---------end

lib

---------holds the resources the job needs (JAR files)
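The layout above can be checked locally before anything is uploaded. The following is only a sketch: the function name `check_app_layout` is hypothetical, and the rule that `lib` must contain at least one JAR is an assumption that fits a MapReduce application (a shell-only application may not need one).

```python
from pathlib import Path
import tempfile


def check_app_layout(app_dir):
    """Return a list of problems found in a local Oozie application directory."""
    app = Path(app_dir)
    problems = []
    for required in ("job.properties", "workflow.xml"):
        if not (app / required).is_file():
            problems.append(f"missing file: {required}")
    lib = app / "lib"
    if not lib.is_dir():
        problems.append("missing directory: lib")
    elif not any(lib.glob("*.jar")):
        # Assumption: a MapReduce app needs at least one JAR in lib/.
        problems.append("lib contains no JAR files")
    return problems


if __name__ == "__main__":
    # Build a throwaway directory that mimics the layout, then check it.
    with tempfile.TemporaryDirectory() as tmp:
        app = Path(tmp) / "map-reduce"
        (app / "lib").mkdir(parents=True)
        (app / "job.properties").write_text("queueName=default\n")
        (app / "workflow.xml").write_text("<workflow-app/>\n")
        print(check_app_layout(app))  # lib exists but holds no JAR yet
```

An empty list means the three parts named above are all present.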

Configuration steps:

1. Copy /opt/modules/oozie-4.0.0-cdh5.3.6/examples/apps/map-reduce into a new directory, /opt/modules/oozie-4.0.0-cdh5.3.6/oozie-apps, so that the map-reduce template from the examples can be reused.

2. Create /user/beifeng/oozie-apps in HDFS.

3. Copy the MapReduce JAR to be used into oozie-apps/map-reduce/lib/.

cp /home/beifeng/jar/swordcount.jar /opt/modules/oozie-4.0.0-cdh5.3.6/oozie-apps/map-reduce/lib/

4. Configure job.properties; make sure the directories match.

    nameNode=hdfs://hadoop-senior.ibeifeng.com:8020
    jobTracker=hadoop-senior.ibeifeng.com:8032
    queueName=default
    examplesRoot=oozie-apps/map-reduce
    oozie.wf.application.path=${nameNode}/user/beifeng/${examplesRoot}/workflow.xml
    outputDir=map-reduce-swc
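To see which HDFS path `oozie.wf.application.path` ends up pointing at, the `${...}` references can be expanded by hand. The sketch below does this with a small parser; `parse_properties` and `resolve` are hypothetical helpers, and they only imitate simple substitution, not Oozie's full EL evaluation.

```python
import re


def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def resolve(value, props, depth=10):
    """Expand ${name} references found in props; unknown names are left as-is."""
    pattern = re.compile(r"\$\{([^}]+)\}")
    for _ in range(depth):
        expanded = pattern.sub(lambda m: props.get(m.group(1), m.group(0)), value)
        if expanded == value:
            break
        value = expanded
    return value


JOB_PROPERTIES = """\
nameNode=hdfs://hadoop-senior.ibeifeng.com:8020
jobTracker=hadoop-senior.ibeifeng.com:8032
queueName=default
examplesRoot=oozie-apps/map-reduce
oozie.wf.application.path=${nameNode}/user/beifeng/${examplesRoot}/workflow.xml
outputDir=map-reduce-swc
"""

if __name__ == "__main__":
    props = parse_properties(JOB_PROPERTIES)
    print(resolve(props["oozie.wf.application.path"], props))
```

The printed path should match the location the map-reduce folder is uploaded to in HDFS.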

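5. Configure workflow.xml.

Set the workflow name and the input directory; the output directory is left unchanged here.

    <!-- Workflow name; name="..." must not exceed 20 characters, or an error is raised -->
    <workflow-app xmlns="uri:oozie:workflow:0.2" name="swc-map-reduce-wf">

    <!-- Directory to delete before the job runs -->
    <delete path="${nameNode}/user/${wf:user()}/${examplesRoot}/output-data/${outputDir}"/>

    <!-- Input directory -->
    <property><name>mapred.input.dir</name><value>/user/${wf:user()}/${examplesRoot}/input-data</value></property>

Following mapred.input.dir, create an input-data directory under /opt/modules/oozie-4.0.0-cdh5.3.6/oozie-apps/map-reduce.

Copy the test file sort.txt into input-data, so that mapred.input.dir points to an existing directory that contains data.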

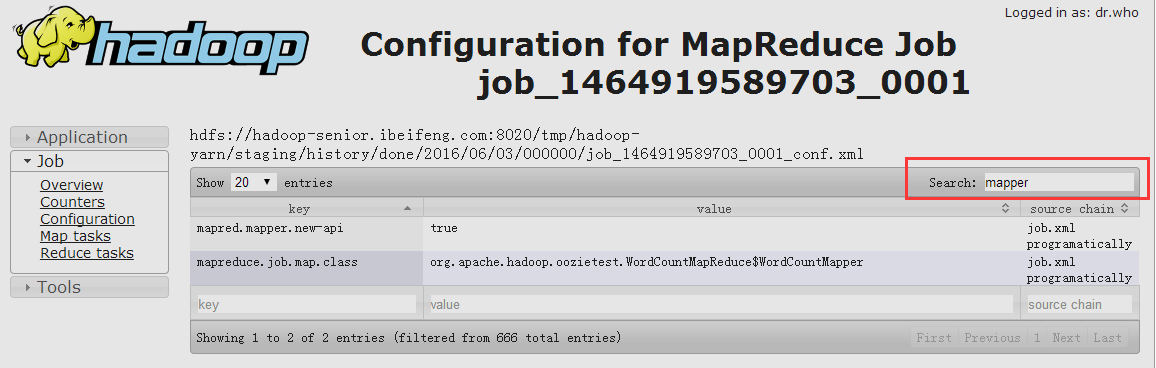

Mapper configuration

<property><name>mapred.mapper.class</name><value>org.apache.oozie.example.SampleMapper</value></property>

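Replace the original mapred.mapper.class setting above and enable the new API, using the parameters of the JAR that was run beforehand as a reference.

    <!-- Enable the new API to avoid compatibility errors -->
    <property><name>mapred.mapper.new-api</name><value>true</value></property>
    <!-- Replaces mapred.mapper.class, following the parameters of the JAR run beforehand -->
    <property><name>mapreduce.job.map.class</name><value>org.apache.hadoop.oozietest.WordCountMapReduce$WordCountMapper</value></property>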

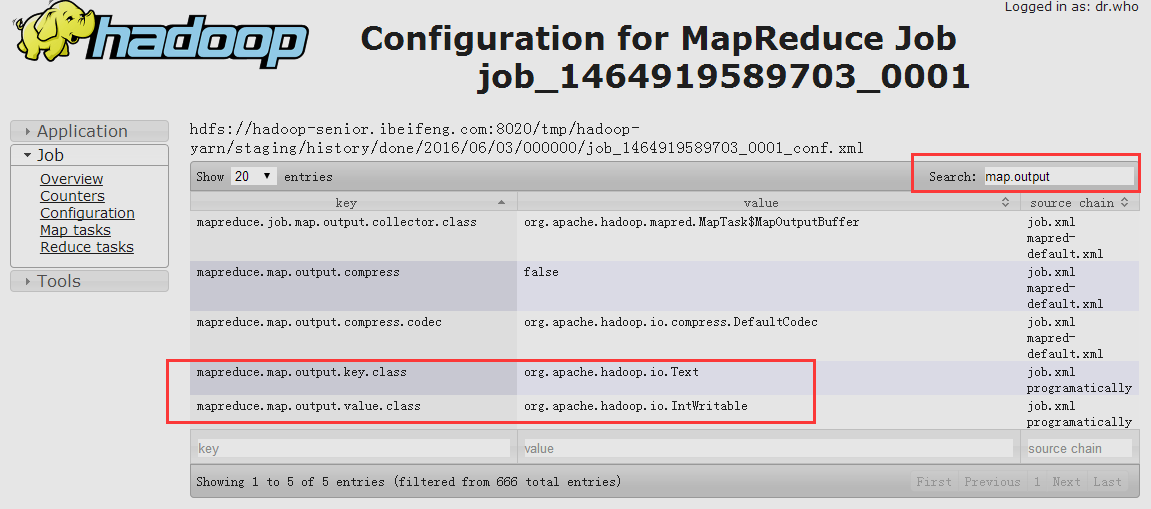

Add mapreduce.map.output.key.class and mapreduce.map.output.value.class

    <property><name>mapreduce.map.output.key.class</name><value>org.apache.hadoop.io.Text</value></property>
    <property><name>mapreduce.map.output.value.class</name><value>org.apache.hadoop.io.IntWritable</value></property>

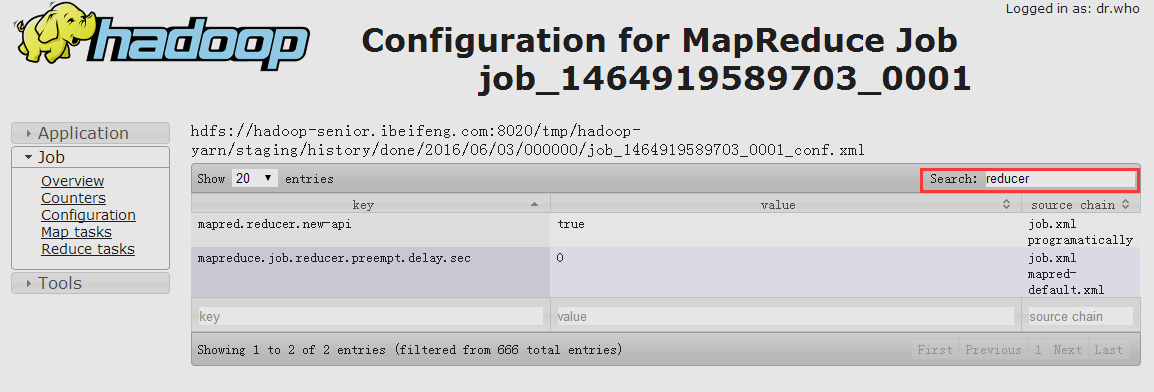

Reducer configuration

<property><name>mapred.reducer.new-api</name><value>true</value></property>

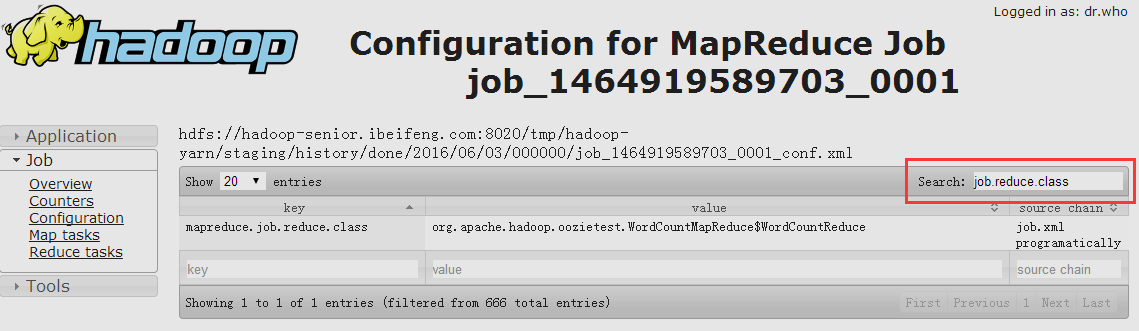

<property><name>mapreduce.job.reduce.class</name><value>org.apache.hadoop.oozietest.WordCountMapReduce$WordCountReduce</value></property>

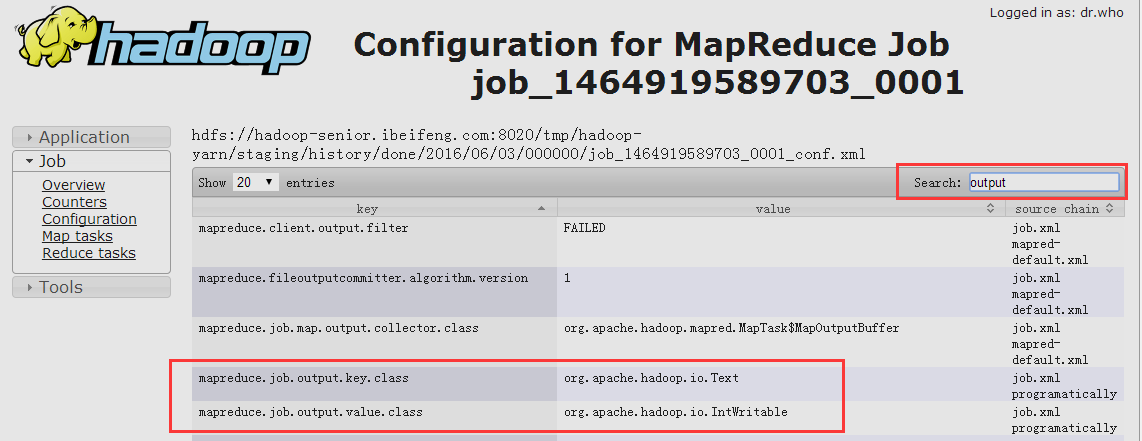

    <property><name>mapreduce.job.output.key.class</name><value>org.apache.hadoop.io.Text</value></property>
    <property><name>mapreduce.job.output.value.class</name><value>org.apache.hadoop.io.IntWritable</value></property>

The complete workflow.xml is as follows:

    <workflow-app xmlns="uri:oozie:workflow:0.2" name="swc-map-reduce-wf">
        <start to="mr-node"/>
        <action name="mr-node">
            <map-reduce>
                <job-tracker>${jobTracker}</job-tracker>
                <name-node>${nameNode}</name-node>
                <prepare>
                    <delete path="${nameNode}/user/${wf:user()}/${examplesRoot}/output-data/${outputDir}"/>
                </prepare>
                <configuration>
                    <property><name>mapred.job.queue.name</name><value>${queueName}</value></property>
                    <!--mapper-->
                    <property><name>mapred.mapper.new-api</name><value>true</value></property>
                    <property><name>mapreduce.job.map.class</name><value>org.apache.hadoop.oozietest.WordCountMapReduce$WordCountMapper</value></property>
                    <property><name>mapreduce.map.output.key.class</name><value>org.apache.hadoop.io.Text</value></property>
                    <property><name>mapreduce.map.output.value.class</name><value>org.apache.hadoop.io.IntWritable</value></property>
                    <!--reducer-->
                    <property><name>mapred.reducer.new-api</name><value>true</value></property>
                    <property><name>mapreduce.job.reduce.class</name><value>org.apache.hadoop.oozietest.WordCountMapReduce$WordCountReduce</value></property>
                    <property><name>mapreduce.job.output.key.class</name><value>org.apache.hadoop.io.Text</value></property>
                    <property><name>mapreduce.job.output.value.class</name><value>org.apache.hadoop.io.IntWritable</value></property>
                    <!--putdir-->
                    <property><name>mapred.input.dir</name><value>/user/${wf:user()}/${examplesRoot}/input-data</value></property>
                    <property><name>mapred.output.dir</name><value>/user/${wf:user()}/${examplesRoot}/output-data/${outputDir}</value></property>
                </configuration>
            </map-reduce>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>

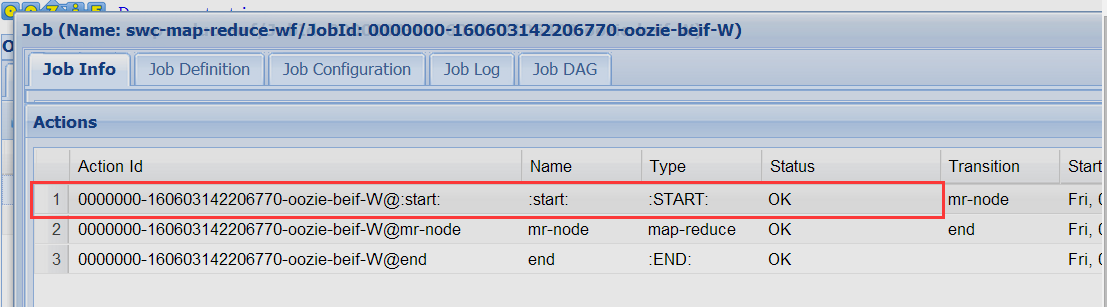
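A workflow like this one only runs cleanly if every `to="..."` transition names a node that exists. A rough local check can be sketched with the standard library; the function `dangling_transitions` is hypothetical, the sample is a trimmed stand-in for the full file (its empty `<map-reduce/>` is not schema-valid), and this is no substitute for Oozie's own validation.

```python
import xml.etree.ElementTree as ET


def local(tag):
    """Strip the '{namespace}' prefix ElementTree puts on tag names."""
    return tag.rsplit("}", 1)[-1]


def dangling_transitions(workflow_xml):
    """Return transition targets that do not match any node name."""
    root = ET.fromstring(workflow_xml)
    # Named nodes are direct children of <workflow-app>: action, kill, end, ...
    names = {child.get("name") for child in root if child.get("name")}
    targets = []
    for elem in root.iter():
        if local(elem.tag) in ("start", "ok", "error") and elem.get("to"):
            targets.append(elem.get("to"))
    return sorted(set(targets) - names)


# Trimmed sample for illustration only.
WORKFLOW = """\
<workflow-app xmlns="uri:oozie:workflow:0.2" name="swc-map-reduce-wf">
    <start to="mr-node"/>
    <action name="mr-node">
        <map-reduce/>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail"><message>failed</message></kill>
    <end name="end"/>
</workflow-app>
"""

if __name__ == "__main__":
    print(dangling_transitions(WORKFLOW))
```

An empty list means start, ok and error all point at defined nodes.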

Running the job

Upload the prepared map-reduce folder to the Oozie working folder oozie-apps in HDFS.

Also delete the default JAR, oozie-examples-4.0.0-cdh5.3.6.jar, from oozie-apps/map-reduce/lib.

bin/hdfs dfs -put /opt/modules/oozie-4.0.0-cdh5.3.6/oozie-apps/map-reduce/ oozie-apps

Adapt the run command from the examples to get the command used here.

Original examples command:

    bin/oozie job -oozie http://hadoop-senior.ibeifeng.com:11000/oozie -config examples/apps/map-reduce/job.properties -run

Current command:

    bin/oozie job -oozie http://hadoop-senior.ibeifeng.com:11000/oozie -config oozie-apps/map-reduce/job.properties -run

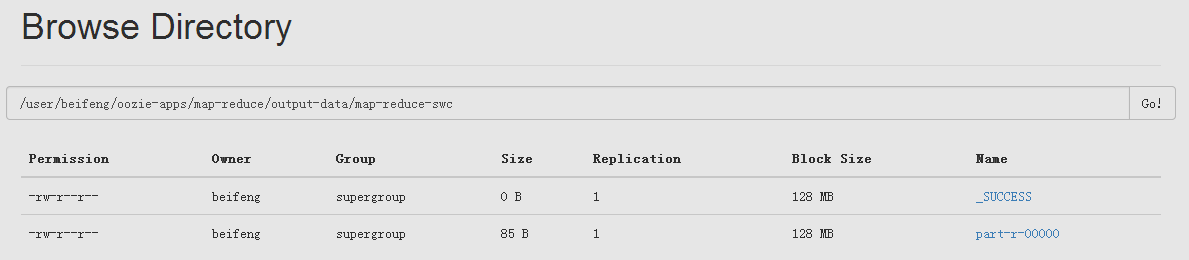

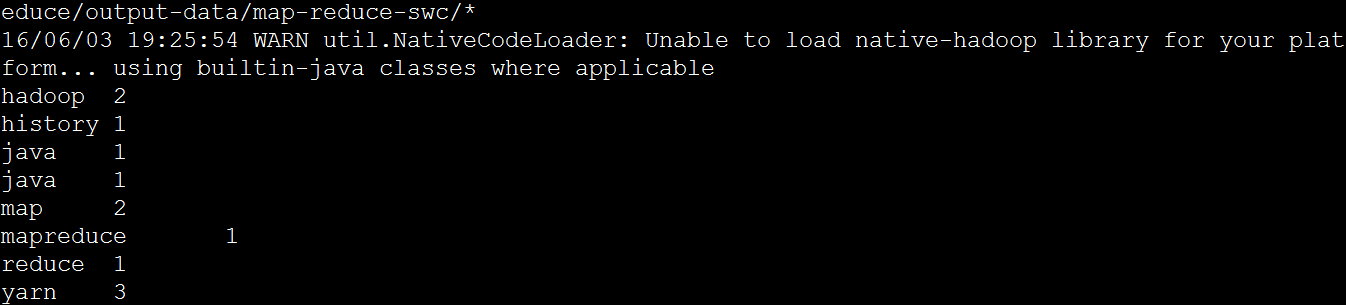

Check the execution result

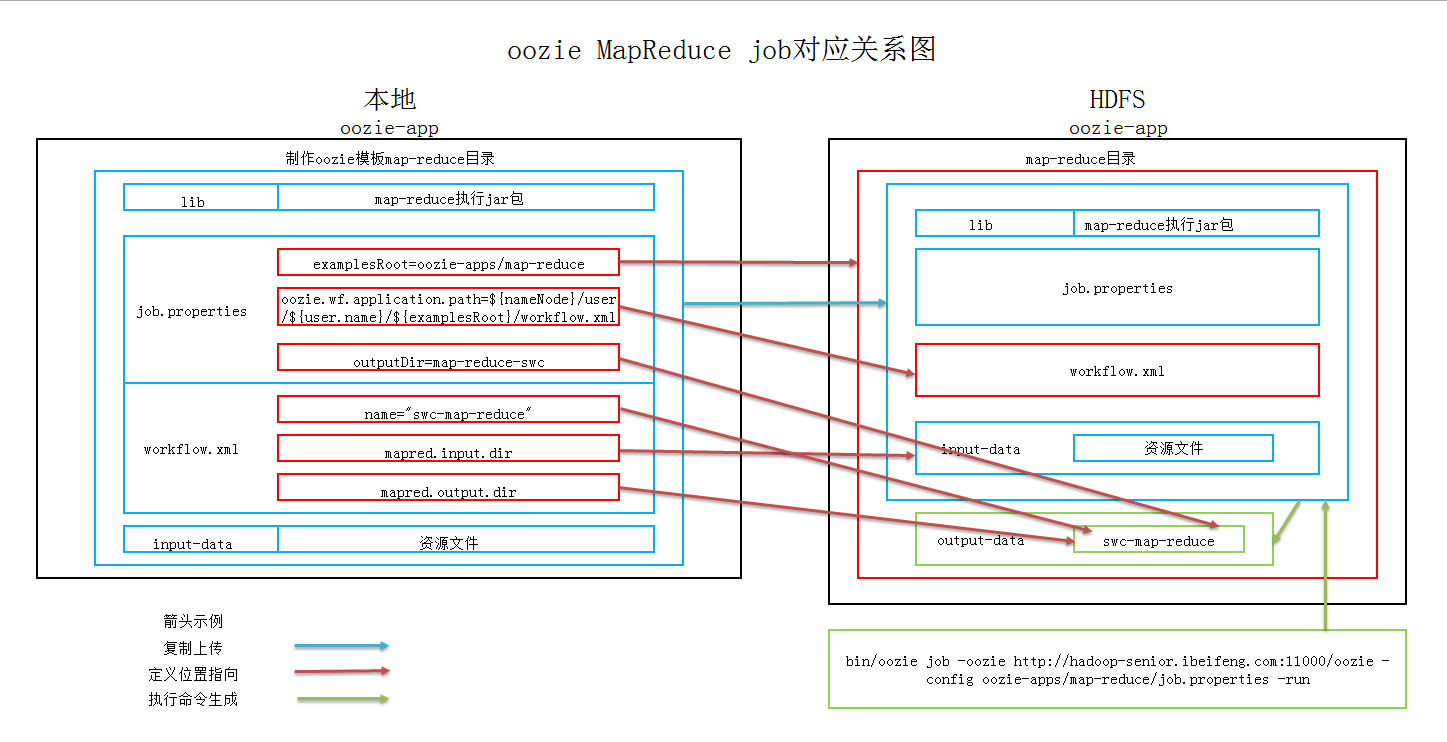

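Summary of the configuration correspondences

2) Points to watch when configuring the Shell action, which is common in enterprise use

1. Create a shell script

    vi meminfo.sh

    #!/bin/bash
    /usr/bin/free -m >> /tmp/meminfo

2. Copy the shell folder under examples/apps into the working directory oozie-apps, and rename it mem-shell for convenience.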

3. Configure job.properties

    nameNode=hdfs://hadoop-senior.ibeifeng.com:8020
    jobTracker=hadoop-senior.ibeifeng.com:8032
    queueName=default
    examplesRoot=oozie-apps/mem-shell
    oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/
    EXEC=meminfo.sh

4. Configure workflow.xml

① name="mem-shell-wf"

② Delete the decision node, the kill node with name="fail-output", the argument tags, and similar tags

③ Follow the syntax on the official page http://oozie.apache.org/docs/4.0.0/DG_ShellActionExtension.html

    <exec>${EXEC}</exec>
    <argument>A</argument>
    <argument>B</argument>
    <file>${EXEC}#${EXEC}</file> <!--Copy the executable to compute node's current working directory -->

Change exec and file.

Arguments are usually set inside the shell script itself; setting them here adds to the work needed whenever they change.

file tag: per the comment "Copy the executable to compute node's current working directory", a bare ${EXEC} cannot be used as the path. An absolute path must be written, otherwise the job fails.

    <exec>${EXEC}</exec>
    <file>/user/beifeng/oozie-apps/mem-shell/${EXEC}#${EXEC}</file>
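The value of the file tag has two parts: the HDFS path before `#` and the link name the script gets in the task's working directory after it. The sketch below splits such a value and tests whether the path is absolute; both helper names are hypothetical, and the fallback to the base name when `#` is missing is an assumption.

```python
def split_file_spec(spec):
    """Split a <file> value of the form 'path#link' into (path, link name)."""
    path, sep, link = spec.partition("#")
    if not sep:
        # Assumption: without '#', the link name is the file's base name.
        link = path.rsplit("/", 1)[-1]
    return path, link


def is_absolute(path):
    """True when the HDFS path starts at the root or carries a scheme."""
    return path.startswith("/") or "://" in path


if __name__ == "__main__":
    exec_name = "meminfo.sh"  # value of EXEC in job.properties
    spec = f"/user/beifeng/oozie-apps/mem-shell/{exec_name}#{exec_name}"
    path, link = split_file_spec(spec)
    print(path, link, is_absolute(path))
```

With the original template value `${EXEC}#${EXEC}`, the path part would be just `meminfo.sh`, which `is_absolute` reports as relative.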

Change the ok transition to end

<ok to="end"/>

④ Upload the mem-shell folder to HDFS

bin/hdfs dfs -put /opt/modules/oozie-4.0.0-cdh5.3.6/oozie-apps/mem-shell/ oozie-apps

⑤ Run the command

bin/oozie job -oozie http://hadoop-senior.ibeifeng.com:11000/oozie -config oozie-apps/mem-shell/job.properties -run

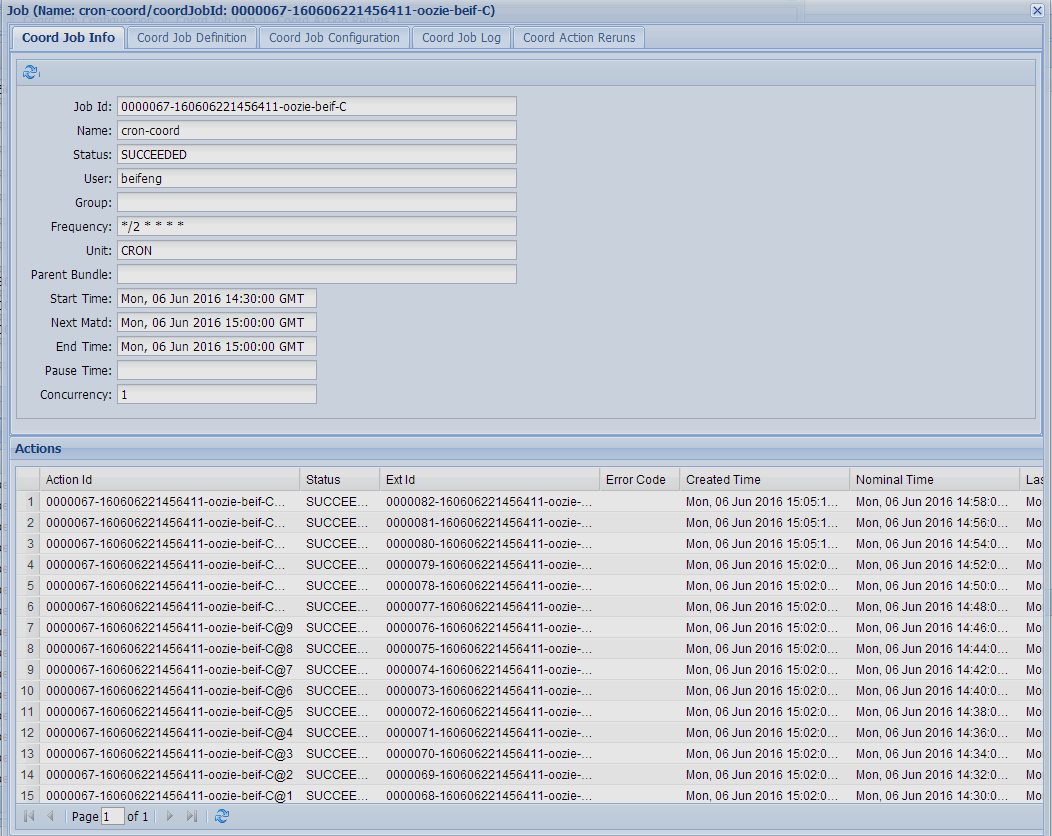

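3) How to use the Oozie Coordinator to schedule a workflow

1. Confirm and unify the time zone across the cluster servers. Oozie's reference is Coordinated Universal Time (UTC), which for this purpose is the same as Greenwich Mean Time (GMT).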

date -R

Mon, 06 Jun 2016 20:38:57 +0800

+0800 is the UTC+8 zone, so the time zone is correct.
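The offset in the `date -R` output tells how far the host is from UTC. A small sketch using only the standard library converts the sample timestamp above; `to_utc` is a hypothetical helper name.

```python
from datetime import timezone
from email.utils import parsedate_to_datetime


def to_utc(rfc2822_text):
    """Parse `date -R` output and return the same instant in UTC."""
    return parsedate_to_datetime(rfc2822_text).astimezone(timezone.utc)


if __name__ == "__main__":
    sample = "Mon, 06 Jun 2016 20:38:57 +0800"
    local_time = parsedate_to_datetime(sample)
    print(local_time.utcoffset())      # offset reported by the host
    print(to_utc(sample).isoformat())  # same instant expressed in UTC
```

Running the same conversion on each server's `date -R` output is one way to confirm they agree.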

If it is not correct, add the following to oozie-site.xml:

<property><name>oozie.processing.timezone</name><value>GMT+0800</value></property>

Change the system time zone

    # rm -rf /etc/localtime
    # ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

Change the time zone in the JS file $OOZIE_HOME/oozie-server/webapps/oozie/oozie-console.js

    function getTimeZone() {
        Ext.state.Manager.setProvider(new Ext.state.CookieProvider());
        return Ext.state.Manager.get("TimezoneId","GMT+0800");
    }

2. Use the workflow.xml from the mem-shell example

    rm -rf oozie-apps/cron/workflow.xml
    cp oozie-apps/mem-shell/workflow.xml oozie-apps/cron/

3. Modify job.properties

    nameNode=hdfs://hadoop-senior.ibeifeng.com:8020
    jobTracker=hadoop-senior.ibeifeng.com:8032
    queueName=default
    examplesRoot=oozie-apps/cron
    oozie.coord.application.path=${nameNode}/user/${user.name}/${examplesRoot}/
    start=2016-06-06T21:30+0800
    end=2016-06-06T23:00+0800
    workflowAppUri=${nameNode}/user/${user.name}/${examplesRoot}/
    EXEC=meminfo.sh

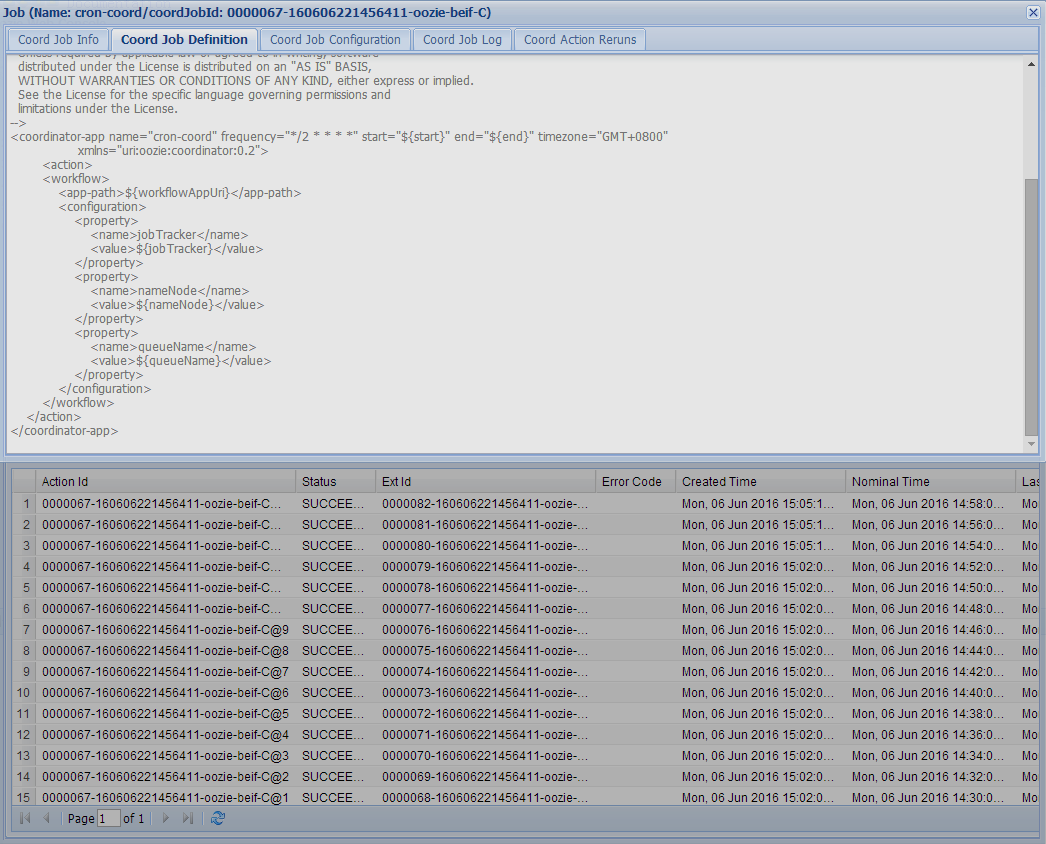

4. Modify coordinator.xml

    <coordinator-app name="cron-coord" frequency="${coord:minutes(2)}" start="${start}" end="${end}" timezone="GMT+0800"
                     xmlns="uri:oozie:coordinator:0.2">

or

    <coordinator-app name="cron-coord" frequency="*/2 * * * *" start="${start}" end="${end}" timezone="GMT+0800"
                     xmlns="uri:oozie:coordinator:0.2">
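With a 2-minute frequency and the start and end values from job.properties, the expected run times can be listed ahead of submission. The sketch below is an approximation: `nominal_times` is a hypothetical helper, and it assumes that the end time is exclusive.

```python
from datetime import datetime, timedelta

TIME_FORMAT = "%Y-%m-%dT%H:%M%z"  # matches values such as 2016-06-06T21:30+0800


def nominal_times(start, end, every_minutes):
    """List run times from start up to, but not including, end (assumed exclusive)."""
    current = datetime.strptime(start, TIME_FORMAT)
    stop = datetime.strptime(end, TIME_FORMAT)
    step = timedelta(minutes=every_minutes)
    times = []
    while current < stop:
        times.append(current)
        current += step
    return times


if __name__ == "__main__":
    runs = nominal_times("2016-06-06T21:30+0800", "2016-06-06T23:00+0800", 2)
    print(len(runs), runs[0].strftime("%H:%M"), runs[-1].strftime("%H:%M"))
```

Comparing this list with the entries appended to /tmp/meminfo gives a quick sanity check that no run was skipped.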

5. coordinator.xml schedules the job every 2 minutes, which is faster than the 5-minute minimum that Oozie enforces by default, so the default check has to be turned off.

The setting in oozie-default.xml is:

    <property>
        <name>oozie.service.coord.check.maximum.frequency</name>
        <value>true</value>
        <description>
            When true, Oozie will reject any coordinators with a frequency faster than 5 minutes. It is not recommended to disable
            this check or submit coordinators with frequencies faster than 5 minutes: doing so can cause unintended behavior and
            additional system stress.
        </description>
    </property>

Add this setting to oozie-site.xml with its value set to false.

    <property>
        <name>oozie.service.coord.check.maximum.frequency</name>
        <value>false</value>
        <description>
            When true, Oozie will reject any coordinators with a frequency faster than 5 minutes. It is not recommended to disable
            this check or submit coordinators with frequencies faster than 5 minutes: doing so can cause unintended behavior and
            additional system stress.
        </description>
    </property>

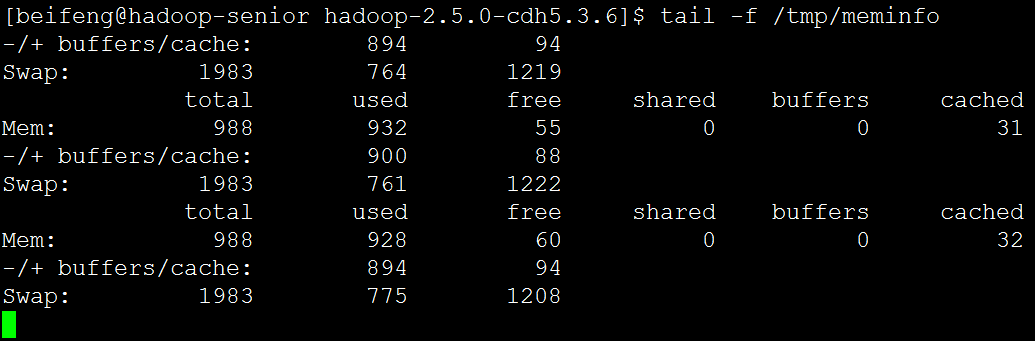
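The rule in the property description can be restated as a tiny predicate. This is not Oozie's code, only a sketch of the described behavior; `accepts_frequency` and `MIN_FREQUENCY_MINUTES` are hypothetical names.

```python
MIN_FREQUENCY_MINUTES = 5  # limit quoted in the property description


def accepts_frequency(frequency_minutes, check_enabled=True):
    """Mimic the described rule: reject fast coordinators while the check is on."""
    if check_enabled and frequency_minutes < MIN_FREQUENCY_MINUTES:
        return False
    return True


if __name__ == "__main__":
    # The 2-minute coordinator is rejected with the check on, accepted with it off.
    for enabled in (True, False):
        print(enabled, accepts_frequency(2, check_enabled=enabled))
```

As the description warns, turning the check off trades safety for flexibility, so it is better suited to a test cluster.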

6. Run the command and check the result

After restarting Oozie:

bin/oozie job -oozie http://hadoop-senior.ibeifeng.com:11000/oozie -config oozie-apps/cron/job.properties -run

    tail -f /tmp/meminfo
                 total       used       free     shared    buffers     cached
    Mem:           988        938         49          0          1         50
    -/+ buffers/cache:        887        101
    Swap:         1983        788       1195
                 total       used       free     shared    buffers     cached
    Mem:           988        940         48          0          1         57
    -/+ buffers/cache:        881        107
    Swap:         1983        790       1193
                 total       used       free     shared    buffers     cached
    Mem:           988        928         60          0          0         51
    -/+ buffers/cache:        876        112
    Swap:         1983        799       1184
                 total       used       free     shared    buffers     cached
    Mem:           988        939         49          0          0         60
    -/+ buffers/cache:        877        111
    Swap:         1983        797       1186
                 total       used       free     shared    buffers     cached
    Mem:           988        938         50          0          1         50
    -/+ buffers/cache:        886        102
    Swap:         1983        790       1193
                 total       used       free     shared    buffers     cached